« MySQL Performance: Welcome 5.6 RC, the best MySQL ever | Main

Monday, 15 October, 2012

MySQL Performance: InnoDB Purge Thread(s) in 5.6

NOTE: this article I've started to write yet before summer vacations,

but timing was against me, and I'm able to finish and publish it only

now. While my investigations are continuing, you'll find here the

explanations why Vadim observed unexpected

performance improvements when using a single InnoDB BP instance

rather 8, and some other

things as well..

The InnoDB Buffer Pool (BP) Instances feature was introduced since MySQL 5.5.4 and presented for the first time during MySQL UC2010. This solution was the key point on its time, as without BP instances no one from other newly added great features was able to show any positive impact on performance (as otherwise all the stuff was still remaining blocked on the BP mutex contention (you may find more details about here and here ))..

During MySQL UC2010 we presented the following graph representing TPS levels reached on db_STRESS workload:

Well, you may quickly remark that Adaptive Flushing in MySQL 5.5.4 was not yet really working ;-)

However, there are 4 tests on the graph executed sequentially, but each one with a different number of BP instances:

- #1 -- innodb_buffer_pool_instances=1

- #2 -- innodb_buffer_pool_instances=2

- #3 -- innodb_buffer_pool_instances=4

- #4 -- innodb_buffer_pool_instances=8

And as you can see the difference in TPS between the test #1 and #4 is not far from 50% ;-)

The improvement was huge, and since then within all my next benchmarks I've used 8 or 16 BP instances always "by default" without even looking on impact -- performance may be only better, no? ;-)

However, having several BP instances will not always guarantee that you'll avoid BP mutex contention.. - while the distribution of your data within InnoDB pages may be completely random, your workload activity may still hit manly only a subset of pages which is grouped (by no chance) within the same BP instance!.. And in this case for your workload it'll be likely you have only one instance within your Buffer Pool, which brings the fixed problem once again on the table.. (more details on how to discover if you're hitting this issue is here ).

But since MySQL 5.6 things become much more interesting!.. In MySQL 5.6 we have now not only BP instances (as in 5.5), but also improved page_hash locks which are reducing BP contentions even when some pages become more hot then others, and even if they belong to the same BP instance! And I was able to confirm it during many tests over a time, and on small servers there was even near no difference if you're using one or several BP instances..

So, nothing surprising here that for MySQL 5.6 we're willing to have the innodb_buffer_pool_instances=8 by default, right?.. ;-)

BUT!.. - for my huge surprise, MySQL QA Team reported a performance regression on their DBT2 tests once they are using innodb_buffer_pool_instances=8 instead of innodb_buffer_pool_instances=1.. - and the first thing coming in mind "it's impossible!".. - but by a long benchmarking experience I know that everything is possible ;-)) and the first step to do in such a situation is to be able to reproduce the same or similar issue on a different server (it'll avoid you to check in depth HW and OS setup/impact, etc. etc. - then very quickly you'll be able to focus only on what is really important)..

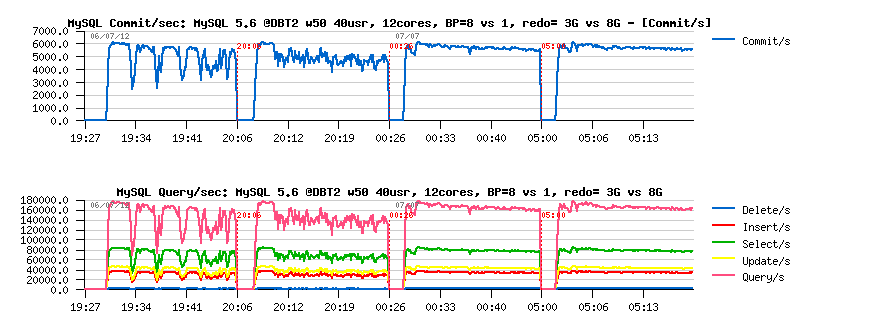

So far, I was "lucky" here! - as I was able to reproduce the same issue on my, similar to QA server, 12cores (Intel) box, and was facing to the following:

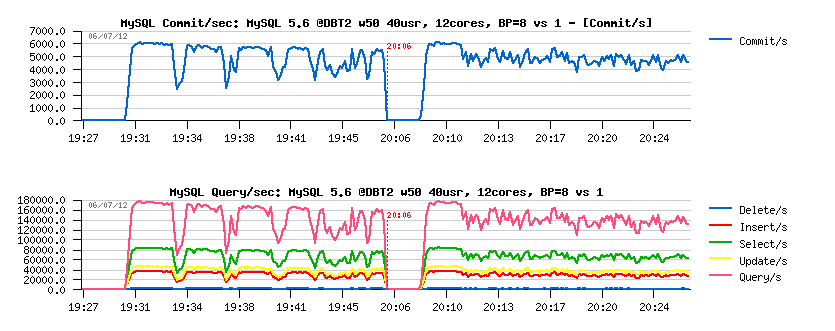

Comments :

- on the left side there is the result with MySQL 5.6 configured with innodb_buffer_pool_instances=8, and on the right side with innodb_buffer_pool_instances=1

- the workload is DBT2, 50 warehouses, 40 concurrent users

- from the results you can see that in the first case the top level on performance is still higher, but periodic drops in activity making its overall performance lower comparing to the second case... - very comparable to the test results when Adaptive flushing is disabled vs enabled ;-)

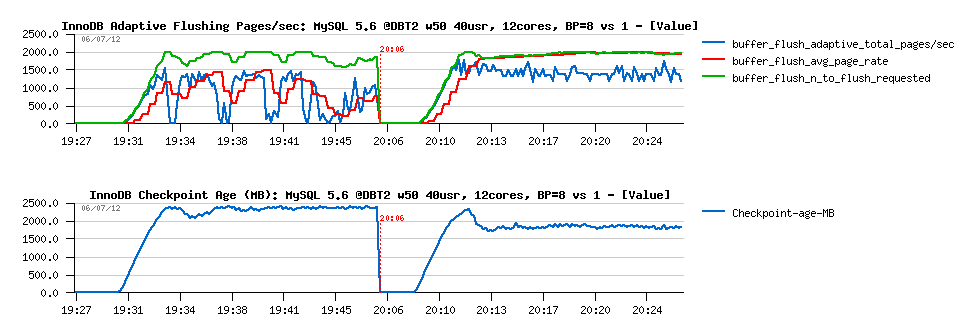

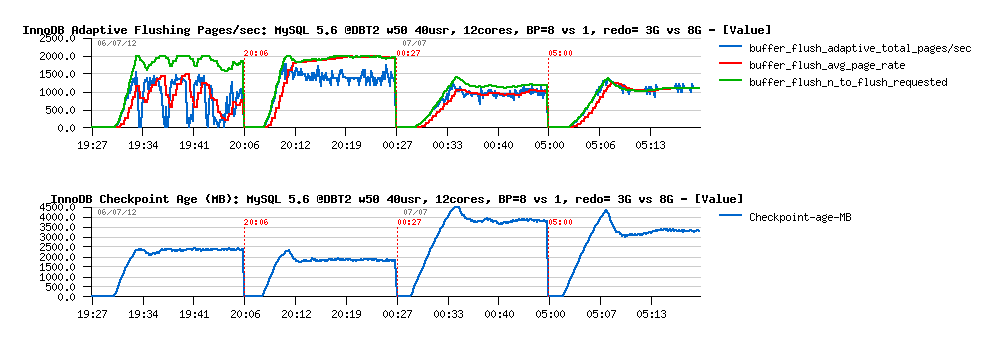

Well, Adaptive Flushing is enabled in both cases for sure, but let's get a look on the flushing activity and Checkpoint Age during this test:

Observations :

- it's clear that the flushing in the first case is not really adaptive (and having drops), while in the second case it's pretty stable and working as expected!..

- then the level of Checkpoint Age is clearly indicating that in the first case we're hitting "sync flushing" most of the time..

- Why?.. - ok, my disks are not very fast on this server, but if the problem was on the storage, it should not be better with a single BP instance either..

- and again, even when we're hitting "sync flushing", since MySQL 5.6 there should be no more killing "furious flushing" as before, because as soon as we have some free space within REDO logs -- user threads will be able to continue to work, and no drops should be observed!..

So, what is wrong here?..

My initial feeling was that there are some differences in the way how flushing is operating when several BP instances are used comparing to a single BP instance.. This feeling finally was correct ;-)) but we spent hours and hours discussing with Inaam (who is our page flushing guru in InnoDB Team) and analyzing the flushing code to find how ever it's possible?.. The only "working" explanation we've found is the following:

- when "sync flushing" is happening, InnoDB will flush sequentially instance by instance dirty pages from the Buffer Pool

- what is new in MySQL 5.6 is that as soon as there will be little bit of free REDO space available, the user threads (sessions) will be able to continue their work!

- but the main difference between single BP instance and several ones is that as soon as sync flushing is started from a single instance - the most oldest pages will be flushed first and very quickly make some free room within REDO space

- while when several BP instance are used, the most oldest page may still remain within one of the BP instances until sync flushing did not finish to flush all previous instances (sequentially), and there will be the turn of the instance keeping the most oldest page!..

Usually to prove such a thing some code tracing should be involved.. But since MySQL 5.6 many things particular to InnoDB are already instrumented within InnoDB METRICS table -- one of these is "flush sync waits" telling if there were some waits on sync flushing by user threads.

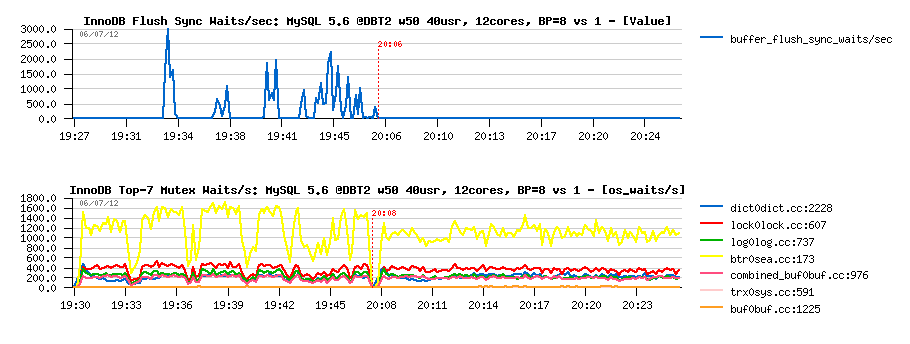

Let's get a look on the following graph:

As you can see:

- there were up to 3000 waits/sec when several BP instances were used, and no waits for a single one!

- and note that InnoDB reporting the main waits on the btr_search_latch RW-lock, let's get a look on it later..

So far, at least we found the real explanation to the observed issue! :-)

But now, what about the fix?..

Several ways are possible:

- well, the most appropriate solution here will be to involve a parallel flushing from several BP instances on the same time (but it yet should be well designed as it will involve a per instance double write buffer as well, so it's the way how it should be fixed, but for sure cannot be just available for tomorrow ;-))

- having a faster storage should remove this issue too.. ;-)) but is there any other?..

- we may still try a bigger REDO log space expecting to reduce the page flushing frequency (as it looks like this workload activity may be a good candidate for it)..

The following graphs are representing 2 additional cases more: the same 2 tests executed now with 8GB REDO log space instead of 3GB:

As you can see, there is no QPS drops anymore, regardless if we used 8 BP instances or a single one. Performance is also better, and the next graphs are explaining why:

The bigger REDO log space giving a bigger marge for pages flushing:

- more bigger REDO - more longer the page flush can be delayed..

- if during this period the same page was modified more than once - we'll reduce the number of I/O writes (flushes)

- and as you can see, the flushing activity was reduced from 1500 to 1000 pages/sec, which made it matching the storage layer performance capacity limitations, so no QPS drops are present anymore

- as well REDO logs space usage is remaining under 4.5GB and also keeping a sufficient marge for potential spikes in workload activity

OK, looks better now :-)

However, it's still unclear if there is any benefit from using more than one InnoDB Buffer Pool instance in MySQL 5.6.. - seems to me like 12cores server is enough "optimal" (means: small) to observe any BP contentions.. What about 32cores host and with a more powerful storage?..

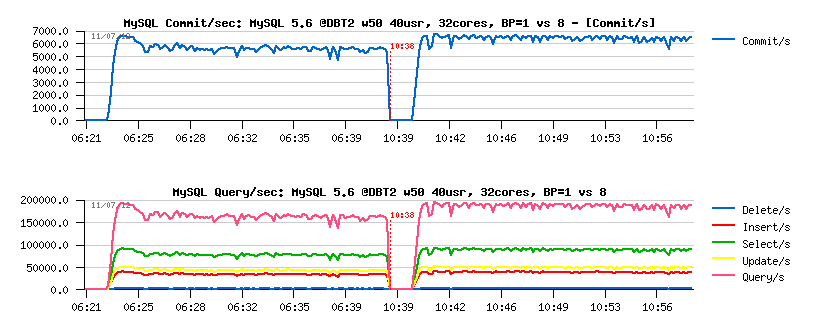

Same test with 3GB REDO on 32cores server, full SSD:

Observations :

- as you can see, there is no any QPS drops even with 3GB REDO log space

- performance with 8 BP instances is better than with a single instance

- as well and overall performance is better than on 12cores (6500 commit/sec vs 5500 commit/sec before)

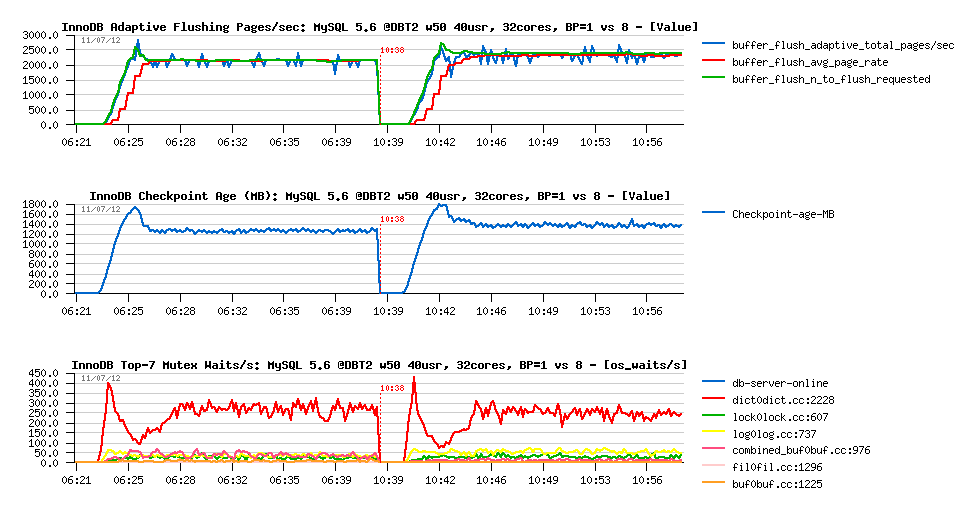

And what about flushing?.. Let's see:

Observations :

- flushing is looking pretty stable! and seems like flushing of 2000 pages/sec is the rate required by this workload

- waits on the index lock are reported by InnoDB, however it's hard to understand if they are really important as they are not expressed with time waited, just waits/sec..

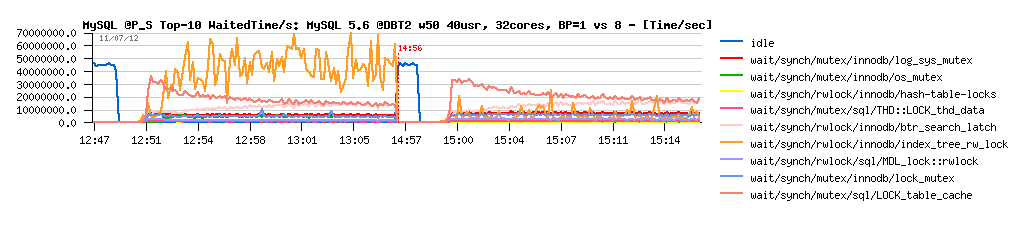

Once again, more info from PERFORMANCE SCHEMA:

Observations :

- as you can see, to top wait time on the test case with a single BP instance is corresponding to the InnoDB index lock

- and having 8 BP instances is dramatically reduced this wait! - and this explains why performance with 8 BP instance was better..

- while the gain is pretty strange, because BP instances and index lock are completely independent things..

- but the fact is the fact :-) and probably once index lock contention will be fixed, things will become once more different again.. who knows :-)

And then summary vacations arrived...

After vacations I've tried to replay the tests and get a more in depth look to the problem:

- but I was unable anymore to reproduce the same thing ;-)

- seems like my servers also got a very positive rest during a summer time ;-))

- 12cores server with 3GB REDO space did not show any QPS drops anymore..

- Surprise... - storage started to work better than before...

- So, to reproduce the same issue there was an easy way -- simply set the "innodb_io_capacity_max" to 1000 (as at least flush of 1500 pages/sec is required, my MySQL server will be in trouble now to follow the REDO speed, and I'll hit the sync flushing for sure!)..

- Ok, done, and it worked exactly as I've expected ;-)

- Just that I did not expect that in both configurations (1 and 8 BP instances) there will be QPS drops...

- Why?...

- It's good to have vacations to get a more freshly view on the same problem ;-)

- if you'll look now on the very first graphs with Checkpoint Age (when the issue just started), you may see than in case with a single BP instance there was no sync flushing at all ;-) as the Checkpoint Age level is under Max Age limit...

- So, the explanation is still good for several BP instances, but not matching a single one..

- So, why we still have QPS drops during sync flushing even if a single BP instance is used?..

- I've supposed that it's because the flush batch is too long and all threads are waiting too long on it..

- So, I've tried then to reduce the amount of flushed pages within a single loop in batch.. - but it did not help either..

Seems like the "stop the world" state is inevitable for any user threads

trying to write while sync flush is involved (which is partially

understandable as there is no free space anymore in the REDO logs, and

user threads are simply must wait).. - but it'll be great to improve

something here, and get this wait more smooth if possible.

Until then, my only advice will be: don't hit sync flushing in InnoDB and avoid it as much as possible!! That's all.

Until then, my only advice will be: don't hit sync flushing in InnoDB and avoid it as much as possible!! That's all.

To be continued... ;-)

blog comments powered by Disqus