MySQL Performance on IO-bound workloads is still extremely depending on the underlaying storage layer (thus is directly depending on your Storage Performance).. Indeed, flash storage is

definitively changing the game, but even with flash there is, as usual, "flash and flash" -- all storage vendors are improving their stuff constantly, so every time you have something new to

discover and to learn ;-)) During all my MySQL 8.0 GA tests I was very pleasantly surprised by IO performance delivered by Intel Optane SSD. However, what the storage device can deliver alone on

pure IO tests is not at all the same to what you could observe when it's used by MySQL -- unfortunately, in the past I've observed many cases when with a device claimed to be x2 times faster we were

even not observing 10% gain.. But MySQL 8.0 is probably the most best placed MySQL version today to re-visit all this IO-bound story (there are many "under-hood" changes in the code helping to use

your storage more efficiently) -- well, we're definitively yet very far from "perfect" ;-)) -- but again, as usual -- "everything is relative".. And for the "relative" point for this article I'll

propose you to get a look on 2 different flash drives from Intel :

-

Intel SSD: SSDSC2BA800G4 (800GB)

-

Intel Optane SSD: P4800X Series (375GB)

yes, both devices are from Intel, so it's only Intel -vs- Intel (e.g. no blame for "competition" post ;-))

so far, if you'll look on their specs, both drives are looking pretty good for avg. IO-bound workloads, except that Optane drive is claimed to be x5 times faster in all categories (and it's

particularly impressive with its low IO latency -- see 1M IO-bound QPS with MySQL 8.0

-- so, can confirm at least for latency ;-)) -- however, will the x5 claim still be valid in different conditions / workloads ?

For my testing I'll use the same config as before (the same Skylake server as well),

and the key config points are :

-

InnoDB page size : 16K

-

trx_commit : 1 (flush REDO on every commit)

-

single flash drive (just EXT4 on top of single drive, no RAID, no LVM, etc..)

-

InnoDB Buffer Pool (BP) : 128G / 64G / 32G (will vary along with test scenarios)

(no double write, no binlog for the moment -- these ones will be covered later, patience ;-))..

So far, let's start first with so called "in-memory" Sysbench OLTP_RW workload :

-

test workload : Sysbench OLTP_RW uniform

-

concurrent users : 1, 2, 4, .. 1024

-

data volume : 8 tables of 10M rows each (~20GB)

-

InnoDB Buffer Pool (BP) : 128GB

-

so, all the data will be fully cached in BP, thus NO any IO reads (the reason to be so called "in-memory")

-

however, there will be definitively IO writes (as there are writes in OLTP_RW ;-))

-

and in our case, if all is going well, there will be only 2 types of writes :

-

1) dirty pages flushing

-

2) REDO log writes

-

the 1) is going in background, and as soon as your flushing is fast enough -- your performance is not impacted

-

the 2) are pure sequential writes only mixed with periodic fsync(), and generally as soon as you use a good flash drive for your REDO files -- you're fine ;-))

Ok, what about results ?

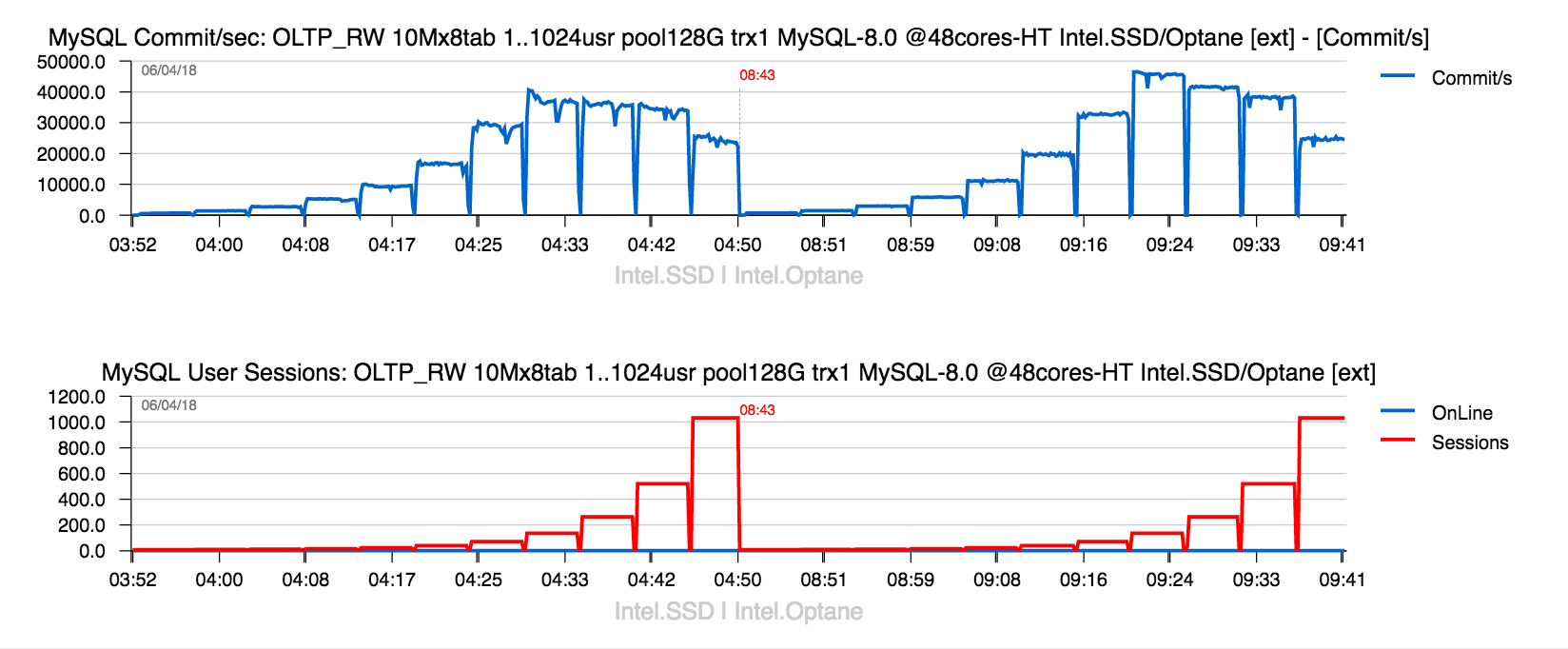

Sysbench OLTP_RW 10Mx8-tables BP = 128GB : Intel SSD -vs- Intel Optane

Comments :

-

on the left side of the graph you can see TPS level of MySQL 8.0 running on SSD, and on the right side -- the same but on Optane

-

concurrent users level is progressing from 1 to 2, 4, 8, .. 1024

-

and from TPS results you can see that up to 16 users there is nearly no TPS difference between SSD and Optane

-

then Optane is slightly better on 64usr level, and definitively better on higher load

-

but you don't see any sign of expected x5 difference, right ?

-

and if you'll stop here, you could just say "all this Optane story is b*shit !", Dimitri, WFT ?.. ;-))

Indeed, on such a small data volume and having all the active data set cached in BP you'll not see much difference with a faster storage.. -- however, what will happen if your data will grow ?..

The following are the results on the same OLTP_RW workload, but with 50M tables (x5 more data) :

-

so, 50Mx8-tables (~100GB)

-

and with 128GB BP the workload still remains "in-memory" (e.g. there will be no IO reads)

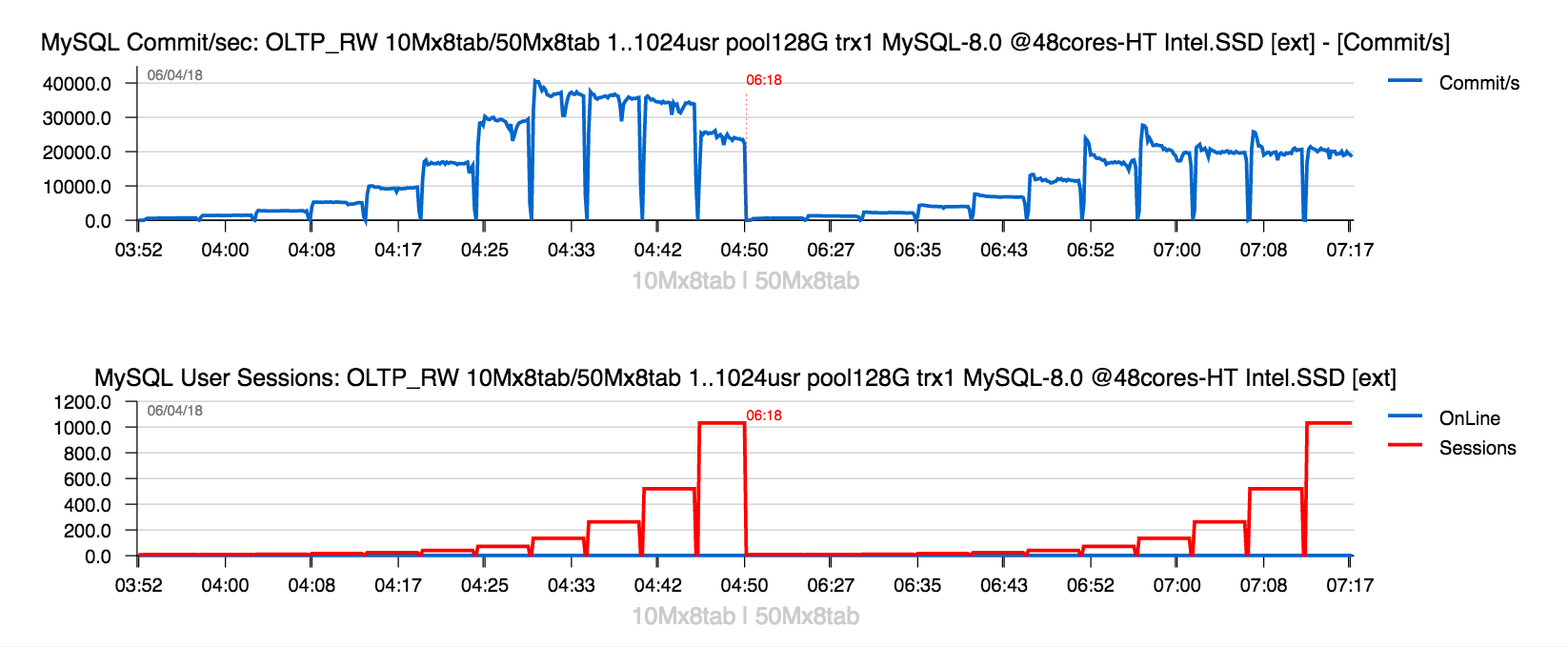

let's first get a look on how different will be TPS on Intel SSD when the table volume will be moved from 10M to 50M rows:

Sysbench OLTP_RW x8-tables BP = 128GB Intel SSD : 10M -vs- 50M rows in table

Comments :

-

as you can see, on SSD there is near x2 times drop on TPS with 50M -vs 10M.. -- why ??

-

many people are thinking TPS becomes lower because with a bigger data volume we have to flush more pages, so everything becomes slower due more heavy flushing..

-

and this claim here is only partially true.. ;-)) -- why ?

-

mind that the whole active data set is still cached by BP (so, no IO reads)

-

indeed, with x5 times bigger data volume we will have x5 times more pages in BP

-

and with uniform random writes with the same processing rate we'll have all the chances to modify x5 more pages per the same time interval..

-

which will result in having more dirty pages to flush, yes

-

however, again -- all the flushing is going in background, and as long as it's going fast enough to keep Checkpoint Age low, there will be no any impact on overall processing !

-

so, why then we're getting a lower performance here ?..

-

the main problem is that in the current case both DATA and REDO writes are going to the same device

-

and a given SSD is not able to keep in peace both write streams in parallel..

-

which is resulting in higher times in REDO writes + fsyncs

-

which is then directly impacting transaction times, and overall TPS becomes lower..

-

NOTE : if another dedicated SSD was used here for REDO only -- there would be no any difference in TPS in the current test on move from 10M to 50M rows volume in tables..

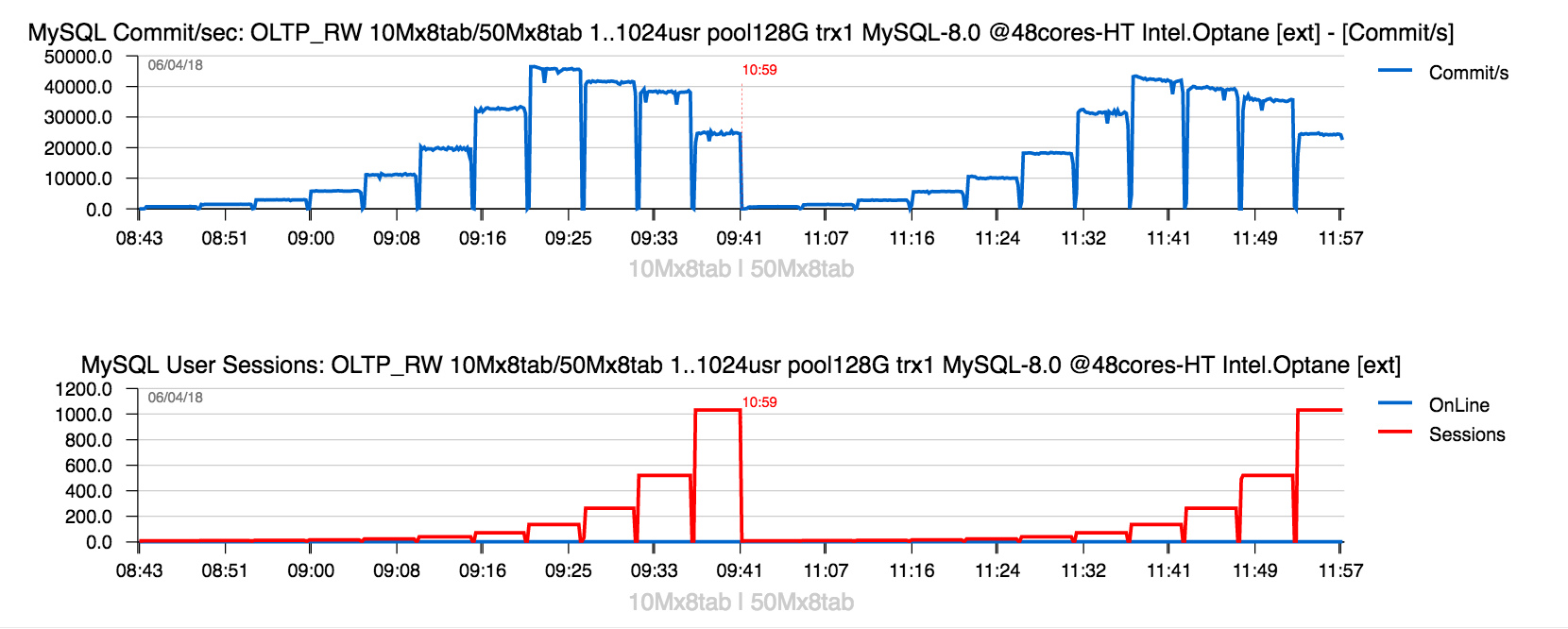

So far, and now how much TPS will be impacted if Optane SSD was used in the same conditions ?

Sysbench OLTP_RW x8-tables BP = 128GB Intel Optane SSD : 10M -vs- 50M rows in table

Comments :

-

here, as on the previous graph : result with 10Mx8tables on the left side, and 50Mx8tables on the right

-

small, but visible impact is present on Optane as well (specially on peak TPS)

-

however, it's far away from near x2 times worse, right ? ;-))

to see it more clear, let's compare SSD and Optane on the same graph now with 50Mx8tables volume test :

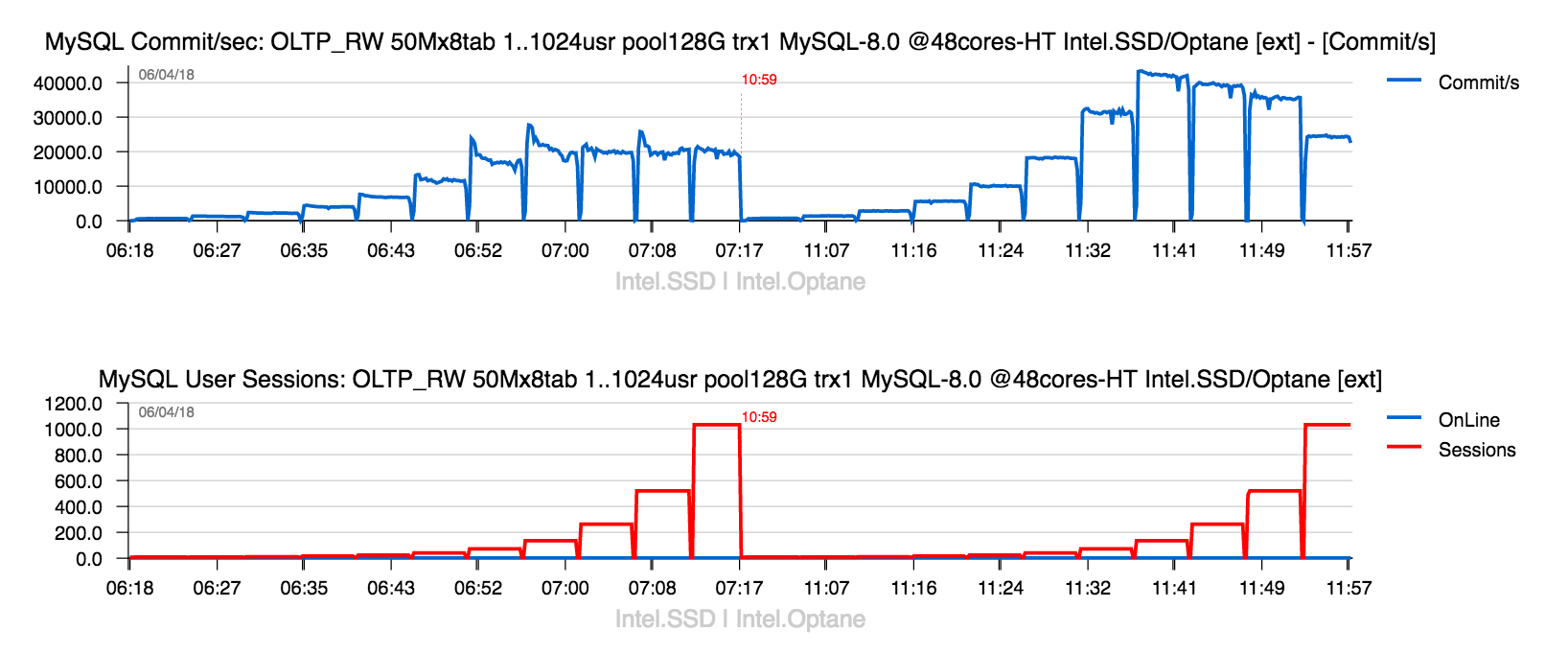

Sysbench OLTP_RW 50Mx8-tables BP = 128GB : Intel SSD -vs- Intel Optane

Comments :

-

SSD is on the left, and Optane is on the right of the graph

-

no comments ;-))

and now all 4 test cases together :

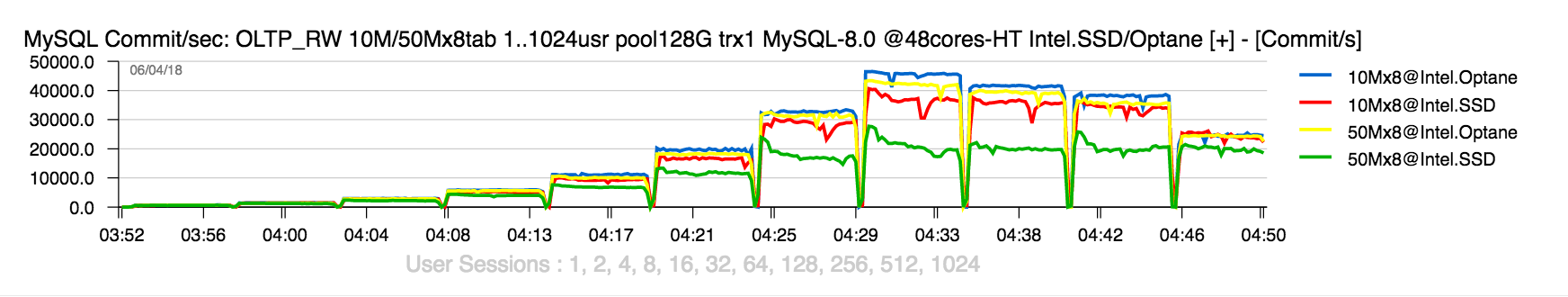

Sysbench OLTP_RW 10M/50Mx8-tables BP = 128GB : Intel SSD -vs- Intel Optane

As you can see, even if all your active data are fitting BP size, there may still be surprises.. -- and the 50Mx8tables data volume is a pretty good size to test workloads with a smaller BP (so,

fully IO-bound then).

Now, let's switch to more "user friendly" graphs and go just to pure test results comparing SSD -vs- Optane with :

-

BP 128GB :

-

all data matching BP (in-memory)

-

so no IO reads (makes no sense to test RO workloads as storage will be not involved)

-

comparing OLTP_RW and Update-NoKEY tests only

-

BP 64GB :

-

1/2 of data can be cached in BP only

-

so IO reads are here, IO writes yet more present

-

both RO and RW workload results are compared

-

BP 32GB :

-

less than 1/3 of data can be cached

-

much more intensive IO reads + yet more intensive IO writes

-

again, both RO and RW results are presented

Don't think you'll need any comments here as the numbers are speaking for themselves ;-))

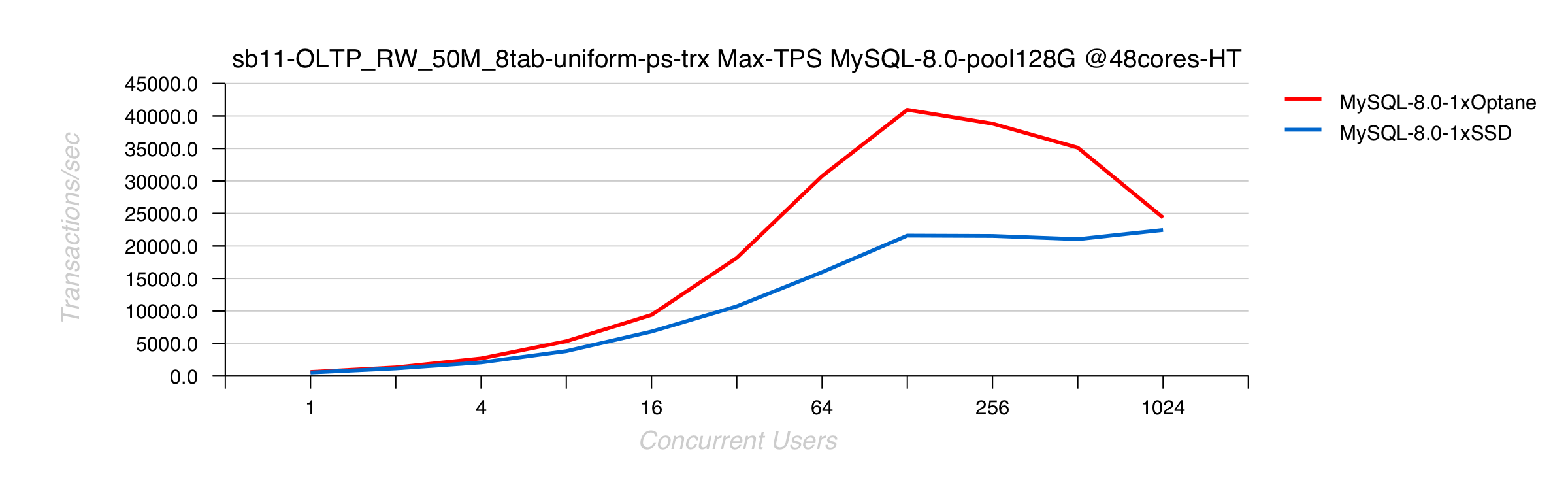

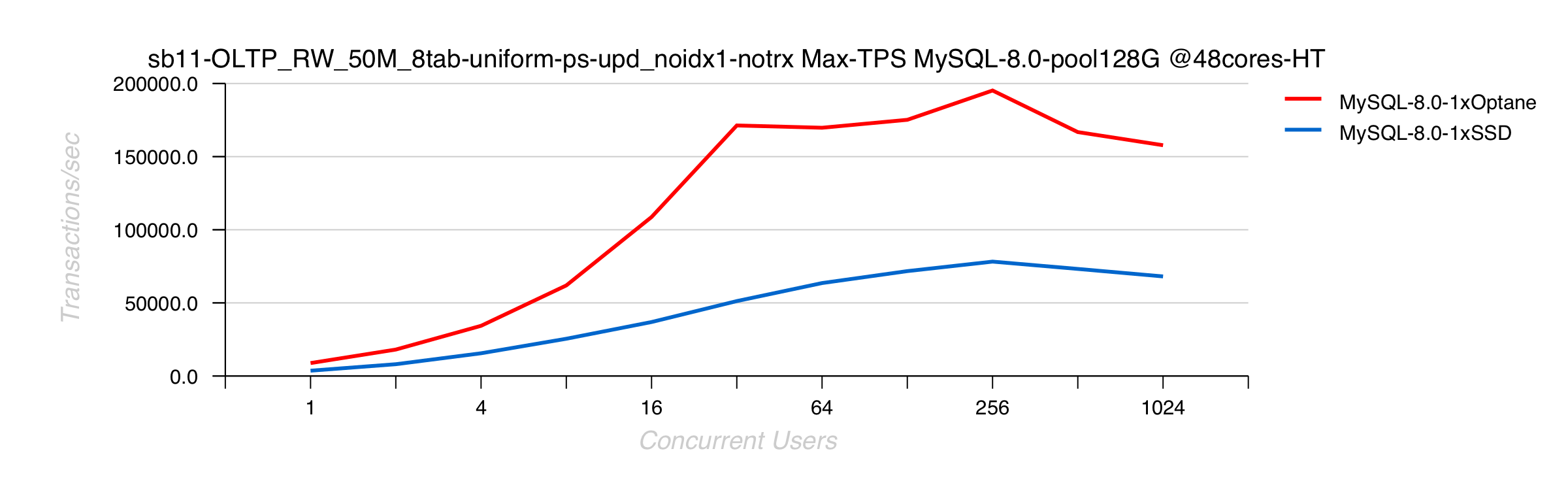

InnoDB Buffer Pool = 128GB

> OLTP_RW 50Mx8tables :

> Update-NoKEY 50Mx8tables :

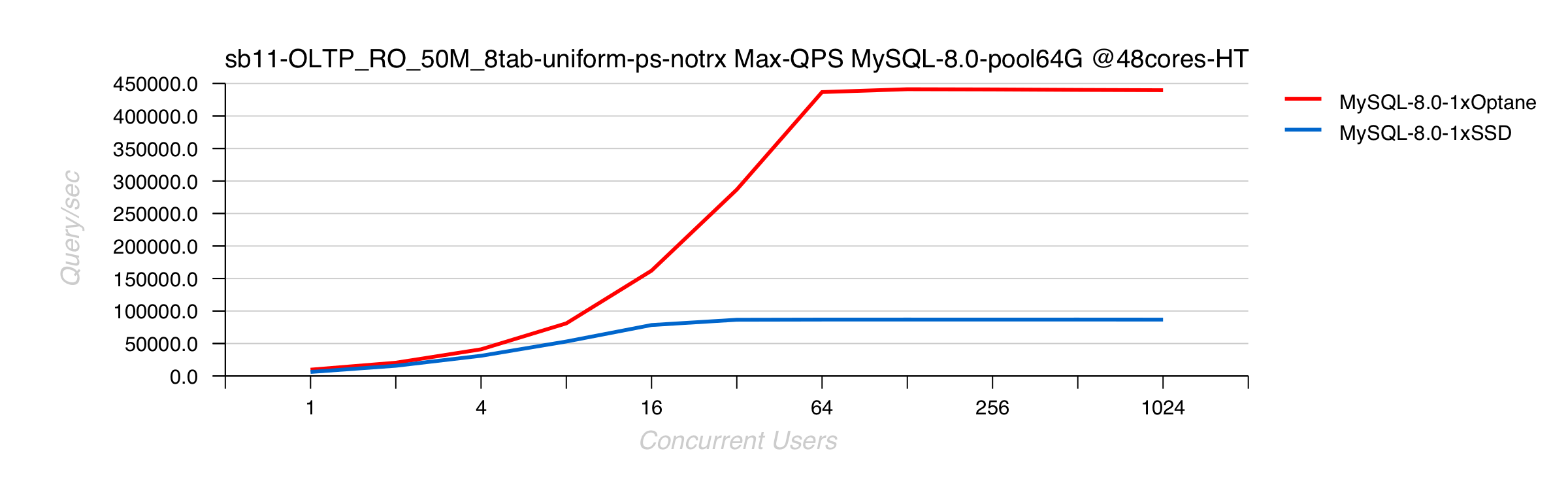

InnoDB Buffer Pool = 64GB

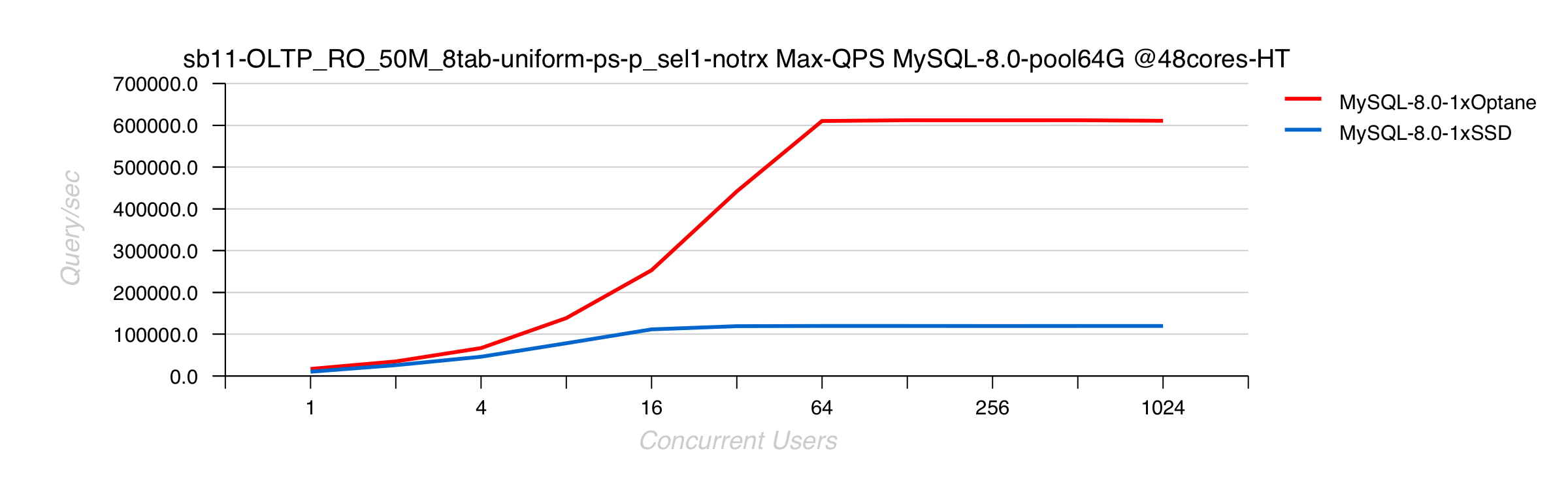

> OLTP_RO 50Mx8tables :

> OLTP_RO Point-Selects 50Mx8tables :

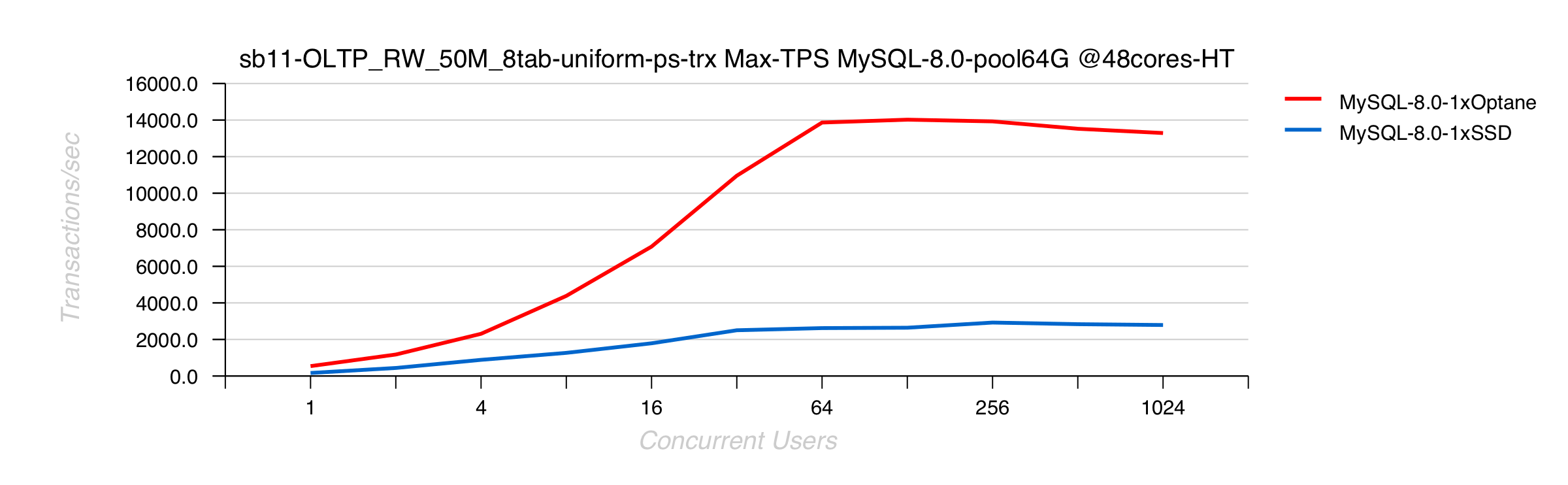

> OLTP_RW 50Mx8tables :

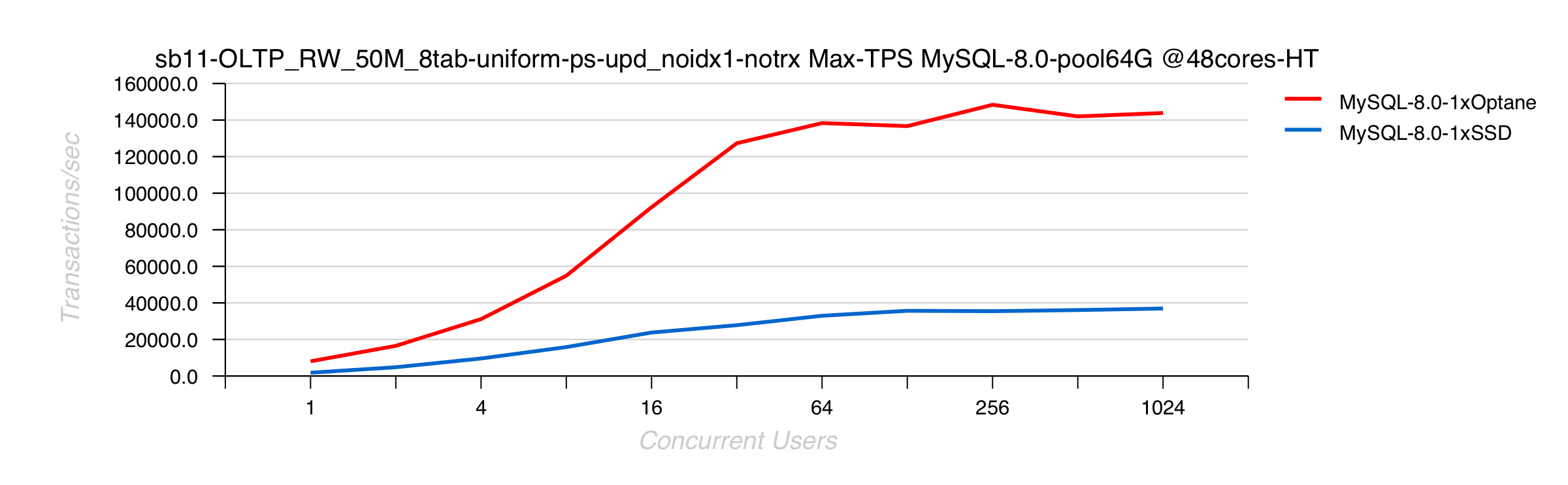

> Update-NoKEY 50Mx8tables :

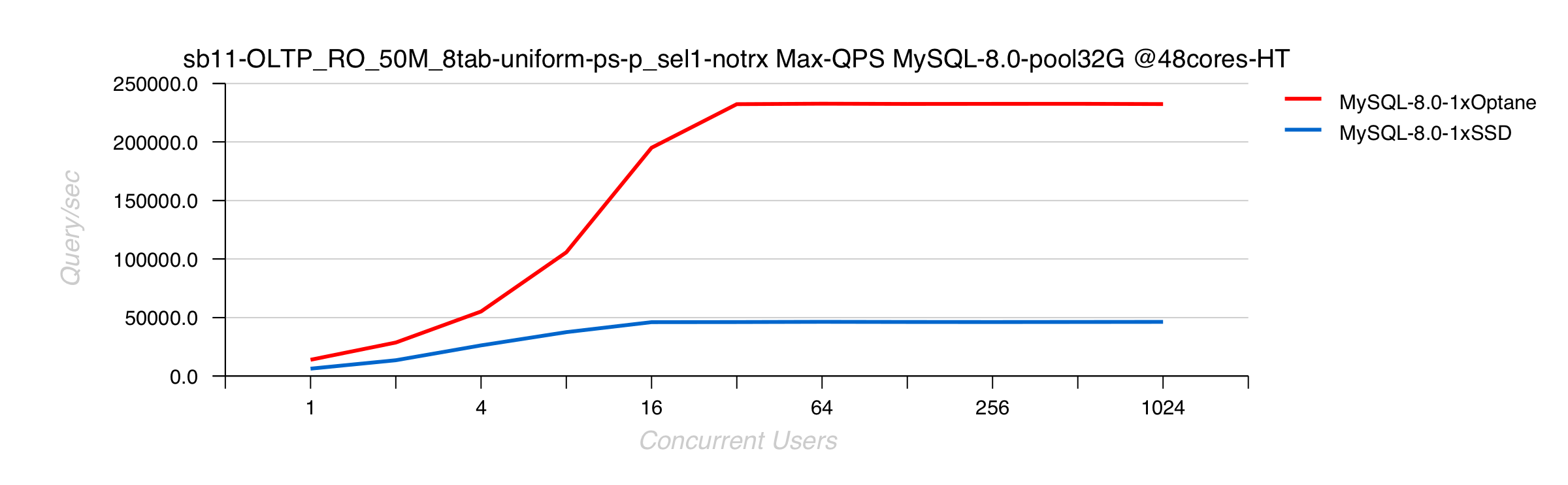

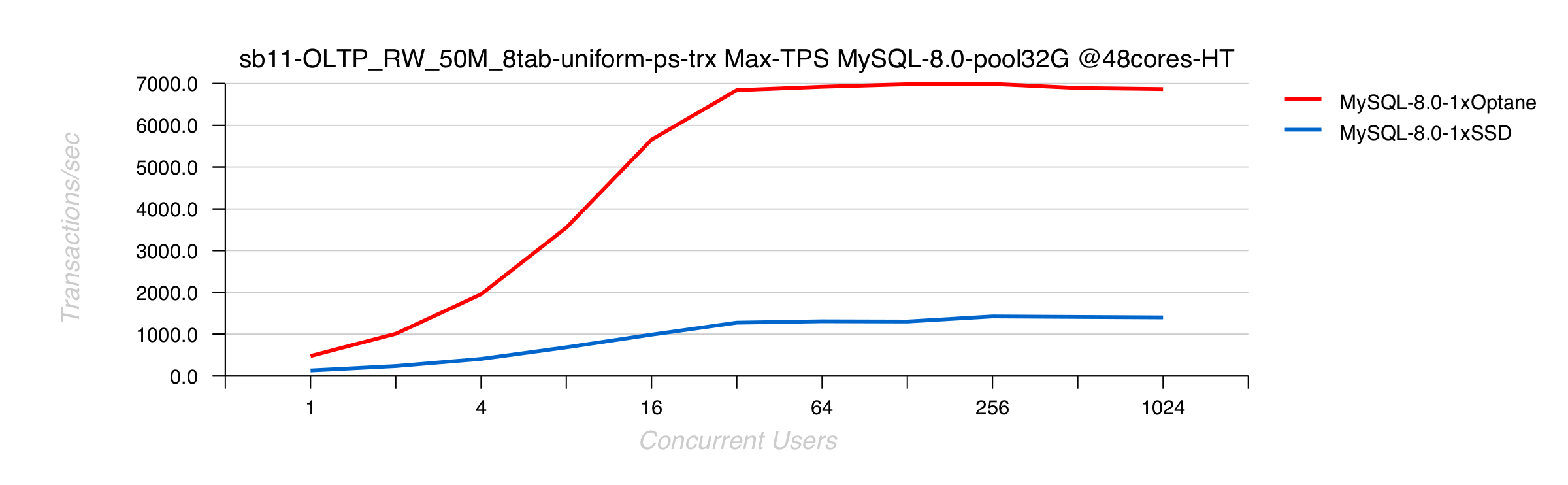

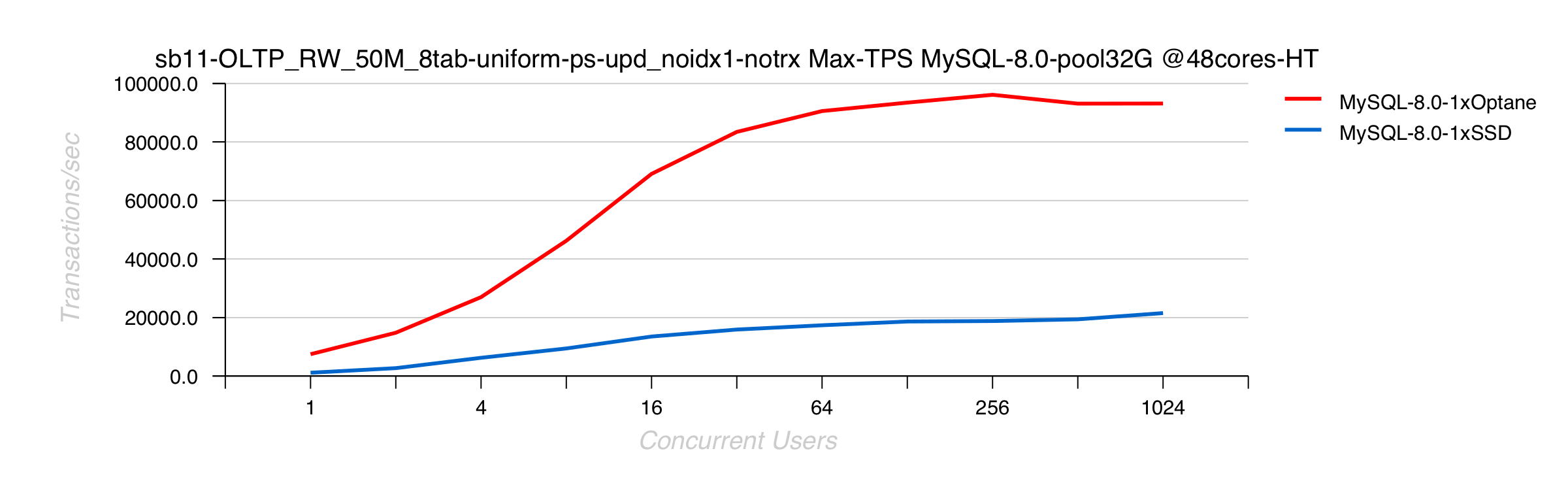

InnoDB Buffer Pool = 32GB

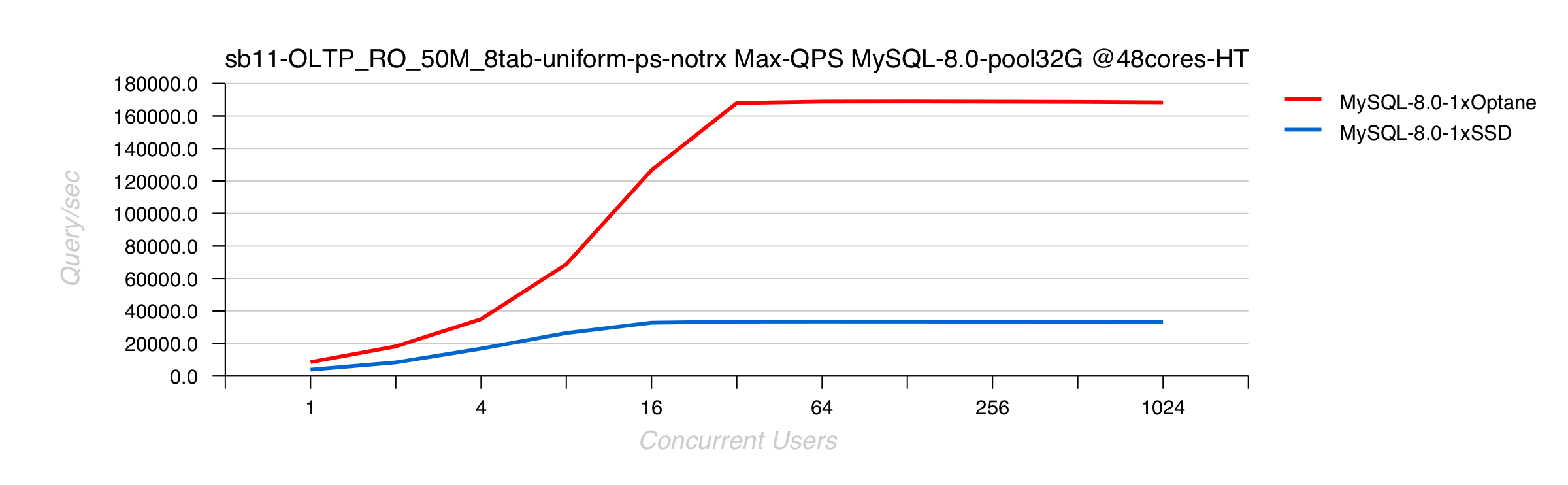

> OLTP_RO 50Mx8tables :

> OLTP_RO Point-Selects 50Mx8tables :

> OLTP_RW 50Mx8tables :

> Update-NoKEY 50Mx8tables :

INSTEAD OF SUMMARY

-

first of all, as you can see by yourself, Sysbench uniform workloads are very good for generic storage evaluation for MySQL -- less data remains cached in BP, and more aggressive IO activity will be generated !

-

and with MySQL 8.0 in above tests we're easily hitting the max. IO throughput of both devices mentioned in their respective specs. ! -- and on heavy IO-bound workloads Optane is proving its claimed mostly x5 times difference -vs- SSD !

-

indeed, no doubt -- Intel Optane SSD is a true killer for IO-bound OLTP !

-

and all IO related improvement made in MySQL 8.0 code are really coming on time ! KUDOS to MySQL Devs & Community !! ;-))

-

NOTE : amazing, but seems like it's a time for us to consider not only CPU priority for each running thread, but also its I/O priority !! -- definitively REDO writes should always go on the highest possible IO prio, and with a new REDO log design in MySQL 8.0 it should be much more easier now to achieve ;-))

Thank you for using MySQL ! -- stay tuned ;-))

Rgds,

-Dimitri