Created: 2008-12-18

Last modified: 2009-01-29

MySQL Performance: PERCONA

by Dimitri

SSC Team, 2008

Sun Microsystems Inc.

|

Ces informations sont données à titre indicatif et n'engagent pas Sun Microsystems.

Table of contents

Customer Name(s): MySQL

Contact Information:

- dimitrik@sun.com

Dates: Dec.2008 - Jan.2009

Keywords: MySQL, InnoDB, Percona, XtraDB, db_STRESS

Server(s):

- M5000 8CPU SPARC64-VIII 2400Mhz quad-core bi-thread, 64GB RAM

- M8000 16CPU SPARC64-VI 2200Mhz bi-core bi-thread, 256GB RAM

Storage:

- ST6140 2x LUNs (RAID1 1TB each), 2Gb Fiber Channel connection

System:

- Solaris 10 Update 6

- UFS

Application(s):

- MySQL

- db_STRESS

Overview: During the last performance study I was very curious to understand what was wrong with MySQL Percona patched build. Due limited time I did not get a 64bit version compiled and supposed it was the main problem... Well, now I have a powerful enough M5000 server in my hands and will try to see more closely what's happens.

Goal(s): Reach the best possible performance level with MySQL Percona build

Result(s): All details are in report :-))

Dec.2008

db_STRESS test scenarios will be still the same (for details get a look at http://dimitrik.free.fr/db_STRESS_BMK_2008.html#note_5220)

On the same time while I've prepared my test platform, there was MySQL Percona build-10 ready for download!

Percona build has a list of additional options (a very good presentation you may find here: http://www.percona.com/files/presentations/percona-patches-opensql-2008.pdf)

I've tried to see the difference by add the following ones:

# Percona

innodb_io_capacity = 10000

innodb_adaptive_checkpoint = 1

innodb_write_io_threads = 16

innodb_read_io_threads = 16

On the same time I've prepared these tests, Persona announced their "XtraDB" engine: http://www.mysqlperformanceblog.com/2008/12/16/announcing-percona-xtradb-storage-engine-a-drop-in-replacement-for-standard-innodb/

Should I say I was curious to see it in action? :-))

I've downloaded the sources and folowing REDME instructions compiled it within MySQL v.5.1.30 as part of mysqld.

As you see the config file just has additional options at the end (same as Percona build before):

[mysqld]

max_connections=2000

key_buffer_size=200M

low_priority_updates=1

sort_buffer_size = 2097152

table_open_cache = 8000

# files

innodb_file_per_table

innodb_log_file_size=500M

# buffers

innodb_buffer_pool_size=16000M

innodb_additional_mem_pool_size=20M

innodb_log_buffer_size=8M

# tune

innodb_checksums=0

innodb_doublewrite=0

innodb_support_xa=0

innodb_thread_concurrency = 16

innodb_flush_log_at_trx_commit=0

innodb_flush_method= O_DIRECT

innodb_max_dirty_pages_pct=15

# Percona

innodb_io_capacity = 10000

innodb_adaptive_checkpoint = 1

innodb_write_io_threads = 16

innodb_read_io_threads = 16

|

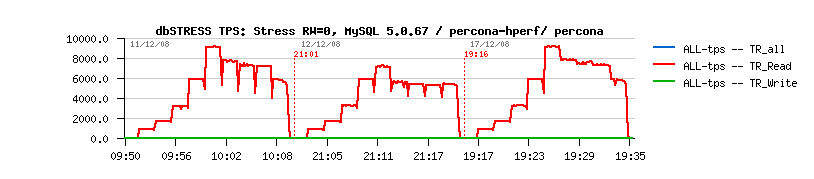

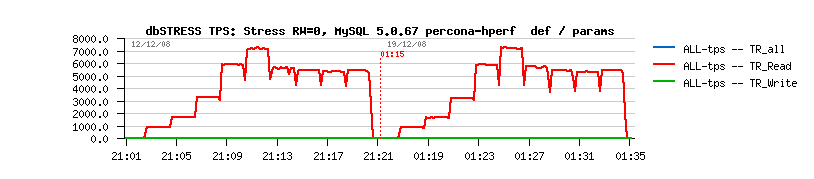

Read-Only Stress Test: 5.1.30 Default / XtraDB

|

|

|

Several points here:

- More than 20% performance gain for XtraDB comparing to official 5.1.30! Wow!

- Huge performance degradation for official 5.1.30 comparing to the 5.0.67...

- And probably it explains why XtraDB showing also here lower numbers comparing to 5.0.67 Percona build-10...

|

|

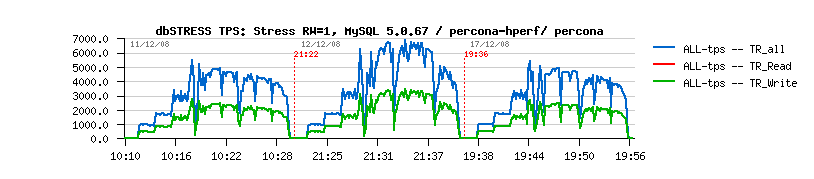

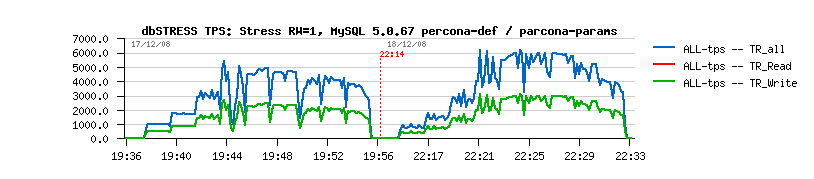

Read+Write Stress Test: 5.1.30 Default / XtraDB

|

|

|

Several points here:

- More than 25% performance gain for official 5.1.30 comparing to XtraDB!

- Slightly better performance level of official 5.1.30 comparing to the 5.0.67

- Huge performance degradation for XtraDB comparing to the 5.0.67 Percona build-10...

- Any idea?...

|

|

Detailed Stress Test STATS: 5.1.30 and XtraDB

|

Detailed Stress Test STATS: 5.1.30 and XtraDB

|

Detailed 1600 Users Test STATS: 5.1.30 and XtraDB

|

Detailed 1600 Users Test STATS: 5.1.30 and XtraDB

|

Investigating on Read+Write preformance...

|

What else I've tried:

- Completely removing Percona-specific parameters: same problem...

- Change innodb_max_dirty_pages_pct=90 : same..

- Use forcedirectio mount option in way to help concurrent writes (if any) : same..

- Install 5.1.30 with InnoDB as plugin (probably the problem is within plugin management?): plugin performs as well as standard 5.1.30...

- Finally out of any idea, I configured both standard and XtraDB "as for production", means innodb_flush_log_at_trx_commit=1, and see what happens... I would say now both engines performed on the same speed, and on the same time XtraDB presents much more favorable - just because it's very stable!

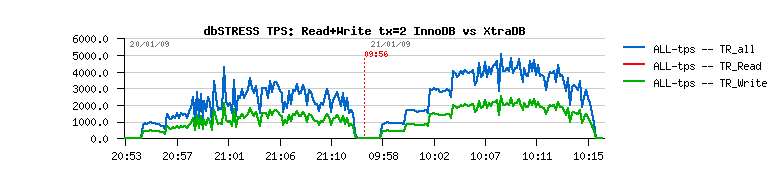

Stress RW=1 and innodb_flush_log_at_trx_commit=1: InnoDB vs XtraDB

Detailed Stress Test STATS with ~production~ config

Detailed Stress Test STATS on production config

Current points:

- Testing is not finished yet :-)

- Percona build and XtraDB are looking very promising!

- Full compatibility with InnoDB! (easy migration)

- More reach in internals info! (better for developer)

- More stable processing! (better in production)

- Still some problems, but let's call it "challenge" :-)

Stay tuned! More to come! :-)

Jan.2009

Discussing with Percona team, we were able to replay the same tests on their Linux server (RedHat, 8cores Xeon). And I was really surprised to hear they obtaining the same performance level on XtraDB as on InnoDB-plugin... Comparing our plateforms, the only significant difference I've found (on my point of view) is a number of CPU/cores (I don't think there is some significant difference between Linux and Solaris for MySQL, as well we're not comparing server results, but performance gap between XtraDB and InnoDB)... Well, to be completely honest I was surprised anyway to see they got on Xeon 6.000 TPS during Read+Write workload comparing to my 4.000 on SPARC64 :-))

Anyway, I supposed the key point here is the number of cores - all applications having scalability problems due locks will have their "critical limit" after which performance level will only decrease... And on my opinion they should try the same test on 16 core server (but they don't have it)... And finally the only way to verify my suppositions is to replay my tests on my platform but with 8 cores too :-))

And here are my results...

|

Stress RW=1 with XtraDB: 16vcpu vs 8vcpu

|

|

|

Observations:

- TPS level is 50% higher on 8 cores comparing to 16

- Same performance on SPARC64 as on Xeon :-)

|

|

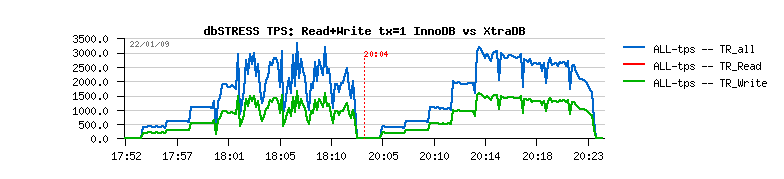

Stress RW=1 on 8vcpu: XtraDB vs InnoDB plugin (using the same my.conf and XtraDB

|

|

|

Observations:

- Same performance on default my.conf!

- Performance is slightly better with my.conf adapted to XtraDB

- No degradation with "adapted" (optimal) XtraDB my.conf (and it's what is observed on Linux Xeon box...)

- InnoDB plugin performs also better on 8cores vs 16

|

|

Investigating InnoDB internals...

|

In parallel I've finally finished to write a wrapper analyzing and reformatting InnoDB status output! Analyzing data from InnoDB internals during my tests I understood I still did not understand everything until no :-))

So, adventure continues... :-))

Here is an example of InnoDB_Stats script output (script is looping on "SHOW INNODB STATUS" and wrapping its output in dynamic format of 2 columns per line: variable name and its value; easy to scan as well easy to extend with other value with time).

$ InnoDB_Stats.sh 5

STAT-name STAT-value

Wait-Array-reservations 3

Wait-Array-reservations/sec 0.000000

Wait-Array-signals 3

Wait-Array-signals/sec 0.000000

Mutex-spin-waits 0

Mutex-spin-waits/sec 0.000000

Mutex-rounds 1

Mutex-rounds/sec 0.000000

Mutex-OS-waits 0

Mutex-OS-waits/sec 0.000000

RW-shared-spins 6

RW-shared-spins/sec 0.000000

RW-shared-OS-waits 3

RW-shared-OS-waits/sec 0.000000

RW-excl-spins 0

RW-excl-spins/sec 0.000000

RW-excl-OS-waits 0

RW-excl-OS-waits/sec 0.000000

OS-file-reads 482

OS-file-reads/sec 0.000000

OS-file-writes 6

OS-file-writes/sec 0.000000

OS-fsyncs 6

OS-fsyncs/sec 0.000000

Insert-Buffer-size 1

Insert-Buffer-free-len 0

Insert-Buffer-seg-size 2

Insert-Buffer-inserts 0

Insert-Buffer-merged-recs 0

Insert-Buffer-merges 0

Hash-Index-size 18479729

Hash-Index-buffers 0

Log-age 0.000000

Checkpoint-age 0.000000

Log-age-MB 0.000000

Checkpoint-age-MB 0.000000

Log-writes 10

Log-writes/sec 0.000000

Log-writes-MB 385281.343750

Log-writes-MB/sec 0.000000

Total-memory-MB 8649

Additional-pool-MB 6

Buffer-pool-size 512000

Free-buffers 511539

Database-pages 461

Modified-db-pages 0

Pages-read 462

Pages-read/sec 0.000000

Pages-create 0

Pages-create/sec 0.000000

Pages-write 1

Pages-write/sec 0.000000

Buffer-pool-hit 85

LRU-len 461

LRU-unzip-len 0

Queries-inside 0

Queries-in-queue 0

Rows-insert 0

Rows-insert/sec 0.000000

Rows-update 0

Rows-update/sec 0.000000

Rows-delete 0

Rows-delete/sec 0.000000

Rows-read 360

Rows-read/sec 0.000000

...

In few minutes I've described it as a new Add-On stat command within dim_STAT and was able to monitor and graph all these values in parallel wiht TPS, CPU usage and other...

|

First Observations with Wide Open Eyes :-)

|

To better understand InnoDB internals, I've setup a "stable load" test scenario: 128 users (sessions) are stressing database during one hour on mixed read+write load (RW=1) and zero wait time.

For my big surprise:

- Even if I set innodb_max_dirty_pages_pct=15, number dirty pages is growing up and outpassing 15% value!

- Checkpoint age is growing up and staying pretty the same during all one hour activity...

- Once workload is finished, database is still spending more then 30(!) minutes to completely flush all dirty pages from buffer pool!

After various other experiences based on the same workload I saw a strong dependency of checkpoint age and dirty pages level on InnoDB redo log size: wide space log will leave more room for dirty pages and push more far checkpoint age.. On the same time, I cannot say performance is much more better with a huge log size, specially when you evaluating a danger of crash and spending a long time on recovery...

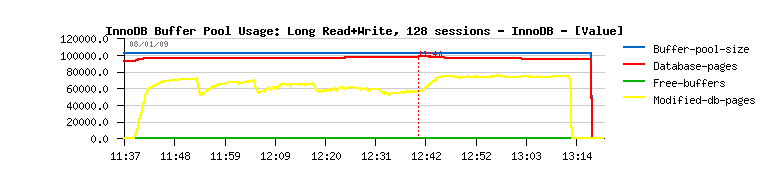

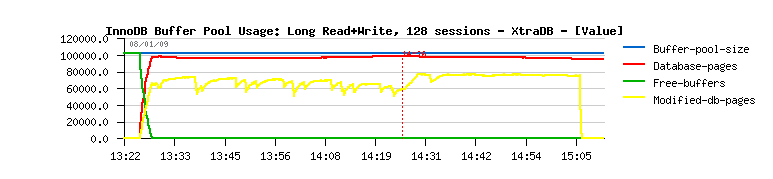

Following graphs are showing a buffer pool usage during one hour workload on InnoDB and XtraDB engines - both after one hour activity (red vertical line points the end) then spent more than 30 minutes to "do something" and finally flush all dirty pages (probably a bug?)...

Dirty Pages and InnoDB

Dirty Pages and XtraDB

Next step was to find the most "optimal" log size in way to keep the same performance level and still having lower number of dirty pages + faster flushing...

I've started from some very low values (4MB, 8MB, ...) and then the most acceptable results showed 128MB log size: stable ~20% level of dirty pages in pool and fast enough checkpoint flushing.

NOTE: Checkpoint design is quite interesting in InnoDB - it's not a "stop-all & flush" action as we may find as most common solution from other database vendors (well, the "stop-all" effect is seen in some cases as a secondary effect of checkpoint flushing (writing too much it blocks any other database activity)).

InnoDB will write few on checkpoint if database activity is high, and will try to write more if database activity is low. And the only one event will really force writing - when you don't have space anymore in your redo logs :-) - part of most old dirty pages should be flushed to free log space and shift checkpoint position more closely to the latest logged transaction. Broadly speaking, if your database activity is quite high you'll mostly see your checkpoint age near equal to the total redo space.

With my 128MB log size and 2 redo files my checkpoint age is usually staying around 220-240MB. Means if my database is crashing at this point, I have to keep in mind a recovery time needed to replay 240MB of changes...

As crash normally is not happening every hour, we may still give more priority for performance :-) but as usual, it's just a question of priorities :-) - 128MB I've found as the most optimal: not too big to get hours of recovery, and not too small to slow down database performance.

On the same time it may still happen to get a blocking checkpoint flushing with InnoDB - it happens when you have in buffer pool too much pages modified nearly in the same time (their modif.timestamp will be near the same) - Adaptive Checkpoint patch is a very interesting solution to solve such kind of situation!

Here are my final MySQL configuration files I used in following tests.

Some points:

- My M5000 was gone and I moved all data to the old but still powerfull M8000 server (16CPU bi-core), and on the same time I'm not interesting on the highest TPS numbers, but the TPS ratio between InnoDB plugin and XtraDB! :-)

- All tests are executed within 8cores processor-set for MySQL (I've also tried 16, but the result is much worse)

# psrset -c 0 2 8 10 16 18 ...

created processor set 2

# psrset -e 2 /apps/mysql5/mysql.server start

...

- I've splitted data and redo logs into 2 different and independent storage LUNs (RAID1) to see I/O traffic separately (that's why innodb_log_group_home_dir value is different from default)

- Until it's not specified explicitly, innodb_flush_log_at_trx_commit=2 was used (tx=2), and only in "production" final tests =1 was used (tx=1)

Observations:

- As you may see from the following graphs, XtraDB performed much more better comparing to the official InnoDB plugin - seems to me a more "realistic" configuration do the difference, as well newer XtraDB version was shipped during this time (but the gain is the same with an old XtraDB too :-))

- Analyzing buffer pool usage, I realized the most of data were read from the database cash due "Anti-Dead-Lock" feature in db_STRESS: using this option will avoid concurrency access to the same OBJECTs (see db schema) from the different users (each user is working with it's own list of OBJECTs) - that's why you'll see also a "True Random" (TrueRND) tests with disabled Anti-Deadlock feature. Theses tests are quite curious and generated other kind of internal locking, but again XtraDB performed better...

- Even on true random workload "Buffer-Hit" value from InnoDB showed 100% hit - however there was a lot of reading of data from disk all of the time... Probably it's just not giving an accurate number? don't know..

- Graphs with Log writes/sec on tx=2 show you a real speed expected from your disk storage if once you'll move to innodb_flush_log_at_trx_commit=1 (tx=1) - quite interesting capacity evaluation/estimation :-)

- Quite interesting to observe and understand a dirty page flushing in live mode from your running database, as well all other kind of stats :-)

Read-Only Stress Test: InnoDB-plugin vs XtraDB

Read+Write (tx=2) Stress Test: InnoDB-plugin vs XtraDB

Read+Write (tx=1) Stress Test: InnoDB-plugin vs XtraDB

Final Test STATS

Finally:

- XtraDB is outperforming InnoDB in my tests!

- More to come: new performance improvements were introduced last days within XtraDB and next releases should scale even better!

- Stay tuned - world is constantly moving! :-))