« MySQL Performance: Percona Live & Few Perf Stories.. | Main | MySQL Performance: Why Performance Schema Overhead?.. »

Monday, 08 July, 2013

MySQL Performance: Analyzing Benchmarks, part 5: TRX list again

It was a long time that I wanted to dig more the solution proposed by

Percona to improve "TRX list" (transactions list) code -- the initial

story by Alexey is starting here,

then my following analyze is here,

and then another test results from Percona are here.

The initial discussion was more related to the READ-ONLY TRANSACTIONS

introduced within MySQL 5.6 (and it was introduced exactly to get a rid

of TRX mutex on read-only requests where transactions are not required

at all). But in the last tests Alexey involved a very good question:

what will be an impact on performance when READ-ONLY TRANSACTIONS

activity is meeting writes from other user sessions?.. - and this is

waked up my curiosity ;-)

Well, I'll try to put things in order,

while unfortunately my analyze cannot be not complete here, as I'm not

allowed to publish results from the MySQL 5.7 latest code until it

became public, so will complete the full puzzle later then ;-)

So,

first of all I'll cover points exchanged within various discussions and

blog comments. One of them was a tested database volume -- from my

observations until now as soon as workload is read-only, and all used

data set in tests is seating in Buffer Pool, and workload queries are

not dependent on the data volume - then there will be near no difference

from the benchmark results on a bigger or a smaller volume.. And

Sysbench POINT-SELECT is a very good example here (as every query is

just reading a single row by its PK). So, on my previous tests I've used

x8 tables of 1M rows. Now I'll replay the same, but with 10M rows (so,

x10 time bigger volume, and filling pretty well my 32GB BP).

To

get the full story shorter, I'll combine it with scalability results.

Don't know why on Percona servers the results on 5.6 and on 5.5 are

looking very similar, but it's pretty different on my 32cores server.

I'm supposing that my server is probably more sensible to any CPU caches

syncs between sockets, but in any case this server is very good for

performance investigations - because once the code is working well on

this host, I'm pretty sure then it'll work very well on any other server

too ;-)

So far, the following graphs are representing the test

results from:

- Workload: Sysbench POINT-SELECT test using x8 tables of 10M rows each, query without transactions

- Concurrent users: 16, 32 ... 1024

- CPU affinity: MySQL Server is running on 16cores, then 16cores-HT, then 32cores, then 32cores-HT (via taskset)

-

Tuning: nothing special, except:

- BP size: 32GB

- BP instances: 32

- innodb_spin_wait_delay=12 (otherwise hitting a regression on Percona-5.5)

Let's get a look on Percona-5.5 results first:

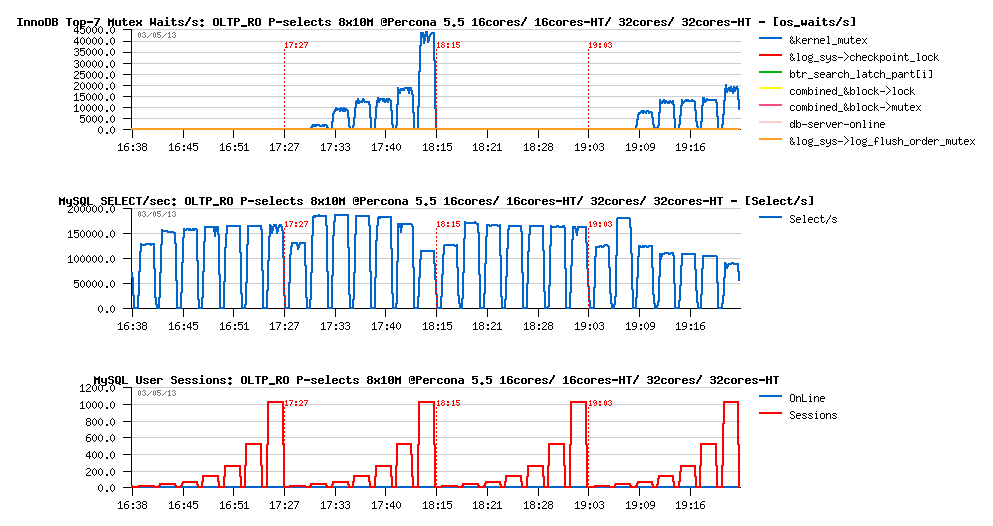

OLTP_RO Point-SELECTs 8-tables x10M @Percona 5.5, 16cores/ 16cores-HT/ 32cores/ 32cores-HT :

Observations :

- The best performance for Percona-5.5 is reached on 16cores-HT configuration

- the max result is 180K QPS

- main bottleneck is on the kernel_mutex contention - interesting that this contention is most seen on configs with HT enabled..

OLTP_RO Point-SELECTs 8-tables x10M @MySQL 5.6, 16cores/ 16cores-HT/ 32cores/ 32cores-HT :

Observations :

- The best performance for MySQL 5.6 is also reached on 16cores-HT config

- the max result is 275K QPS

- performance is lower on 32cores, and then hitting again the trx_sys mutex contention on 32cores-HT..

- so, even if READ-ONLY TRANSACTIONS feature is working well, it hits its limits anyway, and the code needs yet to be improved..

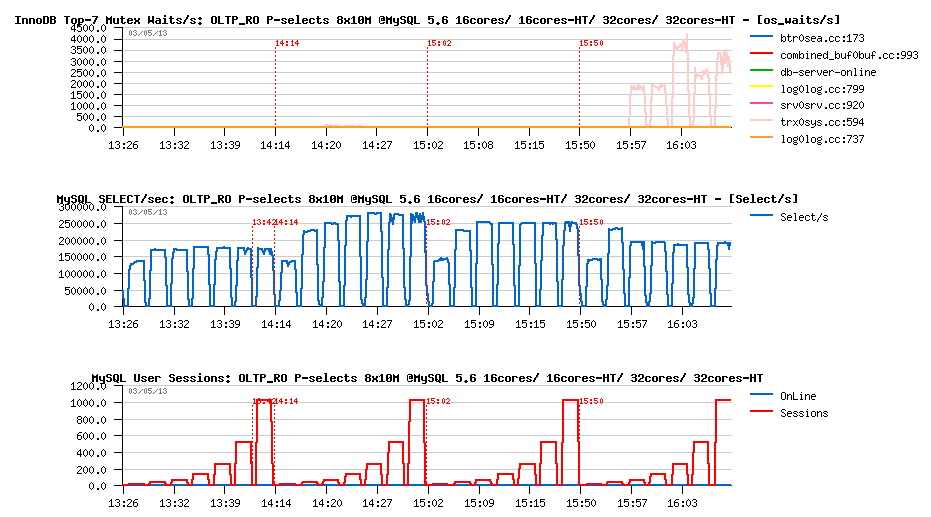

And now to compare apples-to-apples @16cores-HT on the same graph (MySQL-5.6 on the left, Percona-5.5 on the right) :

OLTP_RO Point-SELECTs 8-tables x10M @16cores-HT, MySQL 5.6 -vs- Percona 5.5 :

Observations :

- so far, here you can see a clear benefit of removed kernel_mutex contention in MySQL 5.6

- and benefit of the READ-ONLY TRANSACTIONS introduced in MySQL 5.6 as well

And READ-ONLY TRANSACTIONS were specially introduced to avoid the following situation where the same read-only POINT-SELECT queries are executed within transaction statements:

OLTP_RO Point-SELECTs-TRX 8-tables x10M @16cores-HT, MySQL 5.6 -vs- Percona 5.5 :

Observations :

- so, if transactions are used for RO statements, we're loosing all the benefit of RO transactions feature in MySQL 5.6

- 5.6 is getting a huge regression here (one more reason to use a new feature ;-))

- and since 128 concurrent user sessions the QPS level may only decrease (with increase of trx_sys mutex contention)..

- the "TRX list" patch from Percona is resisting better for Percona-5.5 QPS, while also hitting a regression.. (but not as big as 5.6, and keep better on a high concurrency level)..

- however, seeing regression on Percona-5.5 as well, making me thing that the story is not only about trx_sys contention here..

Well, the next 5.7 code is already auto-discovering READ-ONLY TRANSACTIONS even transaction statements are used, so even in this "non optimal" case there will be no regression (except that CPU time is wasted by transaction statement itself) -- so the question is then only to back port it to 5.6 code or not.. But Alexey is rising a more interesting question: what will be impact on RO transactions if in parallel there will be running a RW workload?.. Because the RO transaction feature is working well just because the "TRX list" is remaining empty.. - what will change for RO users when there will be some active transactions?..

I was unable to reproduce the test used by Alexey due some Sysbench issues (getting deadlocks or internal errors), so I've replaced it by a less artificial and more speaking to me:

- I'm starting a constant OLTP_RO workload with N concurrent users doing the same POINT-SELECT queries on x8 tables

- this OLTP_RO workload is using RO transactions

- and then, in parallel, I'm starting another Sysbench workload doing only OLTP_RW-Updates

- this Updates workload is starting with M concurrent users, where M is 4, 8, 16, 32, 64, 128

- so I'll always have M active transactions within InnoDB + observe the impact of both workloads on each one ;-)

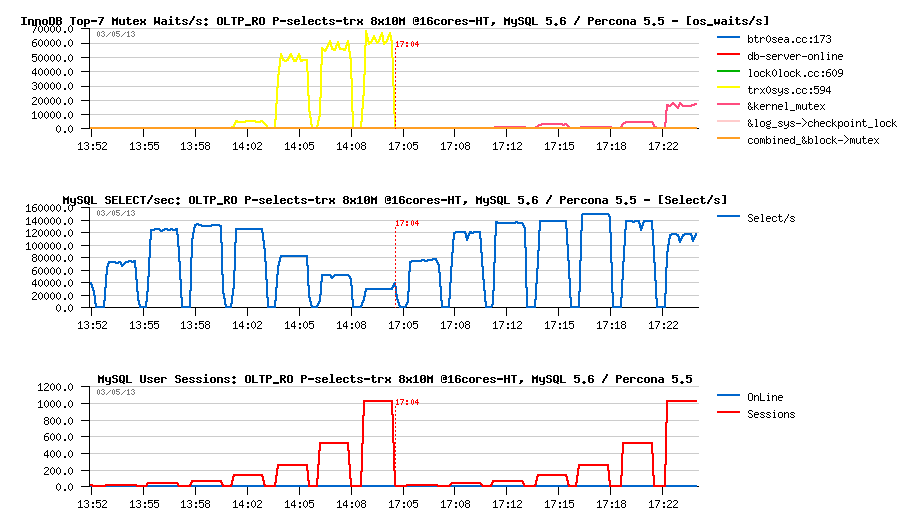

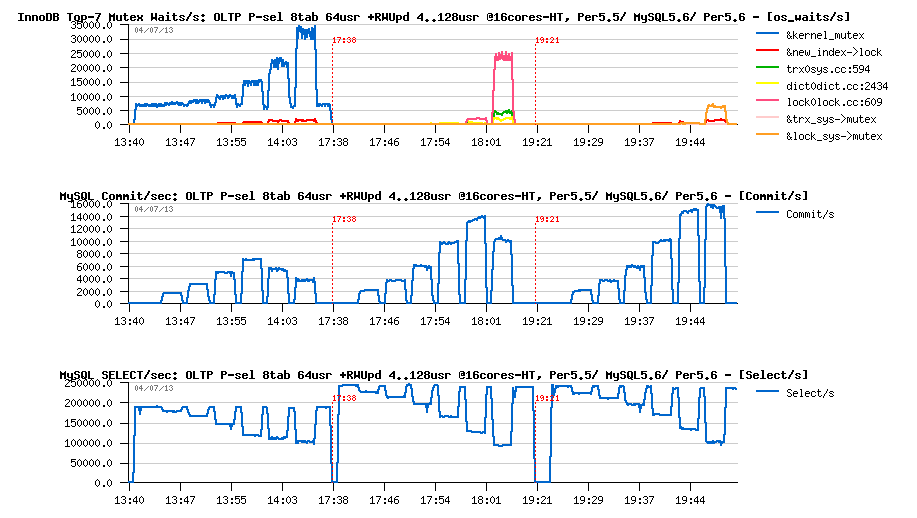

Let's start with 64 concurrent users in OLTP_RO (Percona-5.5 is on the left, MySQL-5.6 on the right).

OLTP_RO 64usr + RW-Updates 4..128usr @16-cores-HT, Percona-5.5 -vs- MySQL 5.6 :

Observations :

- Commit/sec graph is reflecting the RW performance during the test

- While Select/sec graph is showing us the RO performance

- So, initially both engines are doing only RO workload and starting from their top levels on 64 users: 185K QPS for Percona-5.5, and 245K QPS for MySQL-5.6..

- then the first 4 RW users arriving, then more and more up to 128..

- as you can see, regression is impacting both engines..

- and at the end of the test both are regressing on RO up to 100K QPS..

- however, on the same time MySQL-5.6 is doing a way higher TPS on RW workload, and the performance result in fact is limited by lock_sys mutex contention, rather trx_sys... - so, it's not as simple ;-)

- and MySQL-5.6 is still looking better here..

However, things are changed when the same test is executed on 256 concurrent users on OLTP_RO (more concurrent users are creating a more hot contention):

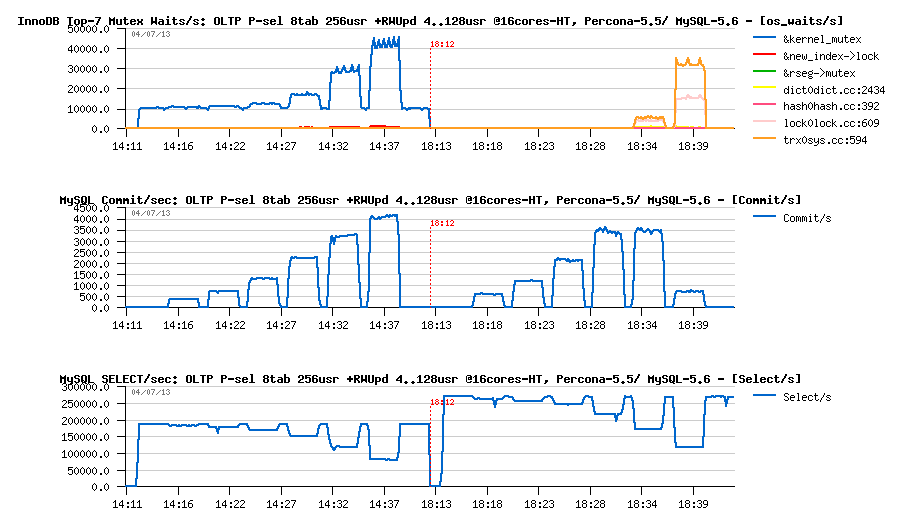

OLTP_RO 256usr + RW-Updates 4..128usr @16-cores-HT, Percona-5.5 -vs- MySQL 5.6 :

Observations :

- MySQL-5.6 is starting from 270K QPS on OLTP_RO and its regression has a less deep then Percona-5.5

- But since 64 concurrent RW users MySQL-5.6 is starting to hit trx_sys mutex contention which is impacting a lot its RW performance..

- and since 128 RW users hitting a dramatic regression on RW workload performance..

Well, all these results were unable anyway to give me a clear picture about what is going internally on all these contentions.. - the difference to day is too huge between the 5.5 and 5.6 code ;-)) So my main expectation was on the apples-to-apples comparison between MySQL-5.6 and Percona-5.6 which is fully based on 5.6 code...

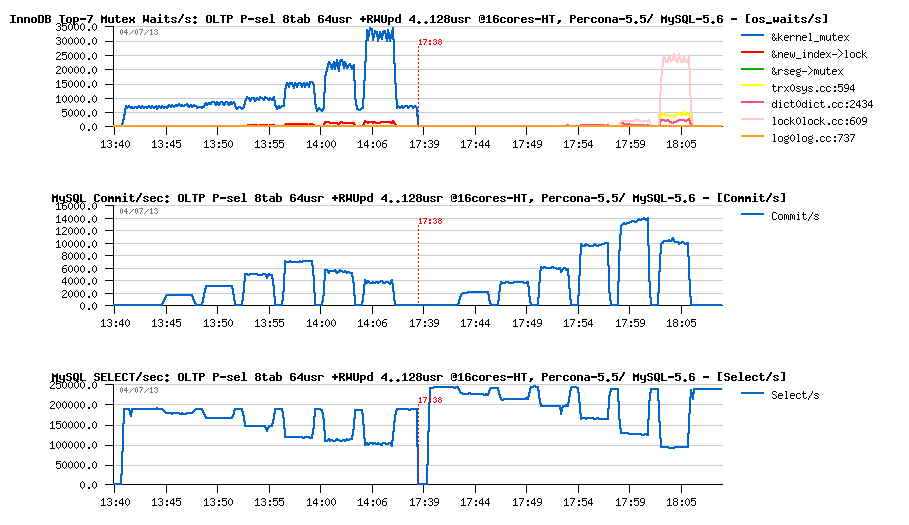

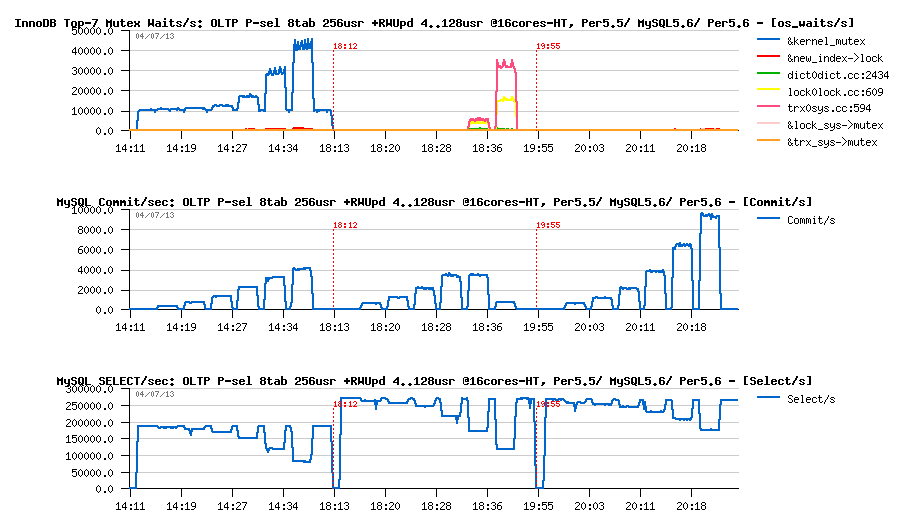

Here are the same tests, but including also results obtained on Percona-5.6-rc within the same configuration:

OLTP_RO 64usr + RW-Updates 4..128usr @16-cores-HT, Percona5.5 -vs- MySQL5.6 -vs- Percona5.6 :

Observations :

- first of all, there is very clearly seen the benefit of the MySQL 5.6 code base - 245K QPS on RO instead of 185K QPS on Percona Server once based on MySQL 5.6 code! ;-))

- then a clear benefit of proposed "TRX list" patch by Percona: by lowering trx_sys mutex contention, it's lowering also as the result the lock_sys mutex contention too..

- which is finally resulting in a better RW performance while keeping exactly the same RO regression..

- however, on this test case we're seeing only a secondary effect of the patch as trx_sys mutex contention is not on the top position..

But on 256 RO users we're starting to see it very well:

OLTP_RO 64usr + RW-Updates 4..128usr @16-cores-HT, Percona5.5 -vs- MySQL5.6 -vs- Percona5.6 :

Observations :

- I'd say "no comments" :-))

- lowered trx_sys mutex contention giving a way better performance here on Percona-5.6

INSTEAD OF SUMMARY

- So far, to "fix" MySQL-5.6 I think we have here two options: backport related to trx_sys mutex (and other) contentions improvements from 5.7, or simply apply a proposed by Percona patch.. (interesting that a very similar "fix" implementation was already tested by InnoDB Team in the past, but was rejected as not giving a complete solution to the problem)..

- For sure, a true fix for all these issues will come with MySQL 5.7..

- But probably as a "short term" the fix used here by Percona will be just "good enough" for MySQL 5.6 right now?..

Well, to get a full picture about the story you'll need to wait until the latest MySQL 5.7 code will become public (and believe me, there is already something to see, and compared to all presented here results as well ;-))

Rgds,

-Dimitri

blog comments powered by DisqusNote: if you don't see any "comment" dialog above, try to access this page with another web browser, or google for known issues on your browser and DISQUS..