« dim_STAT | Main | MySQL »

Saturday, 30 December, 2017

dim_STAT v.9.0 CoreUpdate-17-12 is here !

Year is finishing, and as a kind of "end-of-year" gift, I'm happy to

announce that a freshy new CoreUpdate-17-12 is available from now

! ;-))

IMPORTANT : this is a very "sensible" update, and you

cannot just to apply it on the top of already deployed dim_STAT instance

as before.. -- there was a need to fix several issues within "under

hood" binaries to make the new stuff working properly, so the new code

has simply no chances to work with old binaries.. So far, I decided to

make it all as a "single shot move" -- align a new update shipment with

a moving to "64bit" versions support only :

- e.g. this is not a new version of dim_STAT

- this is just a "remastered" 64bit release + several visible and internal fixes

- any 32bit releases are remained "as it", and it makes no more sense to continue to support them..

- 64bit versions of dim_STAT v.9.0 are available for Linux and MacOSX (macOS)

- any further CoreUpdate will work only with 64bit version having the latest supported binaries..

So, what about this "new stuff" requiring such deep changes to go till the binaries remastering ?.. -- a very long story short, this is all about support of SVG images ! ;-)) -- I've started to develop dim_STAT exactly 20 years (!!) ago.. (hard to believe time is flying so fast..) -- initially dim_STAT used Java Applets (it was very lightweight (yes! it was so 20 years ago ;-)) and it was really cool, "live", etc.) -- but then Java support in a browser became only heavier and heavier, so I've added PNG images support (which could bring back the initial lightweight to dim_STAT and make it "usable" again ;-)) -- and today, things are changing again ;-)) -- "retina" and other "high resolution" screens become more an more popular, and on these screens my previously "good enough" PNG graphs are looking just "ugly" (to be polite ;-)). After testing and analyzing tons of various JS-based (or other) live graphing tools/libs, I've finally stopped my choice on SVG ! -- it's already supported by most of web browsers, still lightweight, and has a huge advantage -- it's extremely well "readable" !! and you can scale (!!) it very easily as much as you want ;-)) (so, no more color confusions and simply ugly graphics ;-))

An SVG graph is looking like this :

well, this is just a snapshot, but hope you already can get an idea about how much "more clean" your graphs could be ;-))

NOTE : some web browsers (for ex. FireFox) may require to not be limited in the "smallest" font size to draw SVG images correctly (as the text you see on your graphs is reproduced by your browser itself).

Now, few words about how SVG is integrated in dim_STAT :

- you have a new option for Graph image now : SVG (default)

- and in fact at any time you're selecting SVG or PNG -- your graph is generated in both formats on the same time

- however, the same image "size" may look differently in PNG vs SVG..

- for this reason there is an additional option : SVG Scale (which is x1.0 by default, but you may change it as you like)

- and most of dim_STAT "modules" are considering SVG option since now ;-))

So far, what is new in CoreUpdate-17-12 :

- SVG support !

- mandatory : remastered dim_STAT v.9.0 64bit version

- always generating PNG & SVG together

- SVG Scale option (web interface)

- default SVG Scale=1.0

- Snapshots :

- always containing PNG & SVG

- editing :

- allow image reordering by mouse dragging

- use SVG or PNG images according to what was recently used..

- redesigned Snapshots list :

- select criteria : Title pattern, order by time / title

- show all || first / last [N]

- option : show first N SVG/PNG images..

- option : show notes..

- click on title link involves snapshot editing

- redesigned Published Snapshots :

- have now also PNG and SVG document versions (HTML)

- HTML documents are now composed from first 2 images of each Snapshot + its notes, then <<More>> link is pointing on a full Snapshot page..

- PDF documents format remains the same (single document starting with a list of links to all included Snapshots)..

- Multi-Line stats :

- "CacheList" feature to speed-up names look-ups ! (generated once, then only re-used, can be re-generated again at any time on demand)

- allow bigger varchar limits for Add-On "name" collumn : 8/ 16/ 24/ 32/ 48/ 64/ 80/ 96/ 128

- Report Tool :

- you can now add Published Snapshots to your reports !

- code remastering / cleanup

- better / more accurate formatting

- remove external / java editors

- minor bug fixes..

- Bookmarks :

- Duplicate existing Preset

- Edit Preset (via checkbox list + reorder)

-

code remastering..

- STAT-service :

- STAT-service script now saves PID on start

- new commands: status, restart

- status : check if process with saved PID is running

- stop : call x.killSTAT with saved PID

- x.killSTAT : remaster to use CMD or PID

- restart : calls itself with stop, then start

- respecting chkconfig standard

- respecting LSB standard

- to setup under systemd :

- # ln -s /apps/STATsrv/STAT-service /etc/init.d

- # systemctl enable STAT-service

- # service STAT-service start

- # service STAT-service status

- # /apps/STATsrv/STAT-service status

- involving ALARM signal : ejecting on x10 Timeout non-activity period in all MySQL/ PG/ ORA scripts !!!

- => this could be very important in case when your monitored database engine becomes "frozen", but still continue to accept new connections..

- so dim_STAT will not see any stats coming from your database and suppose the connection was lost..

- and dim_STAT will then start a new stat command..

- which may still connect to your database, but not go any further, as database is frozen..

- and this may continue endless in loop every time once "connection timeout" is expired..

- and your server will get tons of stat commands started on its side and trying to collect some stats from your frozen database engine..

- so, to avoid this, each stat command is now having its own "non-activity alarm" -- if within x10 times Timeout period there was nothing collected from a given database, the stat command will self-eject by alarm event ;-))

- innodbMUTEX : advanced aggregation with tot@ / max@ / avg@ wait stats !

- Linux netLOAD_v2 : reports also MB/sec + fixed counters overflow

- Linux mysqlSTACK / ProcSTACK (based on quickstack from Yoshi (@FB))

- Linux mysqlCPU / ProcCPU (based on tiptop, not compatible with "perf", use with caution)

- Linux PerfCPUSTAT (based on "perf", reports CPU HW counters)

- Linux PerfSTAT_v2 (improved PerfSTAT)

- extended with Cycles/s numbers !!

- new option : -Event ... (ex: -Event cycles:u)

- EasySTAT :

- extended on Linux with : mysqlSTACK, mysqlCPU, netLOAD_v2, PerfCPUSTAT, PerfSTAT_v2

- now also auto-generating start/end log messages (to simplify post-analyze)

-

LoadDATA.sh is allowing now to pass args from command line (path

to BatchLOAD and DB name)

- General :

- Analyze Top-N values : choice => MIN/ MAX/ AVG/ 95% !

- export data in CSV format via Analyze page & dim_STAT-CLI

- tag LOG Messages with different colors according text pattern !

- on stat names checkbox => popup with note description (when available)

- improved auto-scaling Y-axe values according image size !

- improved default HTML style, re-look, etc.

- New Add-Ons & their Bookmarks :

- mysqlSTACK

- mysqlCPU

- ProcSTACK

- ProcCPU

- netLOAD_v2

- PerfCPUSTAT

- PerfSTAT_v2

- ...

- minor bug fixes..

Hope you'll enjoy all this new stuff as I'm already doing ;-))

For those who already using dim_STAT and want to preserve their collected data -- here are few simple steps to make the migration to the latest 64bit version smooth :

- first of all - no matter if you used 32bit version before, or 64bit (or SPARC, etc.), instructions are exactly the same

- however, even the version remains the same, we're moving to new remastered binaries..

- so the whole process is looking as "migration" (rather a simple CoreUpdate apply)

- (and if your data are critical, better to try you migration steps on another host first ;-))

- so far, let's suppose you already deployed dim_STAT into "/apps" on your host1

- 1) stop dim_STAT : # /apps/ADMIN/dim_STAT-Server stop

- 2) backup your whole (!!) database directory : /apps/mysql/data

- 3) if you created any Reports or Snapshots, then backup also the whole web server docs directory : /apps/httpd/home/docs

- 4) now on host2 install the latest 64bit dim_STAT version (supposing it'll be re-installed to the same default "/apps" path)

- 5) restore your "docs" backup into "/apps/httpd/home/docs" on host2

- 6) from your databases backup restore all to "/apps/mysql/data" on host2 except the following directories :

- /apps/mysql/data/mysql

- /apps/mysql/data/performance_schema

- /apps/mysql/data/dim_00

- 7) start dim_STAT on host2 : # /apps/ADMIN/dim_STAT-Server start

- 8) connect to your newly installed dim_STAT on host2 and check you can find all your previously collected data and created documents / snapshots..

- 9) if something is going odd.. -- then ping me back, let's see what is going wrong ;-))

- 10) if all is fine, then test if for a while, and once everything is really ok, then you're comfortable to migrate your host1 too ;-)) (and don't hesitate to ping me with good news too ;-))

Crossing fingers.. -- hope all will go just fine for you ;-))

And for the end.. -- there is yet "one more thing"..

This "one more thing" was inspired by observing many dim_STAT users doing some completely "unexpected things" (unexpected in my mind, but looking very "natural" for those who are doing) -- honestly, I'm really surprised by all use cases I've seen over past years (even users generating graphs via CLI commands involved from app servers and then grouping them into various documents, charts, etc.) -- but the most painful for me was to see users trying to involve "web oriented" actions in dim_STAT via curl, wget, etc.. -- this could bring to something wrong as there could be tons of options used within each POST/GET order, and all expected calls could be just broken after further CoreUpdates..

And to make your life more simple for such use cases, let me present you the REST-like Interface available since CoreUpdate-17-12 ! ;-))

dim_STAT-REST interface is supporting the following commands right now :

- DB_LIST -- list all available databases

- DB_CREATE -- create a new database

- HOST_LIST -- print current host list in database

- HOST_ADD -- add new hostname into host list in database

- HOST_STATS -- request the list of available STATS from host STAT-service

- COLLECT_LIST -- list all available STAT collects in database

- COLLECT_NEW -- create and start a New Collect in database

- COLLECT_STOP -- stop Active Collect(s) in database

- COLLECT_RESTART -- restart Stopped Collect(s) in database

- LOG_MESSAGE -- add a LOG Message to database

the output of each command is going in simple "pre-formatted" ASCII text, easy to parse and check.

Here is an example of "COLLECT_LIST" output :

==========================================================================================

dim_STAT-REST (dim) v.1.0

==========================================================================================

> CMD: COLLECT_LIST

----------------------------------------------------------------------------------------

1 | goldgate | -OFF- | 1998-12-18 16:28:27 | 15 sec. | Demo 1

6 | test | -OFF- | 2002-10-20 23:37:01 | 15 sec. | test

7 | bezout | -OFF- | 2003-06-26 13:46:51 | 30 sec. | test err

9 | fidji | -OFF- | 2003-09-17 13:23:12 | 10 sec. | test MPXIO + nocanput

12 | test | -OFF- | 2003-10-06 17:15:43 | 20 sec. | test MTB bug

15 | localhost | -OFF- | 2004-09-26 21:48:49 | 20 sec. | test

16 | localhost | -OFF- | 2004-09-26 21:51:59 | 20 sec. | test

17 | gauss | -OFF- | 2004-10-01 12:29:37 | 20 sec. | test RESTART

18 | neel | -OFF- | 2004-10-01 12:30:00 | 20 sec. | test RESTART

19 | monod | -OFF- | 2004-10-01 12:33:52 | 20 sec. | test RESTART

20 | monod | -OFF- | 2004-10-01 20:36:37 | 20 sec. | test RESTART

21 | localhost | -OFF- | 2004-10-12 20:30:51 | 15 sec. | test statOEE

22 | dimitri | -OFF- | 2007-01-21 21:06:43 | 5 sec. | test IObench

23 | dimitri | -OFF- | 2009-06-15 16:36:49 | 10 sec. | System Load...

----------------------------------------------------------------------------------------

> OK

==========================================================================================

but I think more simple is to discover this all by yourself -- right now, try to execute the following command from your shell to test dim_STAT installed on your host2 (port 80) :

$ curl -L "http://host2:80/cgi-bin/WebX.mySQL/dim_STAT/x.REST"

this will print you the following help message :

==========================================================================================

dim_STAT-REST (dim) v.1.0

==========================================================================================

> Usage: curl -L "http://host2:80/cgi-bin/WebX.mySQL/dim_STAT/x.REST?CMD=Command[&..options..]"

CMD=DB_LIST -- list all available databases

CMD=DB_CREATE -- create a new database "Name"

&DB=Name -- db name

&Engine=InnoDB|MyISAM -- db engine (InnoDB or MyISAM)

&Passwd=password -- optional password to protect admin actions

CMD=HOST_LIST -- print current host list in database "Name"

&DB=Name -- db name

CMD=HOST_ADD -- add new hostname into host list in database "Name"

&Host=hostname -- new hostname (format: [alias/]hostname[:Port])

&DB=Name -- db name

&RESET=1 -- optionally: reset hostlist to empty

CMD=HOST_STATS -- request the list of available STATS from host STAT-service

&Host=hostname -- alias OR hostname (format: [alias/]hostname[:Port])

&DB=Name -- db name

CMD=COLLECT_LIST -- list all available STAT collects in database "Name"

&DB=Name -- db name

CMD=LOG_MESSAGE -- add a LOG Message to database "Name"

&DB=Name -- db name

&Message=text -- text message

[&Host=hostname] -- hostname (multiple Host args can be used)

[&ID=id] -- Collect ID (multiple ID args can be used)

* Host and ID are optional :

> if ID is given : use provided ID(s) only

> if no ID nor Host : add the message to all active collects

> if only Host : add the message to active collects matching hostname(s)

CMD=COLLECT_NEW -- create and start a New Collect in database "Name"

&DB=Name -- db name

&Host=hostname -- hostname (only one Host can be user at time)

&Timeout=Nsec -- STATs timeout in seconds

&Title=title -- Collect title

&STATS=list -- list of STATs to collect: stat1[,stat2[,stat3]...] or "all"

all: means all STATs available from Host STAT-service

[&LOG=filename] -- full filename of LOG file to watch

[&Message=text] -- text message to log Collect start

CMD=COLLECT_STOP -- stop Active Collect(s) in database "Name"

&DB=Name -- db name

[&Message=text] -- text message to log on Collect(s) stop

[&Host=hostname] -- hostname (multiple Host args can be used)

[&ID=id] -- Collect ID (multiple ID args can be used)

* Host and ID are optional :

> if ID is given : use provided ID(s) only

> if no ID nor Host : stop all active collects

> if only Host : stop active collects matching hostname(s)

CMD=COLLECT_RESTART -- restart Stopped Collect(s) in database "Name"

&DB=Name -- db name

[&Message=text] -- text message to log on Collect(s) restart

[&Host=hostname] -- hostname (multiple Host args can be used)

[&ID=id] -- Collect ID (multiple ID args can be used)

* Host and ID are optional :

> if ID is given : use provided ID(s) only

> if no ID nor Host : restart all recently stopped collects

> if only Host : restart recently stopped collects matching hostname(s)

...

==========================================================================================

## ERROR:

=> CMD is not filled !!

==========================================================================================

while you can see there many options listed, there are many actions are simplified "by default" -- and to explain this, let me show you a simple use case of the whole workflow by example :

- you're starting a testing with say "Customer 1234"

- so, first you creating a dedicated database CU_1234_YourName

- (adding your name to dbname to be sure the name is unique ;-))

- then you adding to this database the hosts you're wanting to use (say: host_N1, host_N2, host_N3)

- (note: this also can be the same HW server, but running several STAT-services (each one for different db instance, etc.)

- once you're ready, you're :

- starting New Collect for host_N1

- starting New Collect for host_N2

- starting New Collect for host_N3

- NOTE: instead of building the list of stats you want to collect from your hosts, you can use "STATS=all" option, which will collect everything -- this could be dangerous (sometimes too much is too much ;-)) -- but you can easily limited "everything" to "just what you need" by editing the STAT-service "access" file (and leave uncommented there only the stats you'll really need) -- so, again, you can keep your own "pre-configured" STAT-service tarball, deploy & start it on your host(s) before any testings, and then in your scripts always use just "STATS=all" (regardless which system you're using and delegate it to your pre-defined STAT-service config ;-))

- after what, you can run your first test workload..

- your test script may contain in any place a command to send a Log Message

- NOTE: without mentioning any ID in the command, the Message will be automatically added to all currently active Collects !

- (so, in your scripts you even don't need to worry which exactly hosts are used, etc. -- all you need to know is the URL of your dim_STAT server and the dbname you're using ;-))

- supposing you got your first results, and now need a feedback from Customer/Dev about, so no more tests for the moment.. -- then you just involve COLLECT_STOP for your database

- NOTE: without any ID provided the command will stop all active collects within your database (so, no need to worry you forgot anyone ;-))

- then, few days later, you have more info, and need to run other tests within the same conditions and on the same hosts..

- so, all you need to do is just to involve COLLECT_RESTART command, and again, without any ID and by only giving DBNAME the tool will restart the latest most recent existing collects ;-))

- and in case you need to run some tests only on say "host_N2" => you then just giving "DB=CU_1234_YourName&Host=host_N2" and the tool will automatically find the most recently created Collect corresponding to "host_N2" and restart it !

- same, your test script continues to send Log Messages, and if the only host_N2 Collect is active during this time => then only host_N2 Collect will log them, and not other Collects ;-))

- and then again, but involving COLLECT_STOP with no ID, it'll stop all your running collects, no need to worry to miss any one of them ;-))

Well, don't hesitate to ping me if you need any more details !

That's all for the moment. As usual, all the stuff above is available for free download from my site :

- http://dimitrik.free.fr

Rgds,

-Dimitri

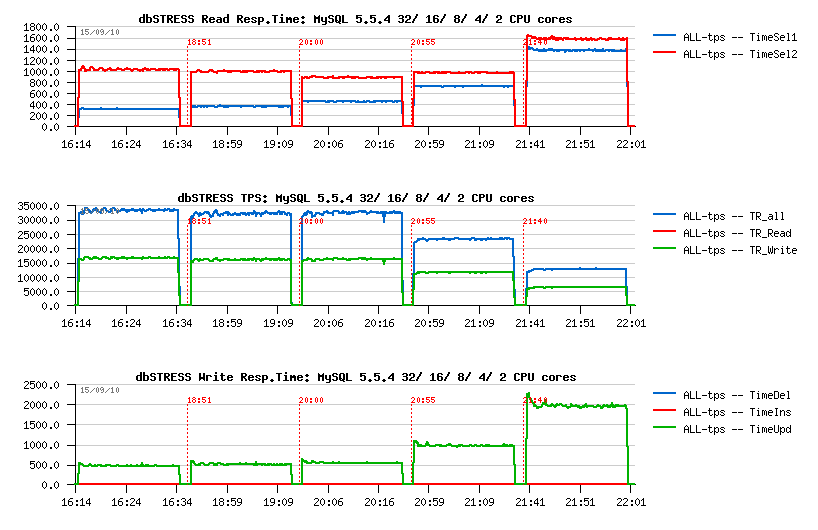

Tuesday, 12 February, 2013

MySQL Performance: MySQL 5.6 GA -vs- MySQL 5.5 tuning details

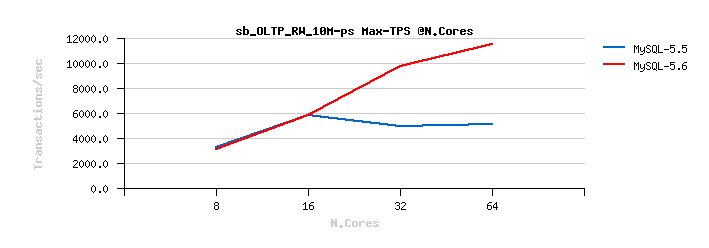

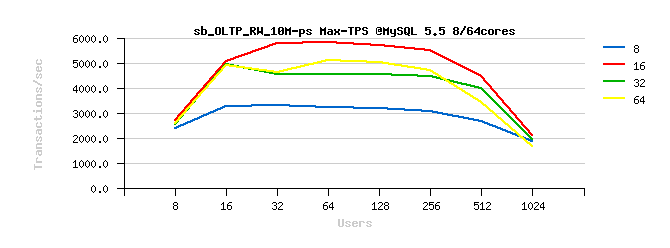

This post is the next part following the initial article about MySQL 5.6 vs 5.5 benchmark results (and MySQL 5.6 scalability).

The focus in this article is on the "tuning impact" used during the published tests, and also I have for you few more test results to present which were missed initially -- Sysbench tests using 8 tables instead of a single one (as it does by default).

All my.conf setting I've used during the tests was already presented within an initial article, so let's go directly to details about special tuning setting. While many parameters are pretty important (like use or not use O_DIRECT, choose a right REDO log and Buffer Pool size, flush or not flush neighbor pages, right I/O capacity, etc.) -- but all these ones less or more predictable, and once chosen right, not giving you many troubles (unless something did not change in your workload or data volume). But there are two of them which are directly related to internal InnoDB contentions and should be very well tested before applied:

Adaptive Index Hashing (AHI, innodb_adaptive_hash_index, default=1) is helping a lot and in many cases, but sometimes supporting very badly concurrent access or concurrent changes which is expressed by growing contention on the "btr_search_latch" rw-lock. Such a contention may happen not only on a mixed Read-Write (RW) activity, but on a Read-Only (RO) as well. And there is no general rule, and the only real test may give you a real answer (while "generally" you may expect a higher contention on a RW workload rather on a RO)..

Spin Wait Delay (SD, innodb_spin_wait_delay, default=6) value is

used on spin wait loops of mutexes and rw-locks in InnoDB. The setting

is giving the "max" value for the random wait delay interval chosen on

spin wait (expecting a mutex or rw-lock will be free soon, InnoDB is

"spinning" (looping) on CPU involving "pause" instructions and trying to

acquire the lock in the next loop). In fact this solution is "hiding"

contention rather solving it, and may use CPU time a lot just for

"spinning" (while we can do some other and more useful work) -- but in

any case it gives you an expected benefit, and InnoDB locking is going

really faster, and usually you're finally obtaining a better performance

within your workload.. However, again, there is no "silver bullet", and

the "right" value cannot be suggested generally, and that's why in MySQL

5.6 the default value remaining the same as in 5.5, while a bigger one

can be more appropriate, but the result may still vary depending on your

workload, CPU number, and CPU frequency on your server, and so on. At

least the variable is dynamic and you can test it live on your workload

(as I've made in RO testing, looking

for the most optimal value).. I know that on my server this value

may vary from 6 (default) to 128, and give a pretty interesting

performance impact! For MySQL 5.6 tuning os this setting is simply a

must, and you'll see how on one of the presented tests performance is

dropping already on 64 concurrent user sessions (when default setting is

used (sd=6)), while with a more appropriated one (sd=96) performance

remains much more stable and much more higher!..

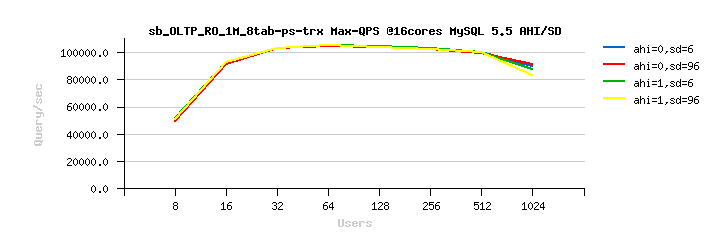

So far :

- the following graphs are representing test results obtaining with variations: AHI= 1 or 0, SD= 6 or 96

- in fact there are 4 combinations, and each one is named like "ahi=n,sd=m", where n is 1 or 0, and m is 6 or 96

- on configurations with few CPU cores having a smaller SD value seems to be better in most of cases, while with more CPU cores a higher SD value is preferable (while there is may be some exceptions as well)

- so, don't hesitate to test and you'll get the right answer for your workload

Then, for every test case the following graphs are presented :

- Best-to-best TPS/QPS performance : there are both, TPS and QPS graphs, so you're able to see a ratio between transactions and queries/sec -- personally I prefer to see queries/sec performance, as it speaks much better (reaching near 300K QPS with all SQL and MySQL layers overhead is telling more than 20K TPS, except if you're aware about what exactly your transactions are doing)..

-

And then impact of SD and AHI pair within each CPU cores configuration:

- Tuning impact @16cores

- Tuning impact @32cores

- Tuning impact @32cores with HT-enabled

Hope I did not forget anything. Here are the results:

Sysbench OLTP_RO

Best-to-best TPS/QPS performance:

Tuning impact @16cores:

Tuning impact @32cores:

Tuning impact @32cores with HT-enabled:

Sysbench OLTP_RO 8-tables

Best-to-best TPS/QPS performance:

Tuning impact @16cores:

Tuning impact @32cores:

Tuning impact @32cores with HT-enabled:

Sysbench OLTP_RO-trx

Best-to-best TPS/QPS performance:

Tuning impact @16cores:

Tuning impact @32cores:

Tuning impact @32cores with HT-enabled:

Sysbench OLTP_RO-trx 8-tables

Best-to-best TPS/QPS performance:

Tuning impact @16cores:

Tuning impact @32cores:

Tuning impact @32cores with HT-enabled:

Sysbench OLTP_RO Point-Selects

Best-to-best TPS/QPS performance:

Tuning impact @16cores:

Tuning impact @32cores:

Tuning impact @32cores with HT-enabled:

Sysbench OLTP_RO Point-Selects 8-tables

Best-to-best TPS/QPS performance:

Tuning impact @16cores:

Tuning impact @32cores:

Tuning impact @32cores with HT-enabled:

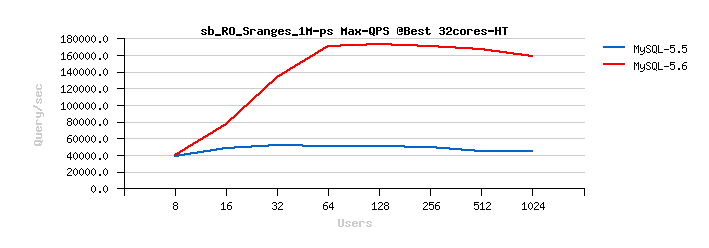

Sysbench OLTP_RO Simple-Ranges

Best-to-best TPS/QPS performance:

Tuning impact @16cores:

Tuning impact @32cores:

Tuning impact @32cores with HT-enabled:

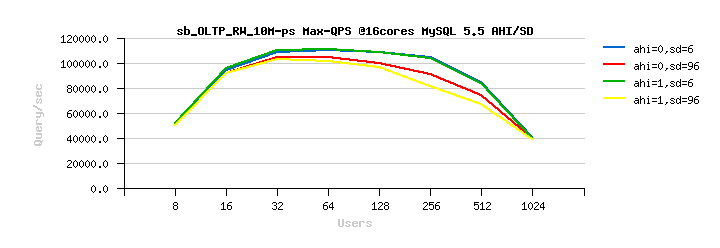

Sysbench OLTP_RW 10M

Best-to-best TPS/QPS performance:

Tuning impact @16cores:

Tuning impact @32cores:

Tuning impact @32cores with HT-enabled:

Sysbench OLTP_RW 10M 8-tables

Best-to-best TPS/QPS performance:

Tuning impact @16cores:

Tuning impact @32cores:

Tuning impact @32cores with HT-enabled:

SUMMARY :

- as you can see, Spin Wait Delay is playing a very critical role for performance on your workload..

- as well enabling or disabling AHI may bring some unexpected regression, select it right..

- using or not using Hyper Threading (HT) for MySQL server is very depending on a workload too..

- so, the main rule is: keep in mind all these settings and tune your MySQL 5.6 server to the best! ;-)

- more to come..

to be continued..

Rgds,

-Dimitri

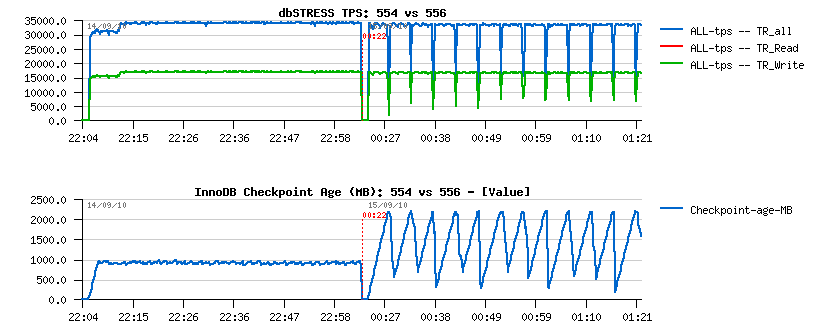

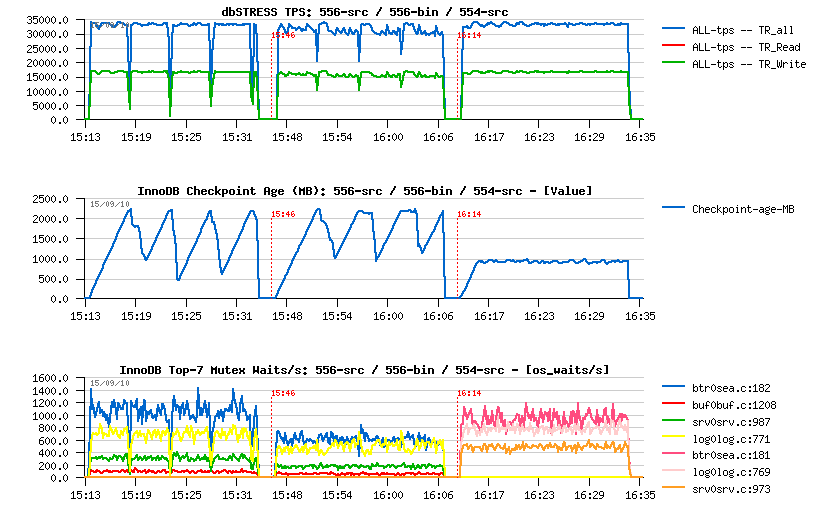

Monday, 11 February, 2013

MySQL Performance: MySQL 5.6 GA and MySQL 5.5 scalability

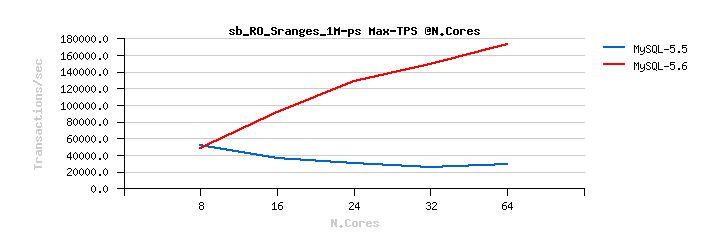

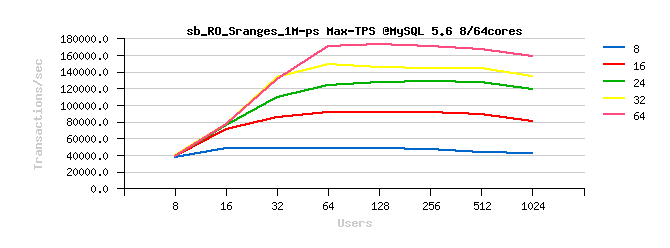

As promised, this is the first part of details about MySQL 5.6 vs 5.5 benchmark results I've published earlier last week. The following graphs are representing scalability results obtained for both MySQL versions on the published tests (and I have yet more test results to present to you, but these test's are still running)..

Few remarks based on comments and discussions I've got since then:

- I'm using a "true" 32cores server (true 32 cores, each one yet has 2 threads (HT), so 64 threads in total)

- I'm not using "CPU threads" terminology as I'm finding it confusing (for ex. when you're reading "16 CPU threads" you may not really know if there were 16cores with HT-disabled, or 8cores with HT-enabled)..

- during all the tests I've disabled HT (as it took days and days more to test every case I'm interesting in)..

- also, in many tests on 5.5 until now I've observed a worse performance when HT is enabled.. (well, depends on a workload, and yes, there was an improvement made too over a time)

- MySQL 5.6 is running more often better when HT is enabled (but again, depends on a workload)

- so, to compare apples-to-apples and reduce my test cycles, HT was always disabled, except on the last one - "64cores" which you should read as "32cores with HT enabled" -- I've made an exception for this one just to see what is waiting us ahead after 32cores ;-)

- so for all tests when you're reading "N cores" it means exactly N physical CPU cores (HT disabled), except for 64cores = 32cores HT-enabled (which was just too long for graph legends)..

- also, during all the tests both MySQL servers are running with "jemalloc" library instead of default malloc, as it's the best malloc on Linux today and I'm using it during all my tests since probably more than 2 years now (but don't think to precise, as it's a part of my "default" config, so did not suppose that somebody is not using it when running MySQL Server on Linux.. - while I always have a dedicated slide in my MySQL Performance presentations ;-))

- for the same reasons the MySQL config parameters I've added in the previous article are not Sysbench specific or oriented -- it's just a "start kit" I'm using by default, and then adapt according a workload.. - and for Sysbench load such a config is more than ok ;-)

- if anything else I forgot to mention - please, just ask ;-)

And now the results.

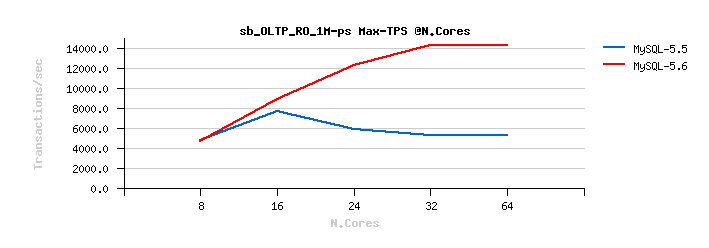

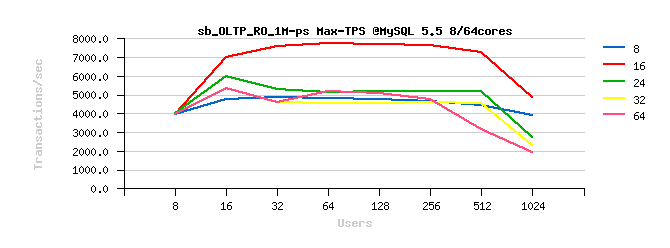

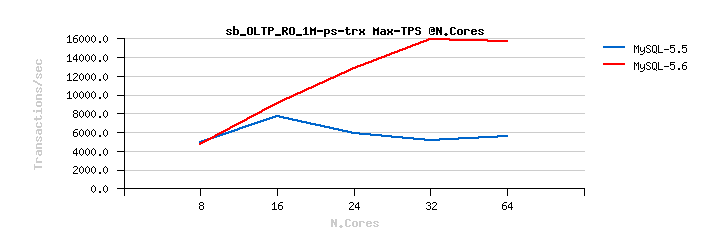

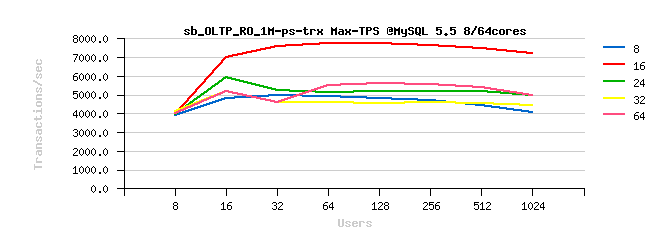

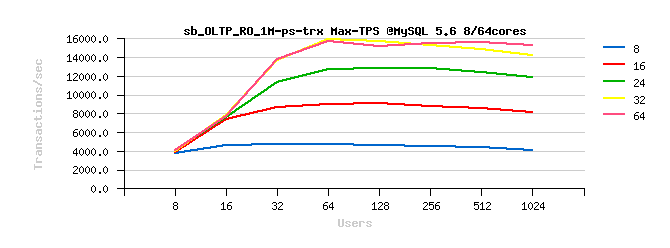

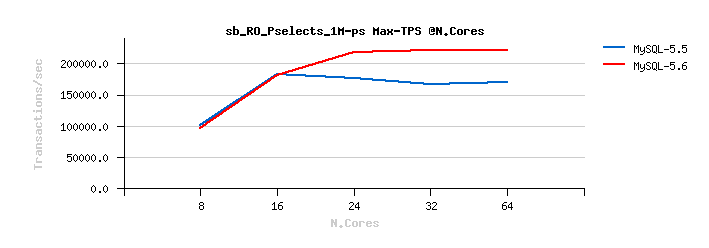

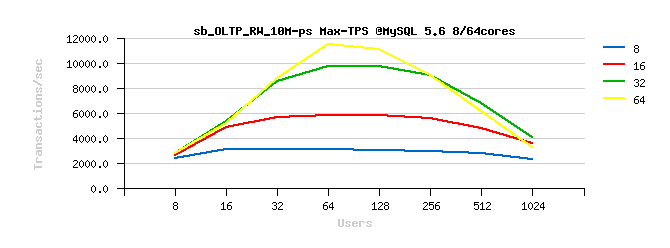

Don't think you'll need any comments.. Except maybe just this one:

- MySQL 5.5 was already scaling less or more well on small servers, up to 16cores..

- except that if you need more power and have more cores available on your host, there was no way to get better just by adding cores.. (but at least there was no dramatic regression anymore as we observed just few years before ;-))

- MySLQ 5.6 is going way further now, and able to show you a pretty visible performance improvement when more cores are available on your server!

- We know, we're not perfect yet.. - but a huge gap between MySQL 5.6 and 5.5 is already here! ;-)

- And it's not only about better performance with more CPU/cores, there are also a lot of new features + improved design in many many places (and if you've missed something, there are long and short lists available, as well a very good DBA & developer guide with many details)

- So, don't wait more, and start to use your servers with MySQL 5.6 on their full power right now!

Sysbench OLTP_RO :

Sysbench OLTP_RO-trx :

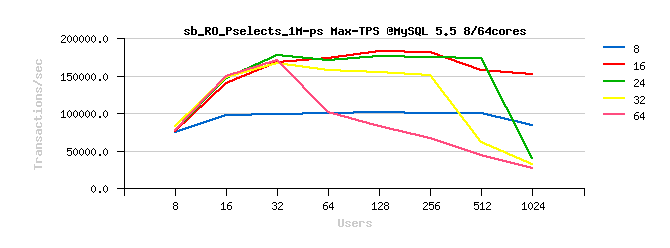

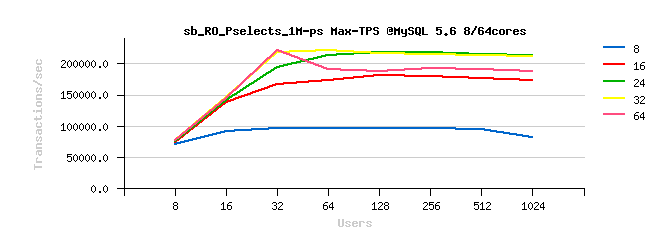

Sysbench OLTP_RO Point-Selects :

Sysbench OLTP_RO Simple-Ranges :

Sysbench OLTP_RW :

INSTEAD OF SUMMARY :

- open your TODO right now..

- and just write on top: Start upgrade to MySQL 5.6 ASAP !!! ;-)

to be continued..

Rgds,

-Dimitri

Monday, 28 January, 2013

MySQL Performance: InnoDB heavy I/O RW workloads limits in 5.6

This article was initially planned to follow the previous posts about RW

(Read+Write) workloads on MySQL & Linux (part#1,

part#2)...

But, when I've started to run real MySQL/InnoDB IO-bound tests on the

Fusion-io card, I've quickly realized that I'm hitting so hot internal

contentions within InnoDB code, that I'm simply unable to use storage

capacity on its full power.. So, I've moved to another server (having

SSD only), and then continued with it (as to explain RW limits it'll be

pretty enough). Also, curiously, on this server XFS performance on

writes is way better than EXT4 (while on the the previous server XFS got

some unexpected problems, seems to be known bugs)..

So far, for

the following tests I've used:

- Server : 32cores bi-thread (HT) Intel 2300Mhz, 128GB RAM

- OS : Oracle Linux 6.2

- FS : XFS mounted with "noatime,nodiratime,nobarrier,logbufs=8"

- MySQL : 5.6

- and as seen as the most optimal from the previous I/O testing, MySQL is using O_DIRECT and AIO for I/O operations (mainly write-oriented here)..

- adaptive hash index feature (AHI) is turned off (AHI lock contentions is a different issue, should be fixed in 5.7)

my.conf:

-------------------------------------------------------------------------

max_connections = 4000

key_buffer_size = 200M

low_priority_updates = 1

sort_buffer_size = 2097152

back_log = 1500

query_cache_type = 0

# files

innodb_file_per_table

innodb_log_file_size = 1024M

innodb_log_files_in_group = 3

innodb_open_files = 4000

table_open_cache = 8000

table_open_cache_instances = 16

# buffers

innodb_buffer_pool_size = 64000M

innodb_buffer_pool_instances = 32

innodb_log_buffer_size = 64M

join_buffer_size = 32K

sort_buffer_size = 32K

# tune

innodb_checksums = 0

innodb_doublewrite = 0

innodb_support_xa = 0

innodb_thread_concurrency = 0

innodb_flush_log_at_trx_commit = 2

innodb_flush_method = O_DIRECT

innodb_max_dirty_pages_pct = 50

innodb_use_native_aio =1

innodb_stats_persistent = 1

innodb_spin_wait_delay =96

# perf special

innodb_adaptive_flushing = 1

innodb_flush_neighbors = 0

innodb_read_io_threads = 16

innodb_write_io_threads = 4

innodb_io_capacity = 10000

innodb_purge_threads =1

innodb_adaptive_hash_index = 0

# Monitoring

innodb_monitor_enable = '%'

performance_schema = ON

performance_schema_instrument = '%=on'

-------------------------------------------------------------------------

An then, let's start from the beginning.. ;-))

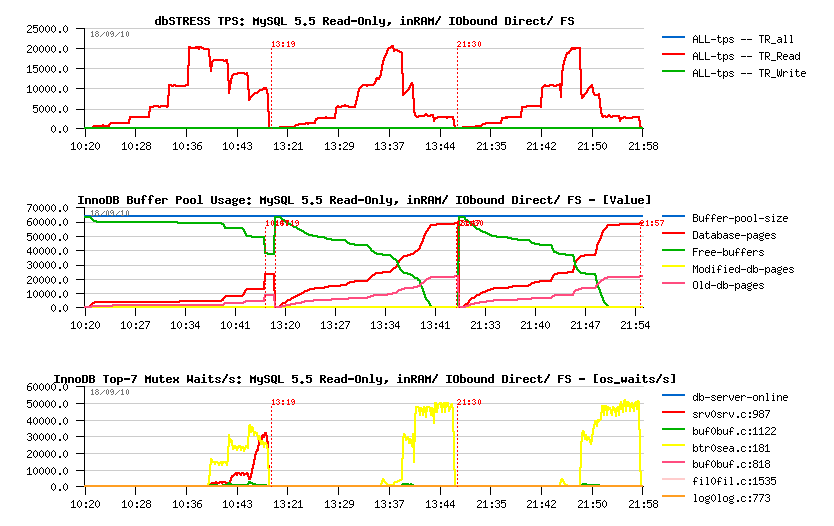

What was wrong with MySQL/InnoDB on RO (Read-Only) workloads?..

- the main goal here is to bring data pages as fast as possible into the Buffer Pool (BP) to get them cached; and if BP is not big enough to keep the whole active data set -- then adding efficient BP management to remove the most unused pages first when reading the new ones..

- the positive thing here that reads in most cases are completely independent between user threads, so all the I/O related activity will most likely depend only of your storage speed..

- except if you're not hitting any InnoDB internal contentions ;-)

- the main one in the past was "BP mutex" contention (InnoDB, fixed in 5.5 by introducing BP instances)

- then "kernel_mutex" (InnoDB, removed in 5.6)

- then "trx_sys" (InnoDB, in 5.6 decreased by introducing of READ-ONLY transactions + lowered by increasing "innodb_spin_wait_delay", real fix is planned for 5.7)

- then MDL related locks (MySQL server, partially fixed in 5.6, real fix is planned for 5.7)

- however, even having all these contentions, it's still possible to keep performance level stable up to 1024 concurrent users sessions and more (see these posts for details) and without involving any throttling (like InnoDB thread concurrency) or special thread management like (Thread Pool extension)..

While, thinking well, the use of the Thread Pool is simply the must for any system having a big number of active user sessions -- OS scheduler will simply unable to manage them right (it has no idea who is doing what, who is locking what, who is waiting for what, and so on.. -- most of these things are internal to the MySQL/InnoDB code and not really visible to OS). Then, usually once your number of active threads out-passing x3 time the number of available CPU cores on your server, your CPU time will be very likely spent more and more to simply manage them (OS scheduling), rather to get a real work done.. As well, don't forget that N cores are able to run on the same time only N threads, and not more ;-) So, Thread Pool is really helping here and let you use your server HW more optimally..

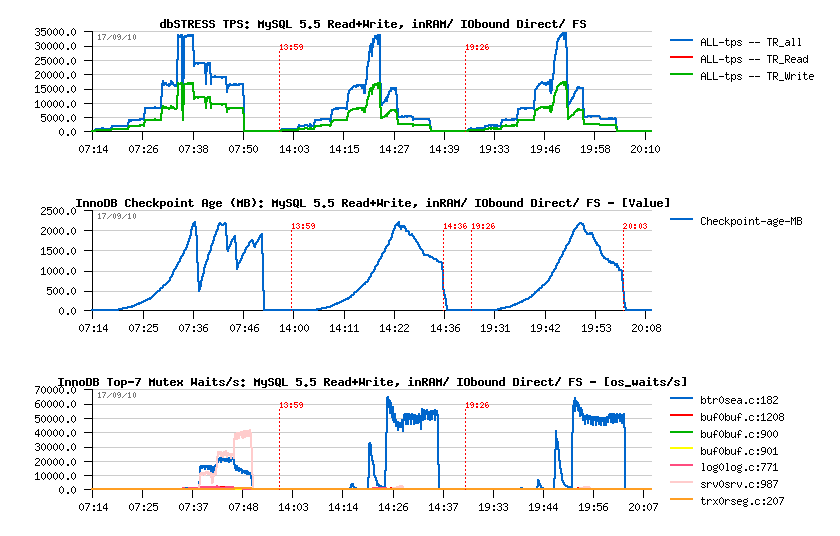

Well, and what about RW workloads now?..

- MySQL 5.6 brings many improvement to get thing working right (improved Adaptive Flushing, Page Cleaner thread, Multiple Purge Threads, over 4GB REDO logs, etc.)

- but as you're constantly writing, you're very I/O dependent on RW workloads..

- with increasing number of concurrent users your I/O activity will be increased yet more faster (more REDO log records, more dirty pages flushing, more purge activity, etc.)..

- and combined with the increased internal concurrency contentions in the code, you may be even unable to involve the same amount of I/O write requests as on a lower load..

- Thread Pool may sill help here to keep contentions level constant..

- but things are changing A LOT not even with increased number of concurrent users, but with increased size of your database! - and here only a proper fix will help.. -- means we have many challenges for performance improvement in MySQL 5.7 ;-))

Let's start with a simple case first..

Test details:

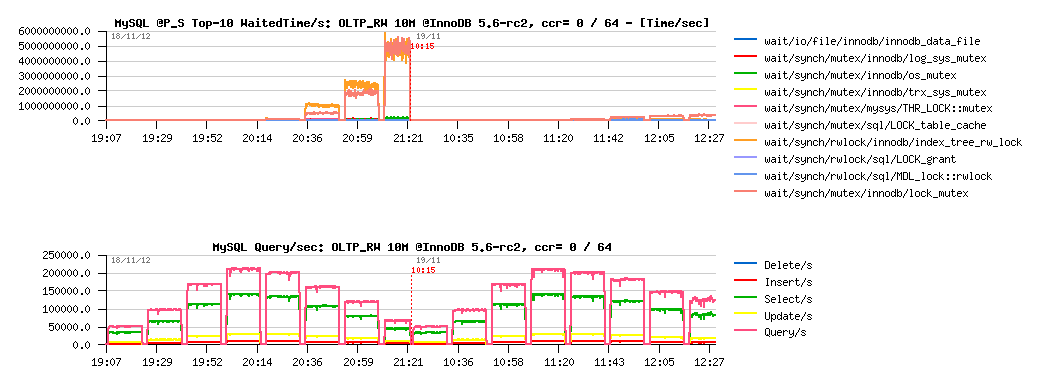

- Sysbench OLTP_RW (default, single table), 10M records, InnoDB engine

- workload is growing from 8, 16, 32 .. 1024 concurrent users

- innodb_thread_concurrency = 0 / 64 (just to see if it still helps)

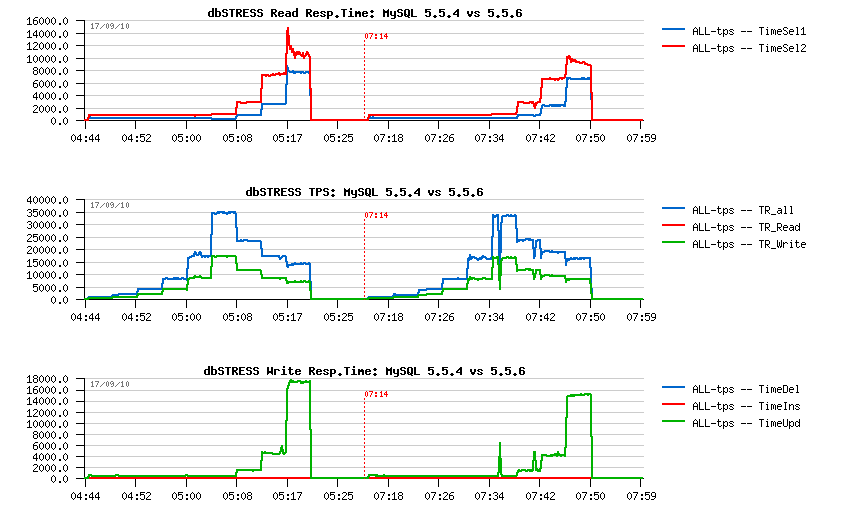

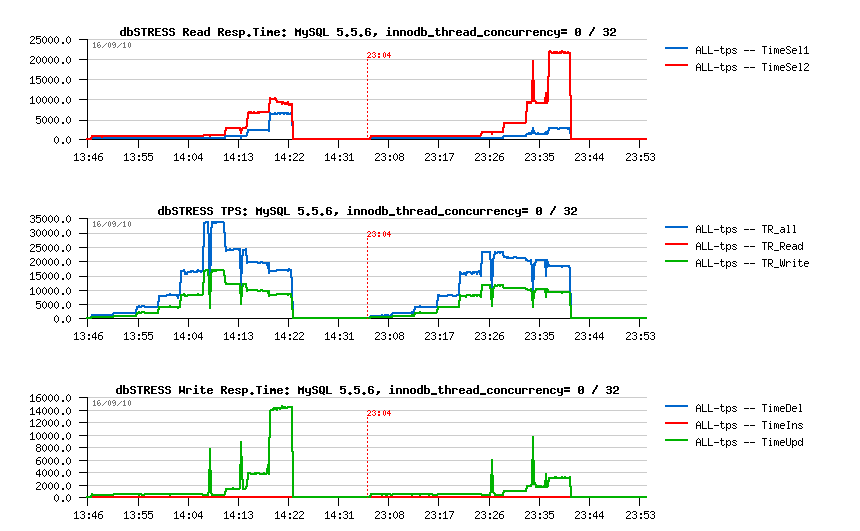

OLTP_RW 10M, concurrency = 0 / 64 :

Observations :

- left part of the graph is corresponding to the same test using innodb_thread_concurrency=0, and the right one = 64

- main bottlenecks here are on InnoDB "index lock" and "lock mutex"

- using InnoDB thread concurrency is still helping here! (result on 1024 users is near twice better)

- but index lock seems to be the most hot, so it lets expect that using partitioned table, or simple several tables should decrease this contention a lot..

Let's see:

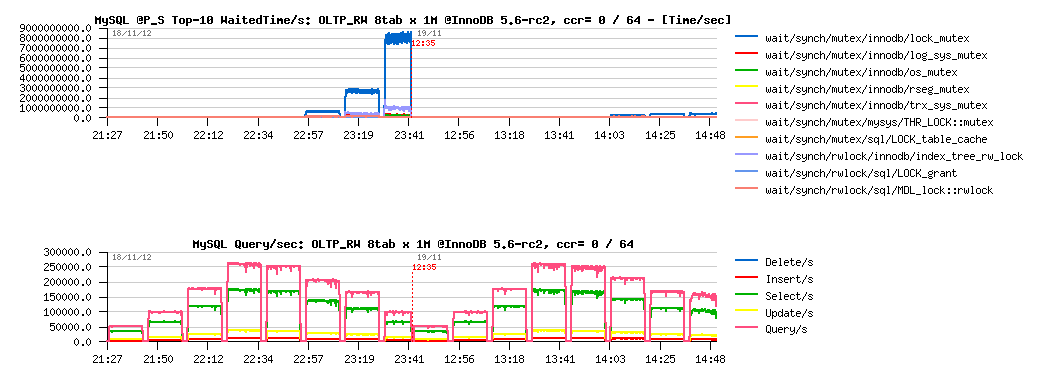

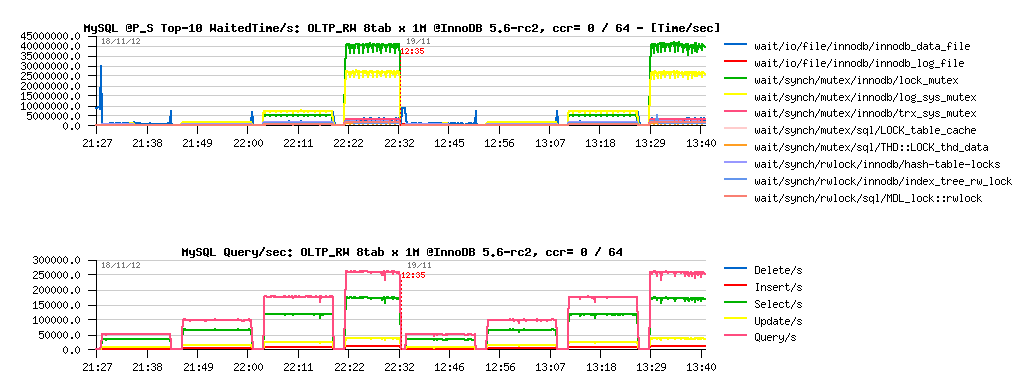

Test details:

- Sysbench OLTP_RW, 8 tables 1M records each, InnoDB engine

- workload is growing from 8, 16, 32 .. 1024 concurrent users

- innodb_thread_concurrency = 0 / 64

OLTP_RW 8-tables x 1M, ccr= 0 / 64 :

Observations :

- main contention is on the "lock mutex" only now, and as the result max QPS level is much higher too! (255K vs 210K QPS)

- but InnoDB thread concurrency is helping only on 1024 concurrent users load now.. interesting..

- and what exactly contention is blocking performance on 250K QPS?..

What is blocking us on 250K QPS ?..

Observations :

- main contentions are on the "lock mutex" and "log sys mutex"

- lock mutex contention is dominating..

- and the question is: why log mutex is getting a contention?.. - specially how high it's jumping from 32 to 64 users level..

- gives impression to be blocked by IO speed on REDO log writes.. - but my SSDs are yet far to be even fully busy% on that moment, so the problem is not really related to I/O... and well, it's not yet the main contention here, so..

Well, let's keep the 64 users workload as the "peak level", and focus on it while analyzing more heavy loads ;-))

Now: What will be changed if instead of 10M, I'll run the same load on the 100M database size?.. 200M? more?..

- first of all the volume (of course ;-)) -- and if your workload data set will no more feet the BP size, there will be more and more data reads involved, and finally the whole database activity may become I/O bound, and we'll no more test the MySQL performance, but the storage..

-

but supposing we have enough RAM and workload data set may still

remain cached and SQL queries are not depending on the volume (we're

still operating with the same amount of rows in read and write

operations within every query).. -- what will be changed then?

- reads related response time should remain the same (of course index trees will be much more bigger, need more operations in access, but as most of the data remaining cached (as we supposed) it should still go on the same or near the same speed)..

- while if your access pattern is completely random, writes will become much more wider and will touch much more different data pages!

- the query/sec (QPS) level may pretty remain the same, and as the results, the REDO logs writes speed too..

- however, to follow these REDO log writes will become more harder now, because if on a small data volume there is a lot of chances that by flushing one dirty page you'll match several REDO records corresponding to this page, while on a bigger volume - there is more and more chances that more pages should be flushed to cover the same amount of REDO records..

- to summarize: with a growing volume your dirty pages flushing activity will grow too!.. - which will demand to revisit your storage performance hosting data (while REDO writes will remain the same)..

- And the question then: Will be MySQL/InnoDB able to follow your storage capacity?..

Let's continue with "standard" Sysbench OLTP_RW test, but use now 100M and 200M data volumes.

My main interest is focused around the following test conditions:

- Read+Write workload with 64 concurrent users (user threads)

- InnoDB Buffer Pool: 64GB vs 16GB (to see the impact in each test case when the dataset is not fitting the BP size)

- InnoDB REDO log total size: 8GB vs 3GB (to see the impact between less and more aggressive flushing conditions)

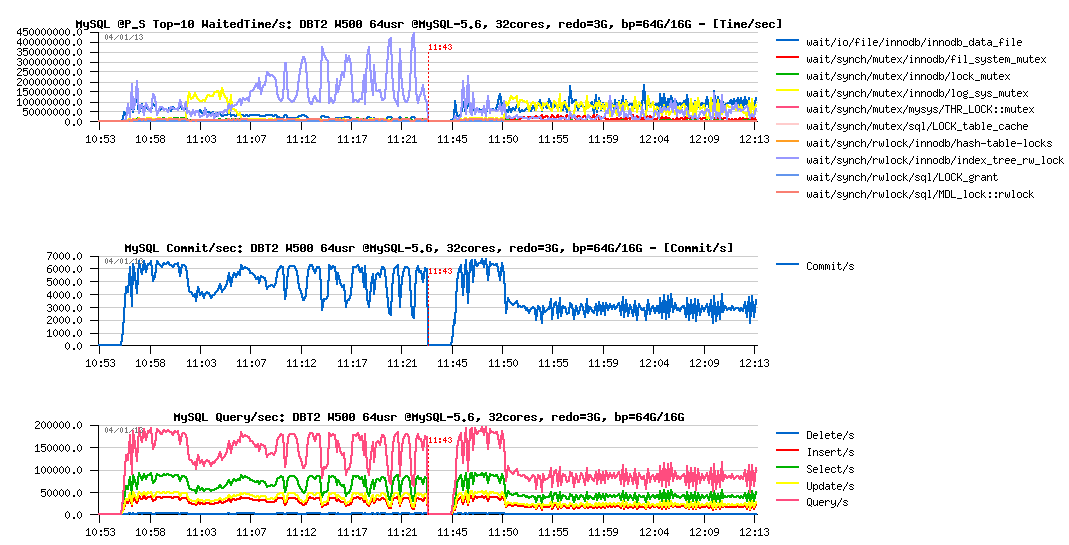

As usually, MySQL Performance Schema (PFS) will help here to analyze wait events + I/O waits too.

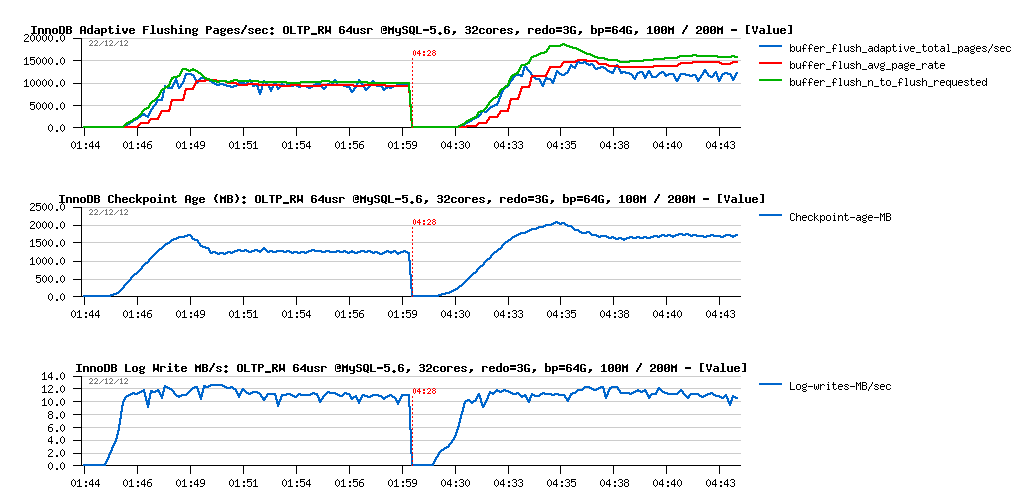

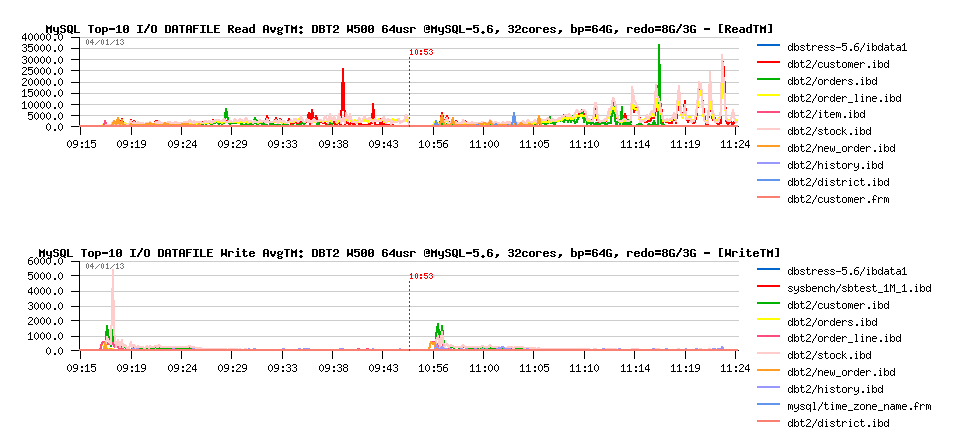

So, on OLTP_RW 10M it was enough to flush 1500-2000 pages/sec to keep the 200K QPS level. What will change on the 100M and 200M volumes within "good conditions" (BP=64GB and REDO=8GB):

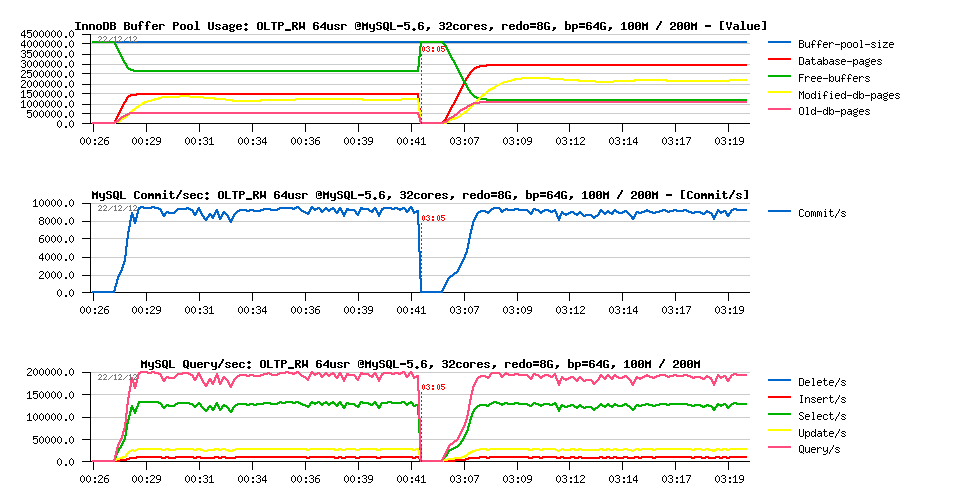

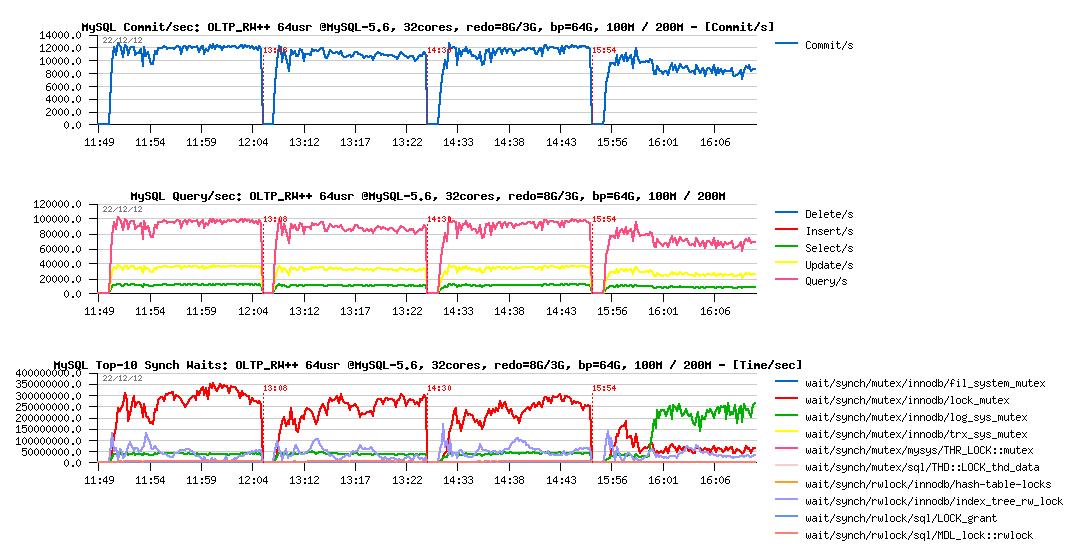

OLTP_RW 100M vs 200M, bp=64G, redo=8G :

Observations :

- On both test cases the same near 200K QPS level and over 9000 commit/sec

- and all data are fully cached within BP

Flushing activity :

Observations :

- dirty page flushing became stable on 6000 pages/sec on 100M, and 8500 pages/sec on 200M - and as you can see it's not twice higher because of a twice bigger data volume

- Checkpoint Age remains far from 7GB limit, but still lower on 100M vs 200M

- while REDO log write rate remains near the same for both cases..

Waits reported by PFS :

Observations :

- on the test start the I/O related waits are dominating (data reads)

- this also increasing index lock contention (due data reads), more higher on 200M

- then, main contention is moving to the lock_mutex (THR_LOCK::mutex wait can be ignored as it's only self-thread lock, not related to the concurrency)..

Let's reduce now the REDO log size to 3GB:

OLTP_RW 100M vs 200M, bp=64G, redo=3G :

Observations :

- performance is slightly lower now on 200M comparing to the 100M volume..

- while on 100M volume the result remaining the same as before

What about flushing?

Observations :

- nothing abnormal on REDO log write speed, remains similar and stable in both cases

- Checkpoint Age became stable pretty quickly and then remains under 2500MB critical level

- then on 100M test we're running with a stable 10K flushed pages/sec

- while on the 200M test - you may see that flushing is reaching 15K pages/sec first, and than running on around of 12K pages/sec

- and what is interesting within 200M case: the flush avg page rate (red line on the graph) is no more matching the real pages/sec level (blue line).. - why? -- in fact the "avg page rate" is calculated internally within Adaptive FLushing code regardless the real flushing page/sec rate and indicates what should happen according Adaptive Flushing expectations (all requested writes should be finished during expected time); so, when it's not happening, and we're observing a deviation like here, it means that storage I/O level is unable to follow the write requests coming from InnoDB..

- And, of course, the main question since then is it really because of the storage the flushing rate is unable to follow the requested speed, or there may be some other reasons as well?..

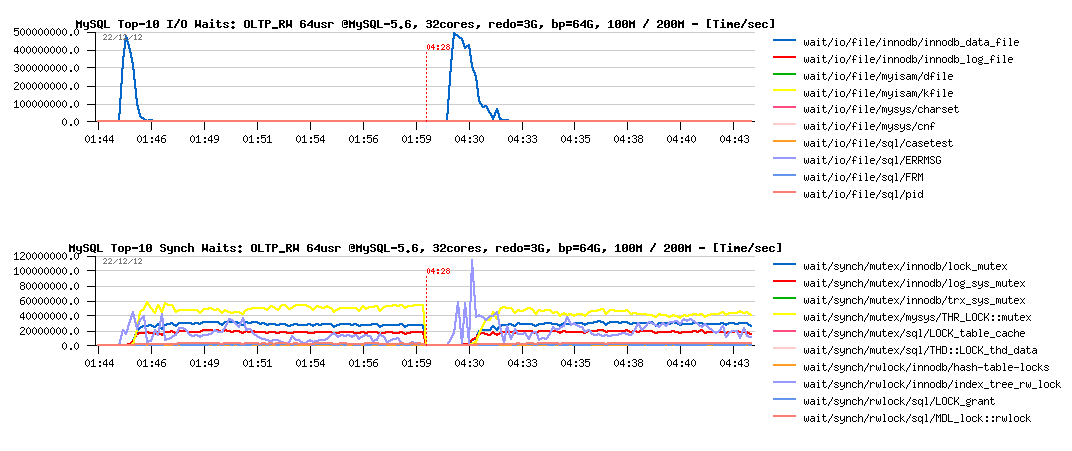

Let's check our wait events :

Observations :

- well, there is no really any difference between 100M and 200M test case..

- but probably it's more different from the previous test with 8GB REDO logs?..

More in depth with wait events, all 4 tests together :

Observations :

- hm.. all 4 tests are looking exactly the same..

- maybe we're simply missing some critical instrumentations here?

What is great the in MySQL 5.6 there are additional instrumentation counters available for InnoDB via its METRICS table (from where I'm getting data currently presented for flushing rate). Another interesting data to monitor from there is the number of pages which were scanned during flushing process! Let's compare scanned pages/sec levels during all these 4 tests:

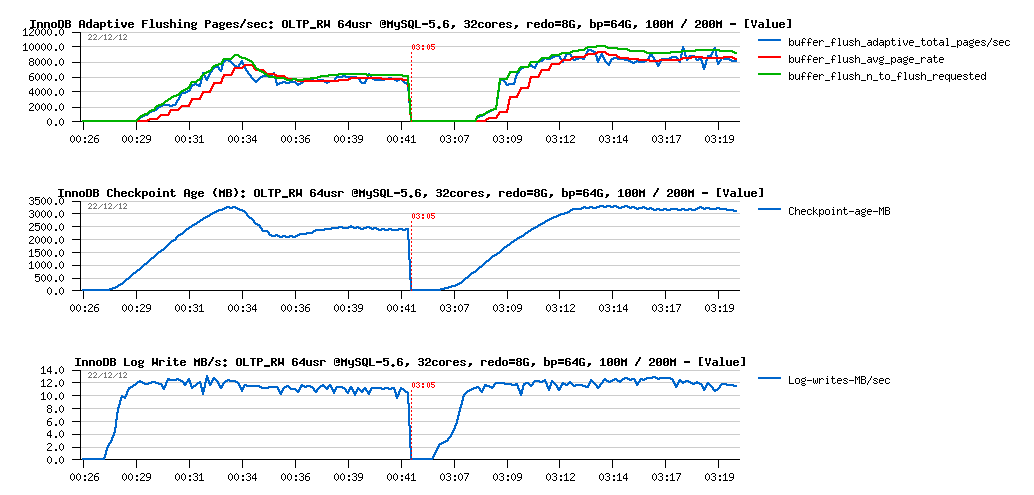

More in depth with flushing :

Observations :

- first of all you may see that we have a problem here: there is no reason to scan 200K pages/sec to flush only 5K pages/sec!..

- then, as you see, with a higher flushing level, yet more pages are scanned/sec, and this is simply wasting CPU time ;-)

- so, we have here the first problem to fix..

PROBLEM #1 : Too much pages scanned on flushing

- why it may happen?

- in fact this is related to the InnoDB design, and coming from a long date now: the flush list is supposed to be accessed concurrently, and in some situations the "previous" pointer in the list may become no more valid, so the flushing code should to rescan the list from the tail again to find next pages to flush..

- the fix is not trivial, and may require some in depth remastering of the code..

- but I've already tried to hack it little bit, and may confirm you we can remove this gap completely and save a lot of CPU cycles ;-)

- then, the real improvement will come once a parallel flushing will be implemented in 5.7 !..

But well, this is not the only problem ;-))

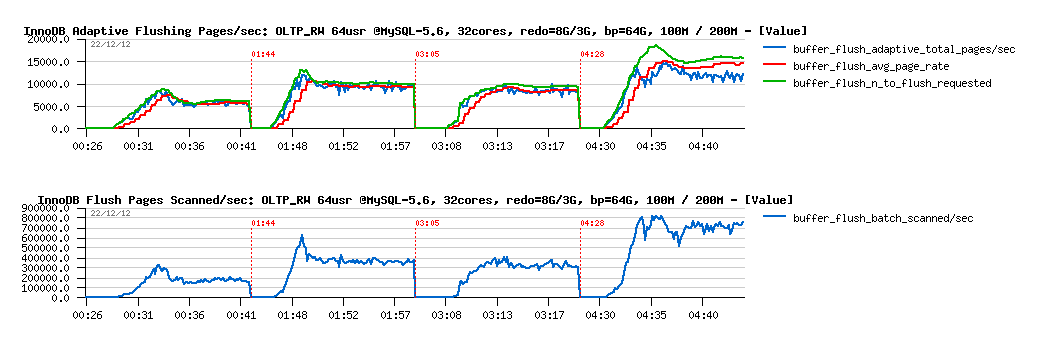

Let's see now what will happen if I'll change little bit test conditions to be more write aggressive on the same data volumes? -- I'll change Sysbench options now to keep only one "read" per RW transaction: only a single POINT-SELECT, this will completely change Read/Write operations ratio and involve much more writes than before. Let's call it OLTP_RW++ :-)

The following graphs are reflecting the changes.

and just to remind you about the order of tests on graphs from left to right:

- 100M, redo=8GB

- 100M, redo= 3GB

- 200M, redo= 8GB

- 200M, redo= 3GB

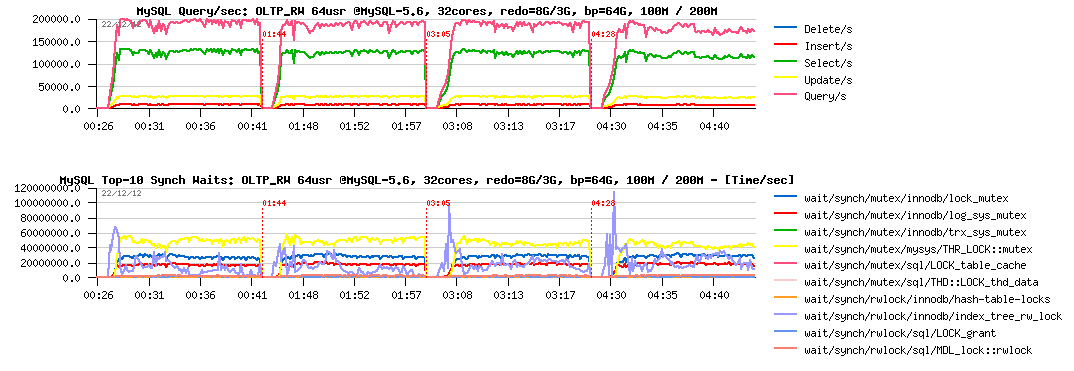

OLTP_RW++ 100M/200M, redo=8GB/3GB :

Observations :

- interesting that with 8GB REDO logs space both test cases (100M and 200M) having the same level of performance (and you may remark the higher number of commit/sec comparing to the previous tests, so even QPS is lower, we're writing much more now, see also the Updates/sec level)..

-

but with 3GB REDO logs things become more harder:

- 100M case seems to have a lower performance now

- and 200M performance is decreased even yet more and hitting log_sys_mutex contention (seems like flushing is not going fast enough to keep Checkpoint Age under critical level (2500M in this case), or REDO is hitting the read-on-write issue (verified: not hitting)

- interesting that on the 100M test case the levels of wait events are pretty similar

- however comparing to previous OLTP_RW tests, here the lock_mutex contention is really dominating

What about flushing?

Flushing details :

Observations :

- as expected, there is no problems on both volumes when REDO logs space is 8GB

- curiously the 10K pages/sec flushing level is looking here like the I/O border limit.. (while it's not really possible, as my SSD(s) are able to do more (verified before)..

-

then on 3GB REDO:

- 200M case is unable to follow the REDO writes and rising the 2500M limit, involving waits on a sync flush (as seen on the graph)

- 100M case is yet more interesting: we're fine with a flushing speed here, lock contentions times are not higher than with 8GB REDO, but performance is lower.. - why? - the answer is simple: each involved I/O is representing a cost + combined with lock_mutex contention resulting in performance decrease.. As well, I'm pretty sure that lock_mutex contention here is also having its effect on deviation of the flush_avg_page_rate, needs to be more investigated..

PROBLEM #2 : lock_mutex contention - Well, this one is just needed to be fixed ;-) (and I've also started this article by observing this contention - seems to be one of the next hot bottlenecks, depending, of course, on a workload).

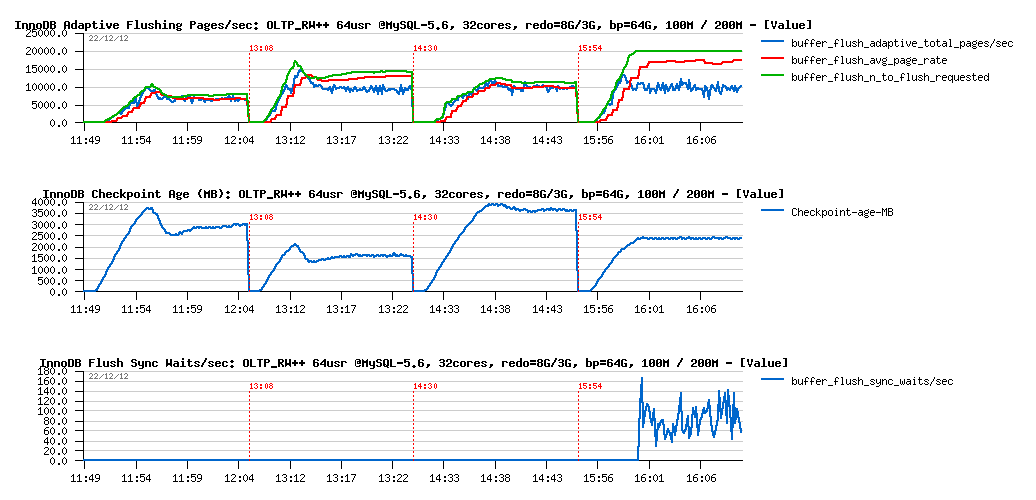

And what is changing when we're short in memory? and instead of 64GB may allow only 16GB Buffer Pool size?..

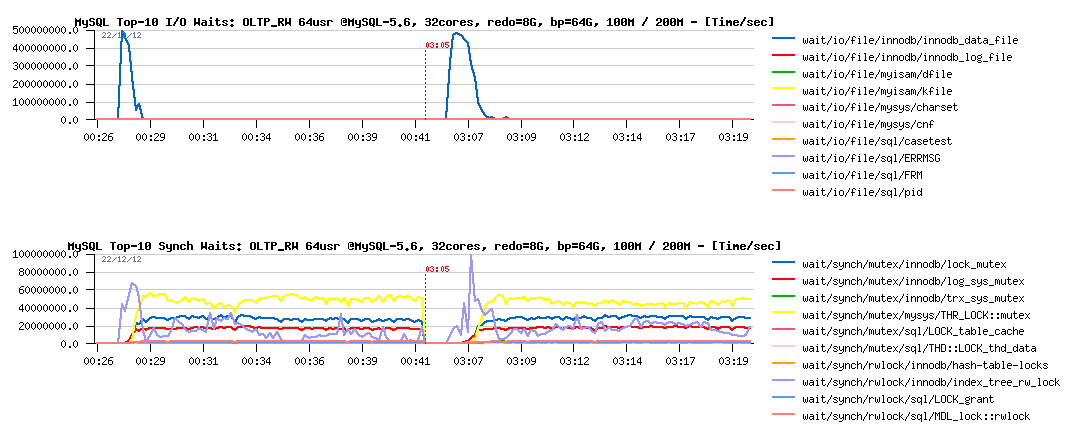

The next graphs are representing the following workloads:

- OLTP_RW 100M, pool=16GB, redo=3GB

- OLTP_RW 200M, pool=16GB, redo=3GB

- OLTP_RW++ 100M, pool=16GB, redo=3GB

- OLTP_RW++ 200M, pool=16GB, redo=3GB

all the following tests used 3GB REDO logs size, no need a bigger one, and you'll see why:

OLTP_RW and OLTP_RW++ with 16GB Buffer Pool :

Observations :

- Checkpoint Age remains very low within all of the tests, so even 2GB REDO was more than enough here ;-),

- however flushing activity is now including also "LRU flushing" (happening mainly when InnoDB is looking for a free page in the Buffer Pool and need to recycle the most less used ones, but they are appearing to be dirty, so should be flushed first)

- and as well, bigger data volume involving a more intensive LRU flushing

- keeping in mind a low Checkpoint Age, we may already suspect performance regression (lower REDO write rate may only mean lower transactions rate)..

So, what about performance:

Observations :

- performance is something like x5 times worse now..

- however PFS is explaining very well why it happens: the main wait time is spent on the data reads! (and as PFS is also reporting now FILE_IO events too, I was able to go more in detail and see that for the particular Sysbench data file the read service time was several milliseconds, while OS is reporting not more than 0.3ms - so, there is something to investigate for sure)

But well, this workload is exactly the case when only faster disks may help you or using/buying more RAM (as you saw, with 64GB pool the result is x5 times better, while finding here yet more faster disks than SSD would probably not help as much)..

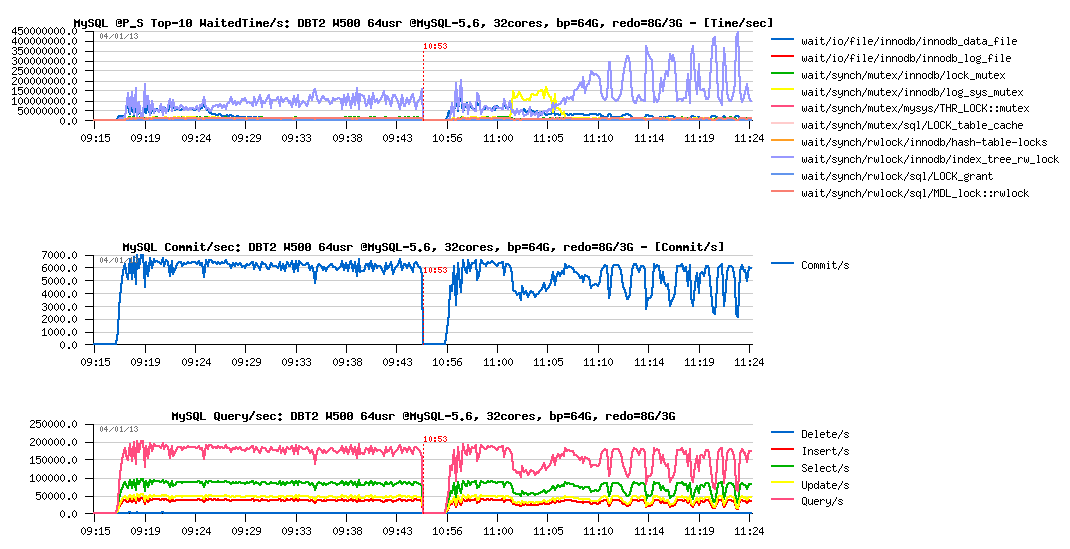

Then, to finish the story, let's replay something similar also on the DBT2 (very similar to TPCC-like) workload.

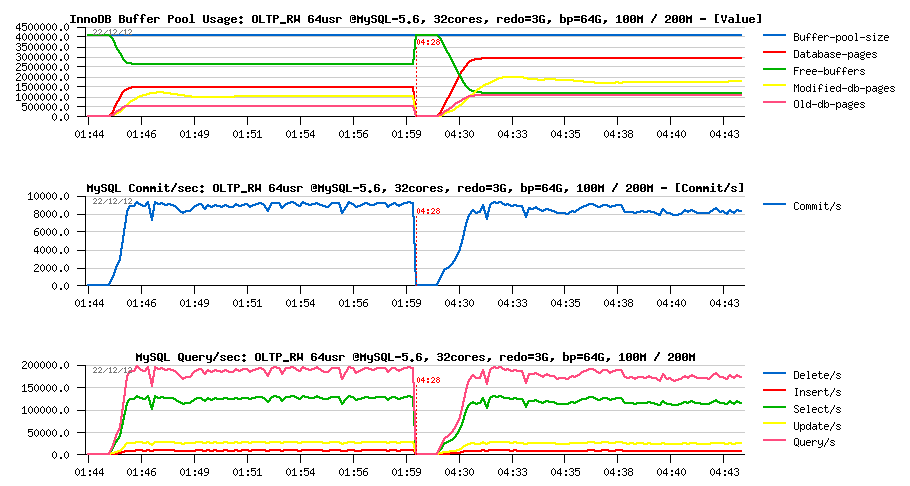

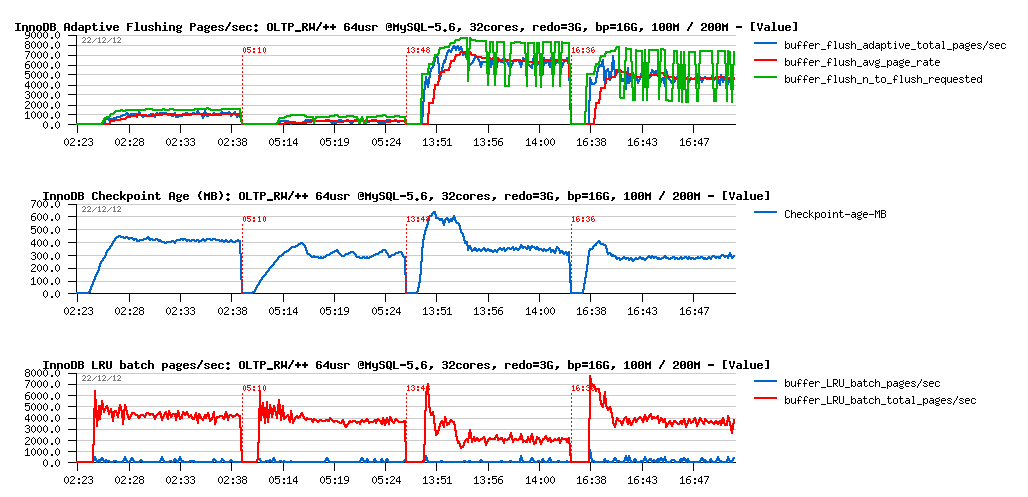

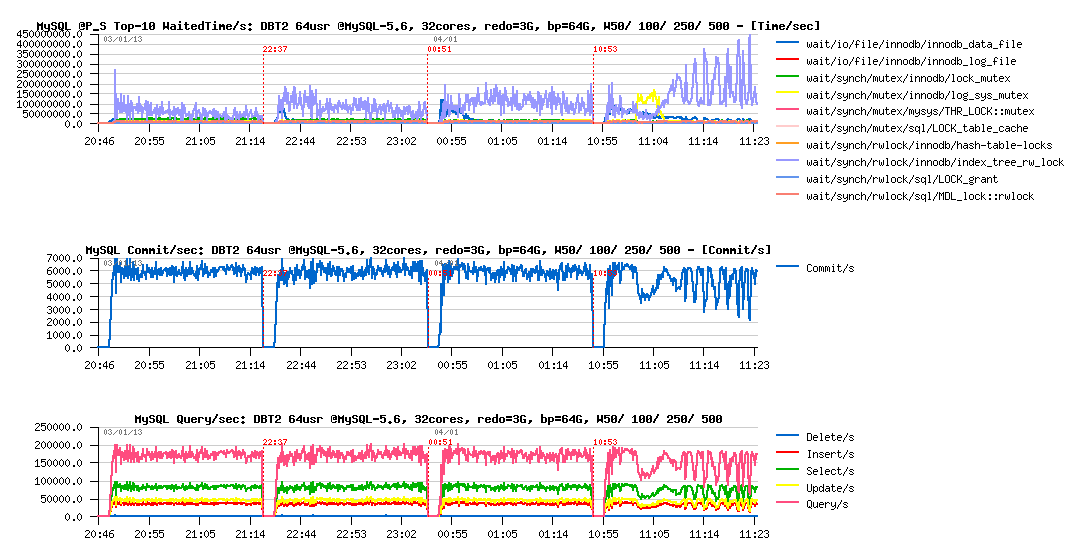

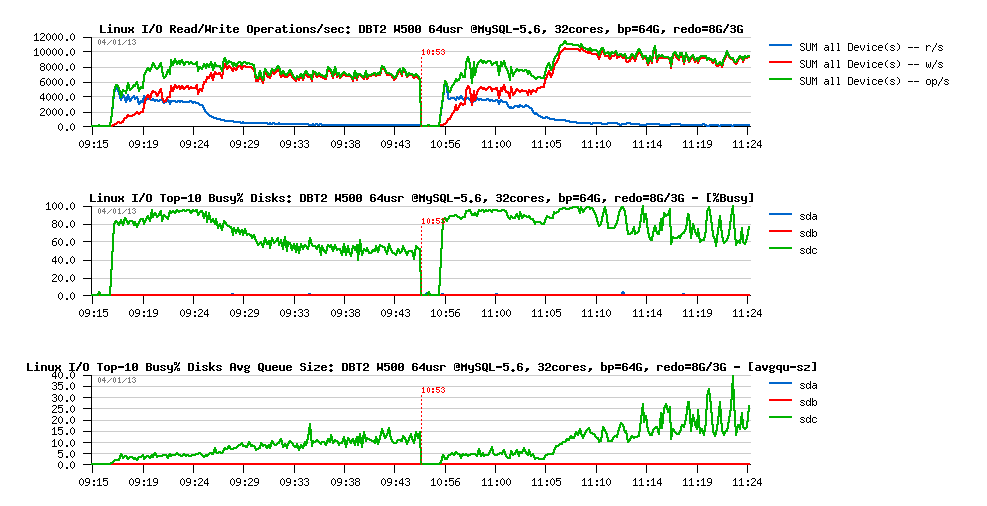

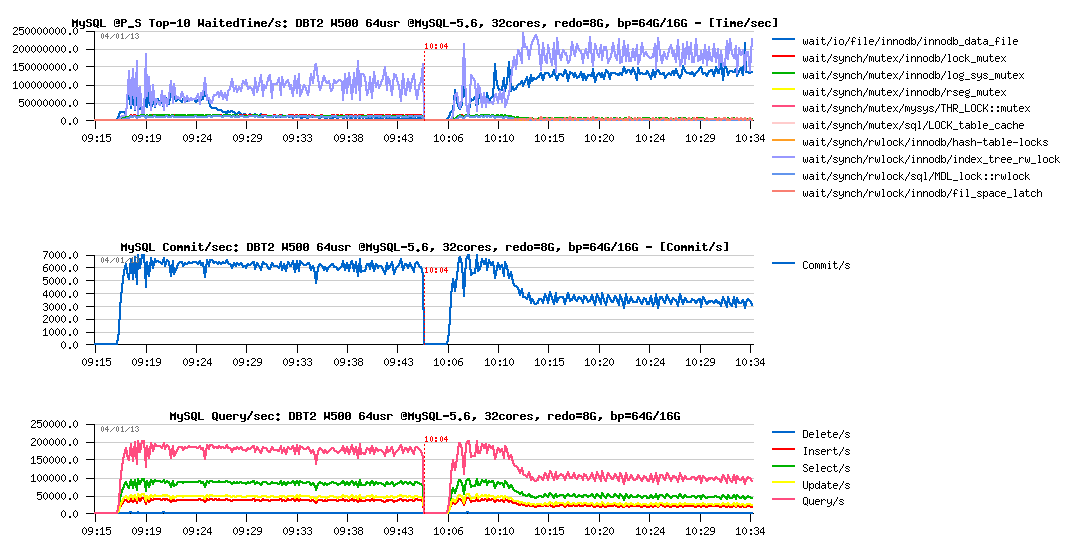

The next graphs are corresponding to the following test cases on DBT2 with 64 concurrent users:

- redo= 3GB, bp= 64GB, W50 (50 warehouses)

- redo= 3GB, bp= 64GB, W100

- redo= 3GB, bp= 64GB, W250

- redo= 3GB, bp= 64GB, W500

DBT2 redo=3GB, bp=64GB, W50/ W100/ W250/ W500 :

Observations :

- mutex lock contention is dominating here, and constantly growing with a bigger data volume

- up to W500 volume, performance is still remaining stable

-

but since W500:

- index lock contention seems to have a direct impact on QPS level

- looks like on the workload startup we're hitting a log_sys_mutex waits: seems flushing is not going fast enough, and Checkpoint Age is reaching the critical level, and the sync ("furious") flushing is involved (BTW, in 5.6 even "furious flushing" is not so furious as before ;-))

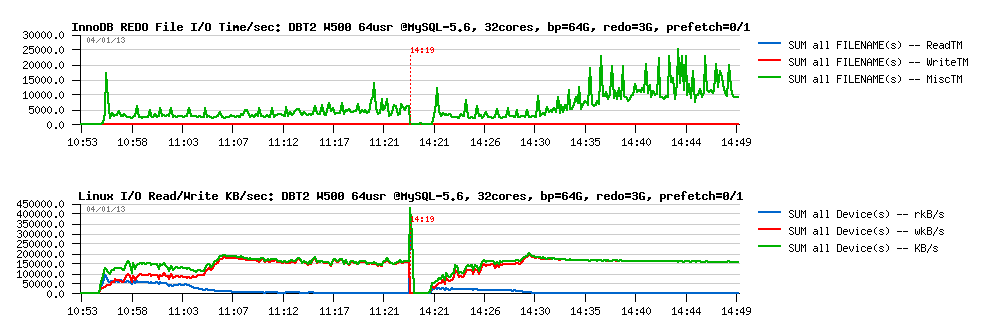

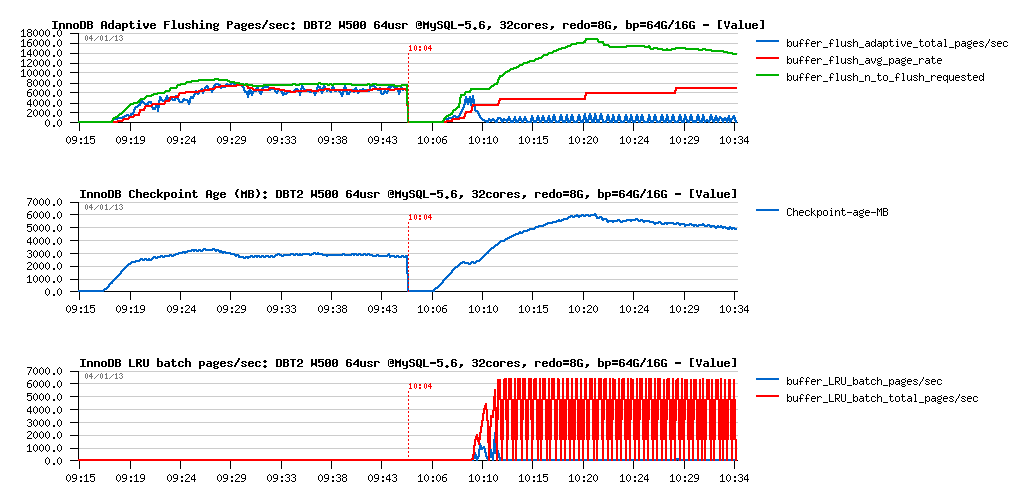

Let's get a look on the flashing activity now:

Observations :

- same here, up to W500 volume all things are looking pretty well..

-

and on W500 it becomes much more interesting:

- looks like on the beginning the flushing rate is stuck on 5K pages/sec level and don't willing to grow even we're requested up to 20K pages/sec to flush! (look on the green line with flush "requested" and blue line with adaptive total pages/sec )..

- which is leaving Checkpoint Age growing unprotectedly, reaching 2500M critical level, and involving sync flushing (and sync flushing waits)..

- once the critical period of startup is passed, flushing is reaching a stable 10K pages/sec rate (again 10K?? why 10K?? :-))

- no deviation then with avg page rate (while here too scanned pages/sec level is many times higher than flushing)

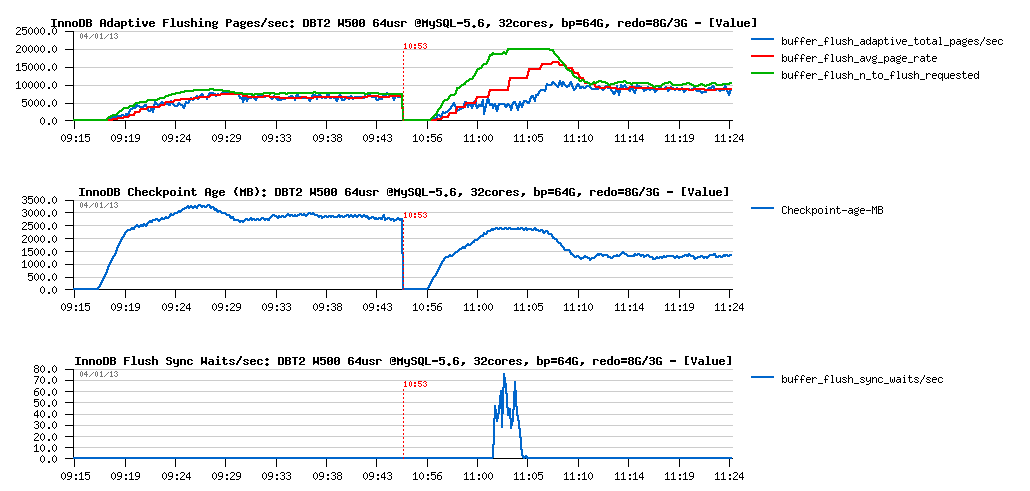

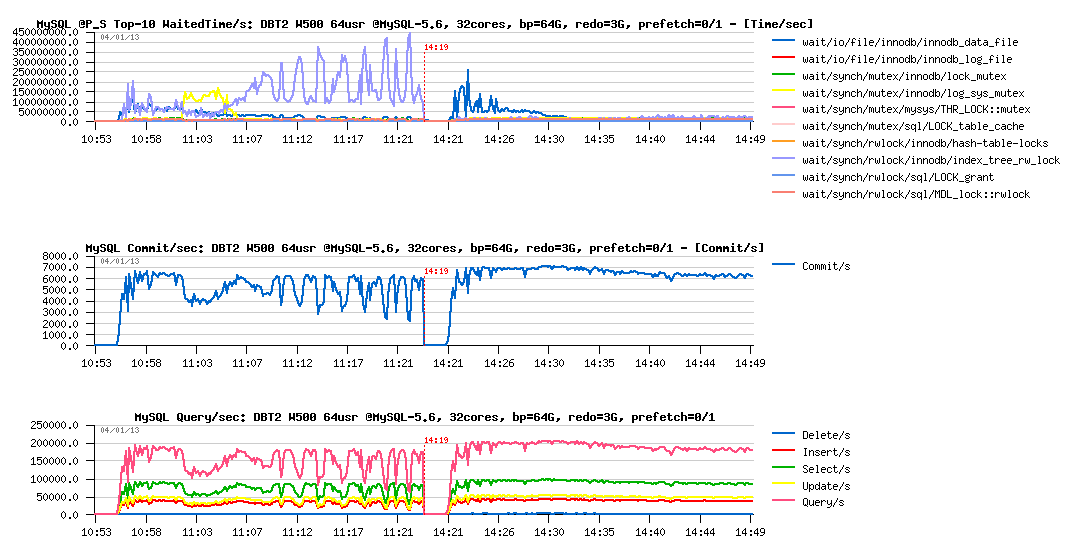

Let's focus more closely on the W500 case then.

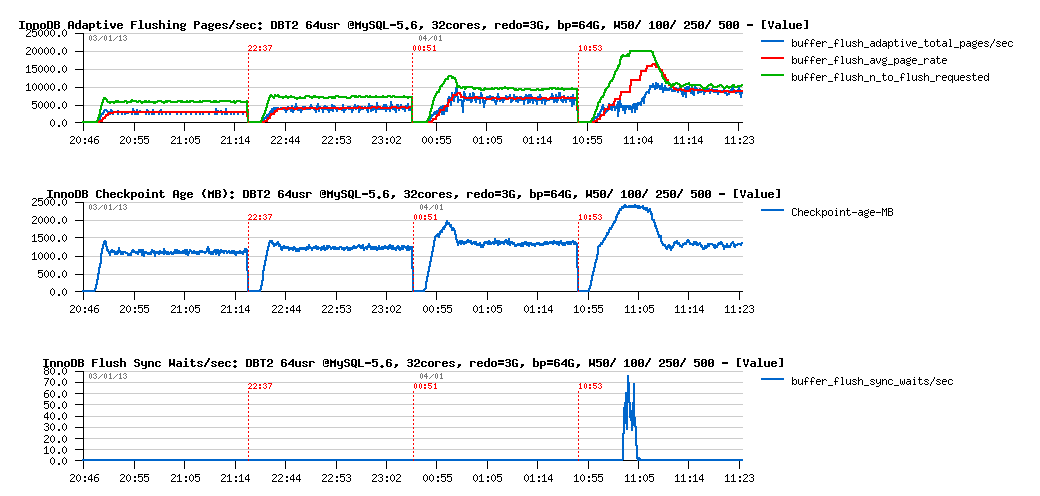

We may easily expect that with a bigger REDO log size we will avoid sync flushing waits at least, but will it protect the workload from the QPS "waves" due mutex lock contentions?..

DBT2 W500 bp=64GB, redo= 8GB vs 3GB :

Observations :

- there is no more QPS drops or "waves" when 8GB REDO logs size is used (left part of graphs)

- seems like it's very directly depending on the index lock contention..

- as well there is no more log_sys_mutex contention, as expected: 8GB REDO space is big enough here to leave more marge for flushing on the test startup (note: on W1000 or W2000 volume it may be not enough again, depends on contention and storage performance)..

What about flushing:

Observations :

- things are radically different with 8GB REDO here.. - flushing rate is remaining stable on the 7K pages/sec

- but well, if index lock contentions are workload related, then we should also observe them on the 8GB REDO test too, right?..

- the feeling I have that the "waves" we observed are related to some I/O related limits.. We're hitting here something..

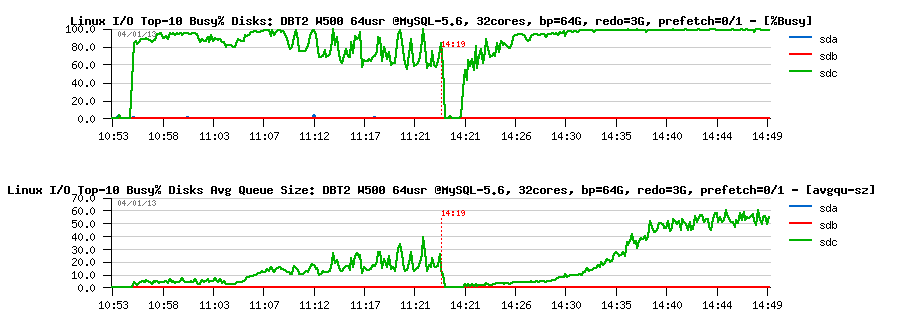

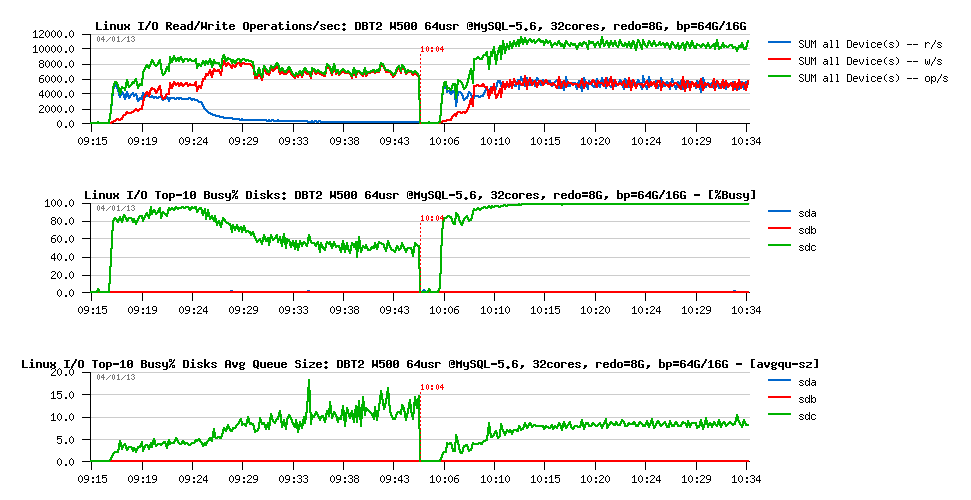

Let's get a look on the I/O operations stats during these both workloads:

Observations :

-

we can see here clearly here the 2 phases on each workload:

- startup, when most of pages are yet reading from the disk

- and then a stable phase when most of the data arrived to the Buffer Pool, and writing become the main I/O activity

- and what is interesting that the "waves" phase is coming during a "stable" period

- however, the level of I/O operations rate is remaining pretty constant and has no such "waves"..

- so, why the "waves" are coming?..

-

the answer is coming from the I/O queue graph:

- it has "waves" (avgqu-sz), which means the number of I/O operations queued per second is varying over a time

- but the number of executed I/O operations remains the same.. - what does it mean?

- it may mean only that only a part of I/O requests from the queue was executed on time, and some of them may wait longer!

- however, OS is reporting that avg I/O service time (response time) was stable and around 0.3ms.. - but probably it's only because of writes?..

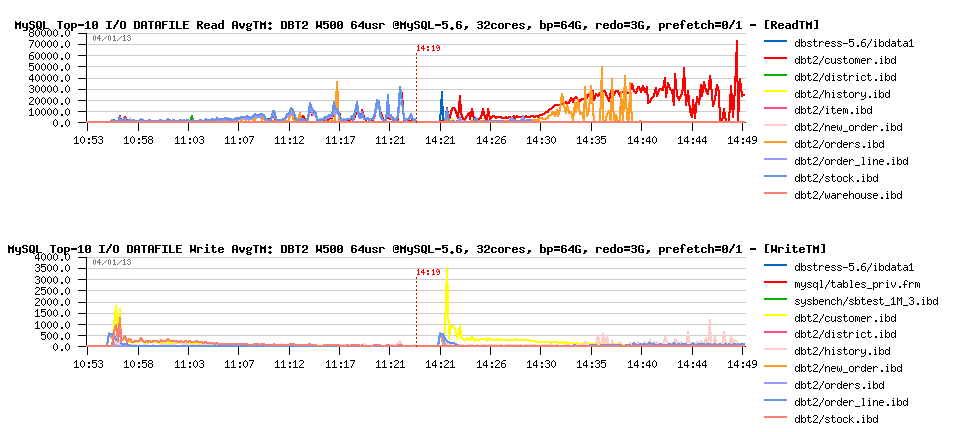

To understand if it's true or not, we may now get a help from MySQL Performance Schema (PFS) - remember that since MySQL 5.6 it reports now also various details about file I/O operations and times, and let's see it in action:

Observations :

- Bingo! if there is no any big waits on write operations, there are pretty important waits reported on reads!

- note that times are reported here in usec, means we have some spikes up to 35ms on reads... - hard to believe (specially when SSD is used), but cannot be ignored in any case (while probably a more detailed instrumentation is needed here to understand the problem better)..

However the question here is what is a main and what is a result event?

- do we have index lock "waves" because of the overloaded I/O queue?

- or because of index locks we're involving I/O requests in "burst" mode wave by wave?..

- there is something to dig here, and I'm impatient to see index lock just fixed (planned for MySQL 5.7) - it will resolve so many questions ;-))

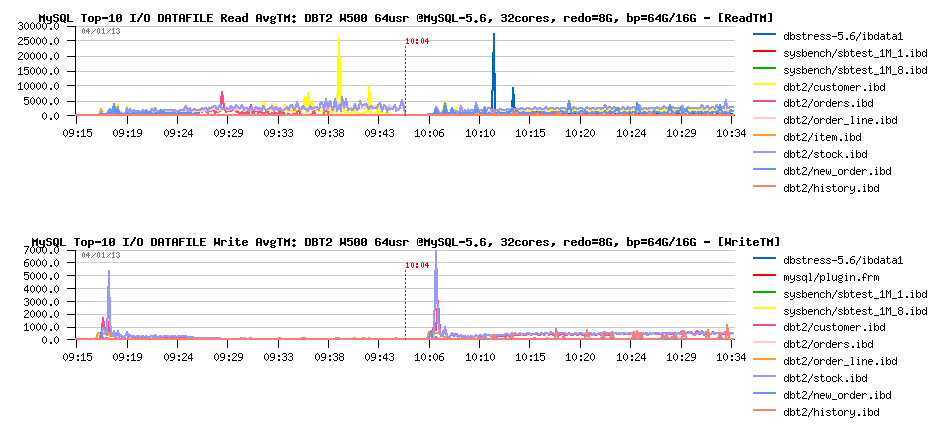

But well, what I can try now is simply to prefetch most of the data before the test workload is started and then see what will be changed:

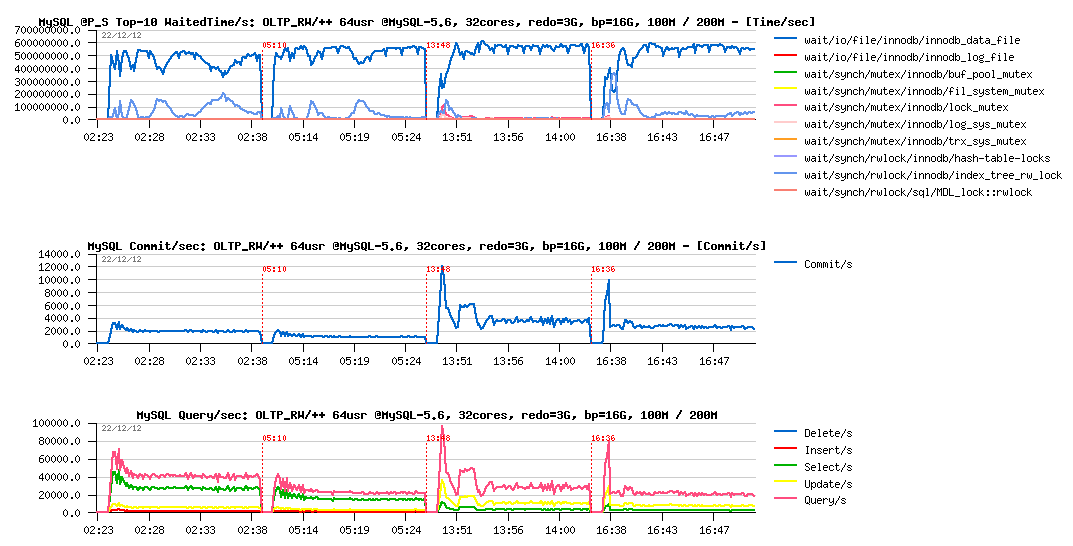

DBT2 W500 bp=64GB, redo=3GB, prefetch= off / on :

Observations :

- wow! did not expect it'll become so fun ;-)

- QPS level is just stable like never!

- performance is better (you may see that we out-passed 7000 commit/sec)

- and what is completely incredible: index lock waits are disappeared!.. - how it's even possible?..

Let's get a look on the flushing now:

Observations :

- another surprise: flush rate is the same 10K pages/sec (again 10K? ;-), but avg page rate is deviating now..

- the flush scanned pages/sec rate increased from 300K to 400K, but may 100K more scanned pages/sec explain deviation?

- seems to me until we'll not get it fixed, we'll not have an answer..

- or maybe is nome load conditions 10K random writes/sec become a real limit of the I/O level?.. (while on pure I/O test I've observed much more)

What about I/O stats?

Observations :

- looks like my SSD(s) are fully occupied now (sdc - is /dev/sdc device corresponding to my RAID-0 from x3 SSD LUN)

- while I/O queue is out-passed 50 I/O operations and not jumping anymore..

Did we increase some I/O waits reported by PFS?

Observations :

- wow! avg read time is increased a lot on some of the data files!

- does it explain disappearing of the index lock contentions? (slower reads making less concurrency?) - very interesting ;-)

- seems to me here too a proper fix for index lock contention will really help a lot!

And what PFS is saying about I/O waits on the REDO log files?

Observations :

- wow! another surprise: we're spending more "Misc" time now on REDO logs I/O (I suppose it's about fsync(), but PFS currently grouping all non-read and non-write times under "Misc" time, so will be great to see them separately (at least fsync(), and may be some other?)

- interesting that I/O activity did not change.. -- do we really reaching the I/O limit? -- I still have impression that wait are coming not because we're hitting the I/O limitations.. - seems to me I have to trace the wait events yet more in depth..

PROBLEM #3 : index lock contention - this is not a new one either, very long dated, and the fix is pretty complex.. - so it was too short to get it ready for MySQL 5.6 timeframe. But once we'll remove this contention, I hope it'll clarify a lot of things ;-)

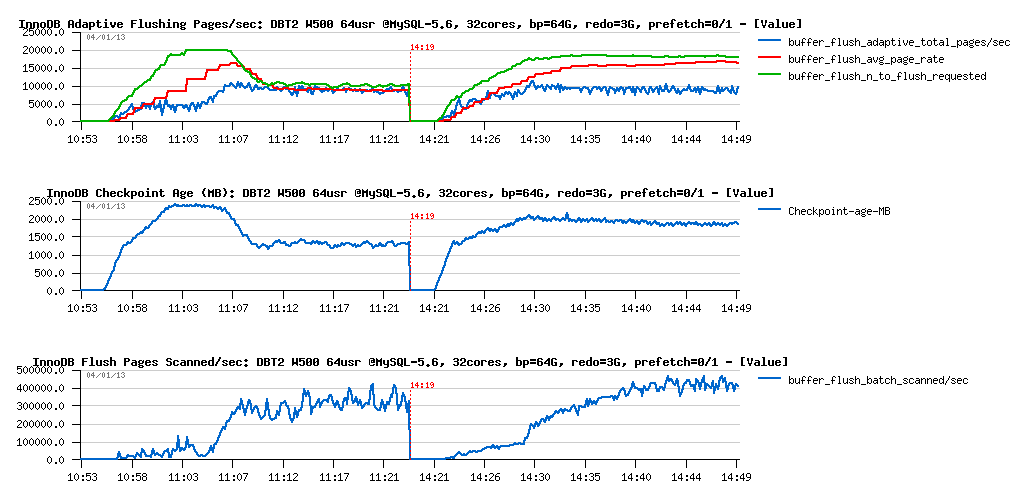

Well, let's not forget the test case when Buffer Pool was reduced from 64GB to 16GB. What we'll have on DBT2 W500?

DBT2 W500 redo= 3GB, bp= 64GB / 16GB :

Observations :

- ok, performance is x2 times worse (but only x2 times, so better than on Sysbench, once again confirms that regression depends on a workload)..

- however, the REDO log size is really small here, as over all test duration the log_sys_mutex contention is on the top waits position..

Better to replay it with 8GB REDO:

DBT2 W500 redo= 8GB, bp= 64GB / 16GB :

Observations :

- still x2 times performance regression

- but the main wait times now moved to the index lock contention and InnoDB data file reads (and seems to me each one depending on each other)

Flushing activity:

Observations :

- as expected, LRU flushing became dominating

- Checkpoint Age remaining stable, yet under critical level, so 8GB for REDO was enough

But well, looks like adding constant data reads now having the most important impact on performance regression:

However PFS is not reporting any important FILE IO waits:

So, looks like the I/O operations are less important here rather an overall lock contentions and LRU flushing design.. - and we definitively have something to do here (probably also implement a parallel flushing from LRU as for flushing list, or redesign it more optimally, let's see ;-))

NOTE: since MySQL 5.6 there is an option in InnoDB to tune the LRU scan depth (innodb_lru_scan_depth) to improve LRU flushing and make user thread waits mush shorter, but in the current test case it did not help due very intensive I/O reads..

INSTEAD OF SUMMARY

- While there is some mystery flying around current RW limits in MySQL, the one thing you can be sure: if your InnoDB "buffer_flush_avg_rate" constantly out-passing your "buffer_flush_adaptive_total_pages/sec" then you're hitting some limits on your dirty pages flushing!

- how to see it? - simple SELECT from InnoDB METRICS table (the avg rate is showing the current value, while the total pages is incremental, so you'll just need to do some simple maths to get pages/sec from here, probably I should write an article one day how I'm analyzing all this stuff, as every few days somebody is asking..)

- also, keep in mind that you may always reduce your flushing lag by increasing REDO logs size (while, of course, having too big REDO logs is not the only solution - just until we'll get it fixed ;-))

- then, if you're observing "lock mutex" or "index lock" (or other) contentions during your test or production workloads - please, bring us your details, it'll help us to fix them in the most optimal way (and not keeping in mind only our test cases)..

- well, MySQL 5.6 has already a lot of performance improvement, but yet more are waiting for us again to be done..

- some problems are known today, some new one yet will come (and I'm pretty sure Vadim and Peter will bring us yet more of them ;-)

- some will be fixed in MySQL 5.6, some will be fixed only in 5.7..

- but well, be sure we're taking all of them seriously, and working on them..

- so, stay tuned ;-)

to be continued...

Rgds,

-Dimitri

Friday, 16 November, 2012

MySQL Performance: InnoDB vs MyISAM in 5.6

Since the latest changes made recently within InnoDB code (MySQL 5.6) to

improve OLTP Read-Only performance + support of full text search (FTS),

I was curious to compare it now with MyISAM..

While there was no

doubt that using MyISAM as a storage engine for a heavy RW workloads may

become very quickly problematic due its table locking on write design,

the Read-Only workloads were still remaining favorable for MyISAM due

it's extreme simplicity in data management (no transaction read views

overhead, etc.), and specially when FTS was required, where MyISAM until

now was the only MySQL engine capable to cover this need.. But then FTS

came into InnoDB, and the open question for me is now: is there still

any reason to use MyISAM for RO OLTP or FTS wokloads from performance

point of view, or InnoDB may now cover this stuff as well..

For

my test I will use:

- Sysbench for OLTP RO workloads

- for FTS - slightly remastered test case with "OHSUMED" data set (freely available on Internet)

- All the tests are executed on the 32cores Linux box

- As due internal MySQL / InnoDB / MyISAM contentions some workloads may give a better results if MySQL is running within a less CPU cores, I've used Linux "taskset" to bind mysqld process to a fixed number of cores (32, 24, 16, 8, 4)

Let's get a look on the FTS performance first.

The OHSUMED test contains a less than 1GB data set and 30 FTS similar queries, different only by the key value they are using. However not every query is returning the same number of rows, so to keep the avg load more comparable between different tests, I'm executing the queries in a loop rather to involve them randomly.

The schema is the following:

CREATE TABLE `ohsumed_innodb` ( `docid` int(11) NOT NULL, `content` text, PRIMARY KEY (`docid`) ) ENGINE=InnoDB DEFAULT CHARSET=latin1; CREATE TABLE `ohsumed_myisam` ( `docid` int(11) NOT NULL, `content` text, PRIMARY KEY (`docid`) ) ENGINE=MyISAM DEFAULT CHARSET=latin1; alter table ohsumed_innodb add fulltext index ohsumed_innodb_fts(content); alter table ohsumed_myisam add fulltext index ohsumed_myisam_fts(content);

And the FTS query is looking like this:

SQL> SELECT count(*) as cnt FROM $(Table) WHERE match(content) against( '$(Word)' );

The $(Table) and $(Word) variables are replaced on fly during the test

depending which table (innoDB or MyISAM) and which key word is used

during the given query.

And there are 30 key words, each one

bringing the following number of records in the query result:

------------------------------------------------------------ Table: ohsumed_innodb ------------------------------------------------------------ 1. Pietersz : 6 2. REPORTS : 4011 3. Shvero : 4 4. Couret : 2 5. eburnated : 1 6. Fison : 1 7. Grahovac : 1 8. Hylorin : 1 9. functionalized : 4 10. phase : 6676 11. Meyers : 157 12. Lecso : 0 13. Tsukamoto : 34 14. Smogorzewski : 5 15. Favaro : 1 16. Germall : 1 17. microliter : 170 18. peroxy : 5 19. Krakuer : 1 20. APTTL : 2 21. jejuni : 60 22. Heilbrun : 9 23. athletes : 412 24. Odensten : 4 25. anticomplement : 5 26. Beria : 1 27. coliplay : 1 28. Earlier : 2900 29. Gintere : 0 30. Abdelhamid : 4 ------------------------------------------------------------

Results are exactly the same for MyISAM and InnoDB, while the response

times are not. Let's go in details now.

FTS : InnoDB vs MyISAM

The following graphs are representing the results obtained with:

- MySQL is running on 32, 24, 16, 8, 4 cores

- Same FTS queries are executed non-stop in a loop by 1, 2, 4, .. 256 concurrent users

- So, the first part of graphs is representing 1-256 users test on 32 cores

- The second one the same, but on 24 cores, and so on..

- On the first graph, once again, Performance Schema (PFS) is helping us to understand internal bottlenecks - you'll see the wait events reported by PFS

- And query/sec (QPS) reported by MySQL on the second one

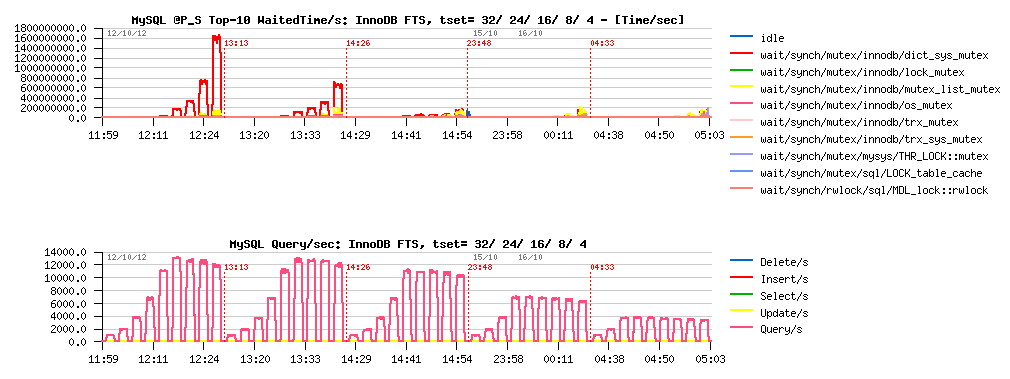

InnoDB FTS :

Observations :

- InnoDB FTS is scaling well from 4 to 16 cores, then performance is only slightly increased due contention on the dictionary mutex..

- However, there is no regression up to 32 cores, and performance continues to increase

- The best result is 13000 QPS on 24 or 32 cores

MyISAM FTS :

Observations :

- MyISAM FTS is scaling only from 4 to 8 cores, and then drop in regression with more cores..

- The main contention is on the LOCK_status mutex

- The best result is 3900 QPS on 8 cores

What about this LOCK_status mutex contention?.. - it gives an impression of a killing bottleneck, and if was resolved, would give an expectation to see MyISAM scale much more high and maybe see 16000 QPS on 32 cores?..

Well, I'd prefere a real result rather an expectation here ;-) So, I've opened MyISAM source code and seek for the LOCK_status mutex usage. In fact this mutex is mainly used to protect table status and other counters. Sure this code can be implemented better to avoid any blocking on counters at all. But my goal here is just to validate the potential impact of potential fix -- supposing there is no more contention on this mutex, what kind of the result may we expect then??

So, I've compiled an experimental MySQL binary having call to LOCK_status mutex commented within MyISAM code, and here is the result:

MyISAM-noLock FTS :

Observations :

- LOCK_status contention is gone

- But its place is taken now by data file read waits... - keeping in mind that all data are already in the file system cache...

- So, the result is slightly better, but data file contention is killing scalability

- Seems like absence of its own cache buffer for data is the main show-stopper for MyISAM here (while FTS index is well cached and key buffer is bigger than enough)..

- The best result now is 4050 QPS still obtained on 8 cores

-

NOTE :

- using mmap() (myisam_use_mmap=1) did not help here, and yet added MyISAM mmap_lock contention

- interesting that during this RO test performance on MyISAM was better when XFS was used and worse on EXT4 (just thinking about another point in XFS vs EXT4 discussion for MySQL) -- particularly curious because whole data set was cached by the filesystem..

So far:

- InnoDB FTS is at least x3 times faster on this test vs MyISAM

- As well x1.5 times faster on 8 cores where MyISAM shows its top result, and x2 times faster on 4cores too..

- And once dictionary mutex lock contention will be fixed, InnoDB FTS performance will be yet better!

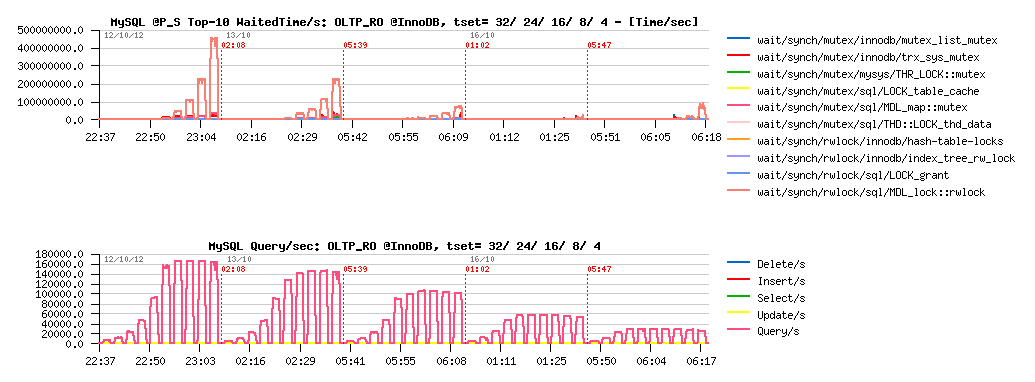

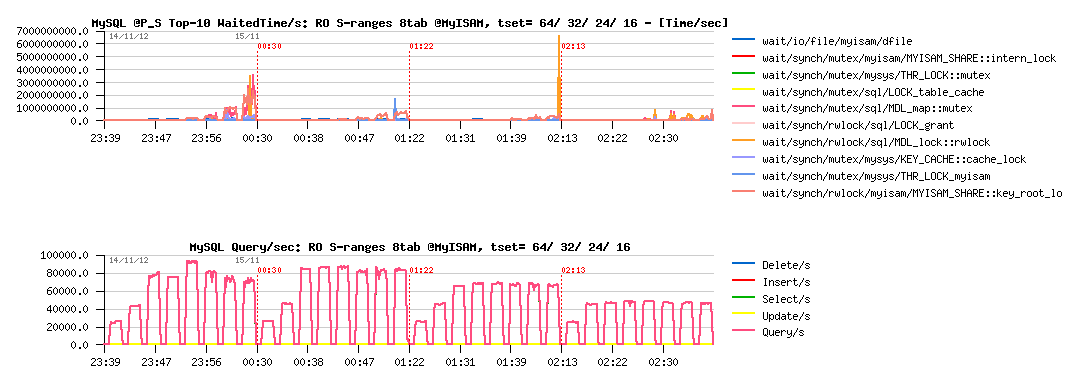

OLTP Read-Only : InnoDB vs MyISAM

As a start point, I've used "classic" Sysbench OLTP workloads, which are accessing a single table in a database. Single table access is not favorable for MyISAM, so I will even not comment each result, will just note that:

- the main bottleneck in MyISAM during this test is on the "key_root_lock" and "cache_lock" mutex

- if I understood well, the solution to fix "cache_lock" contention in such a workload was proposed with cache segments in MariaDB

- however, it may work only in the POINT SELECTS test (where cache_lock contention is the main bottleneck)

- while in all other tests the "key_root_lock" contention is dominating and for the moment remains not fixed..

- using partitioned table + having per partition key buffer should help here MyISAM, but I'll simply use several tables in the next tests

- InnoDB performance is only limited by MDL locks (MySQL layer), so expected to be yet better once MDL code will be improved

- in the following tests InnoDB is x3-6 times faster than MyISAM..

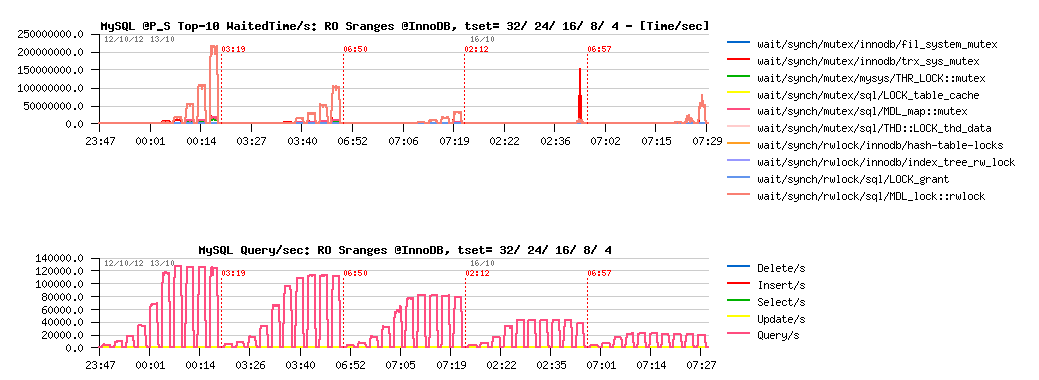

Sysbench OLTP_RO @InnoDB :

Sysbench OLTP_RO @MyISAM :

Sysbench Simple-Ranges @InnoDB :

Sysbench Simple-Ranges @MyISAM :

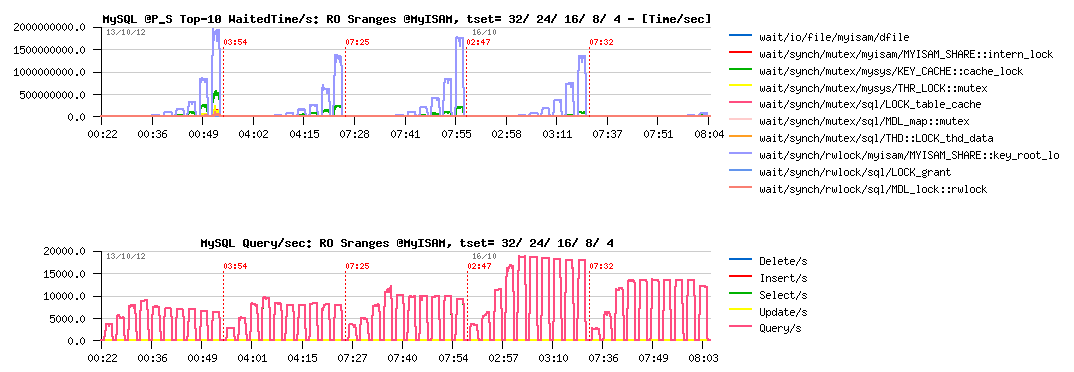

Sysbench Point-Selects @InnoDB :

Sysbench Point-Selects @MyISAM :

OLTP Read-Only with 8 tables : InnoDB vs MyISAM

Test with 8 tables become much more interesting, as it'll dramatically lower key_root_lock contention in MyISAM, and MDL contentions as well. However, we're hitting in MyISAM the key cache mutex contention, so there are 8 key buffers used (one per table) to avoid it. Then, scalability is pretty good on all these tests, so I'm limiting test cases to 64, 32, 24 and 16 cores (64 - means 32cores with both threads enabled (HT)). As well, concurrent users are starting from 8 to use all 8 tables at time.

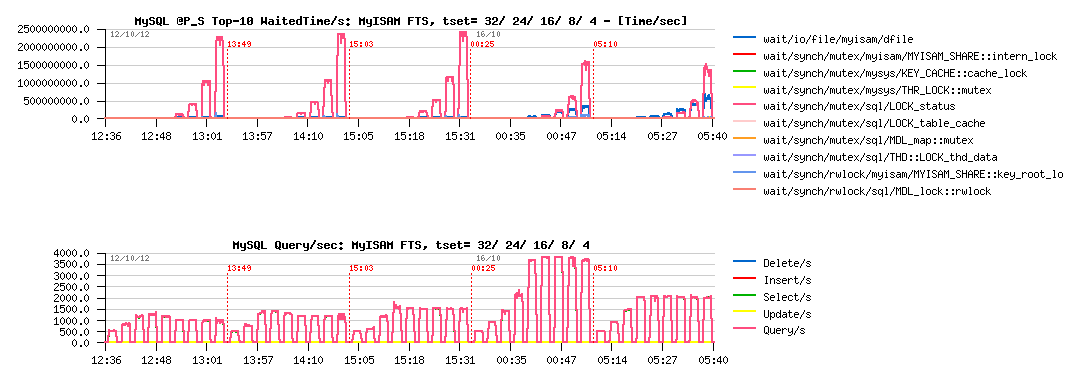

Let's get a look on OLTP_RO workload first :

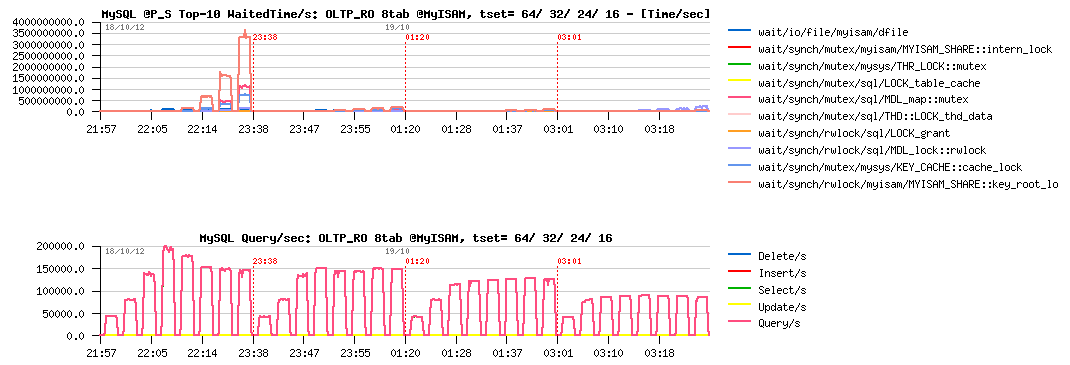

Sysbench OLTP_RO 8-tables @InnoDB :

Sysbench OLTP_RO 8-tables @MyISAM :

Observations :

- InnoDB is still better on OLTP_RO than MyISAM..

- for InnoDB, the main bottleneck seems to be on the MDL related part

- for MyISAM - key_root_lock is still here (not as much as before, but still blocking)

- InnoDB is reaching 215K QPS max, and MyISAM 200K QPS

- As you see, speed-up is very significant for both storage engines when activity is not focused on a single table..

And to finish with this workload, let me present you the "most curious" case ;-) -- this test is getting a profit from the fact that within auto-commit mode MySQL code is opening and closing table(s) on every query, while if BEGIN / END transactions statements are used, table(s) are opened since BEGIN and closed only at the END statement, and as OLTP_RO "transaction" contains several queries, this is giving a pretty visible speep-up! Which is even visible on MyISAM tables as well ;-)

So, I'm just turning transactions option "on" within Sysbench OLTP_RO:

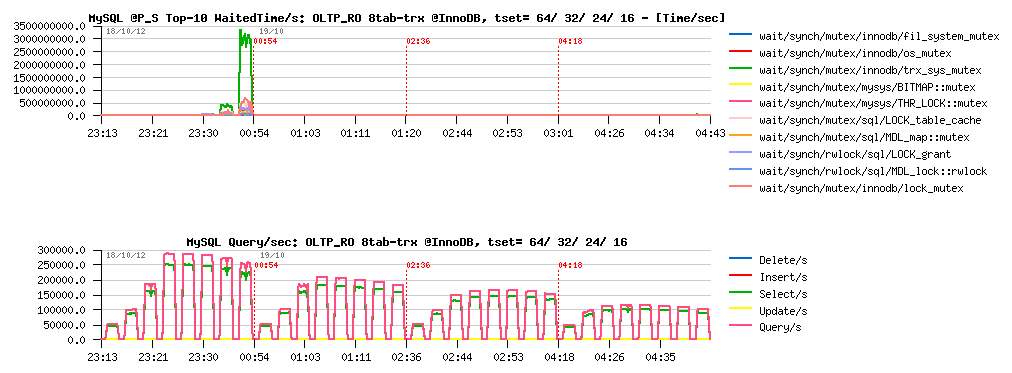

Sysbench OLTP_RO 8-tables TRX=on @InnoDB :

Sysbench OLTP_RO 8-tables TRX=on @MyISAM :

Observations :

- InnoDB is going from 215K to 250K QPS

- MyISAM is going from 200K to 220K QPS

- there is definitively something to do with it.. ;-))

Now, what about SIMPLE-RANGES workload?

Sysbench RO Simple-Ranges 8-tables @InnoDB :

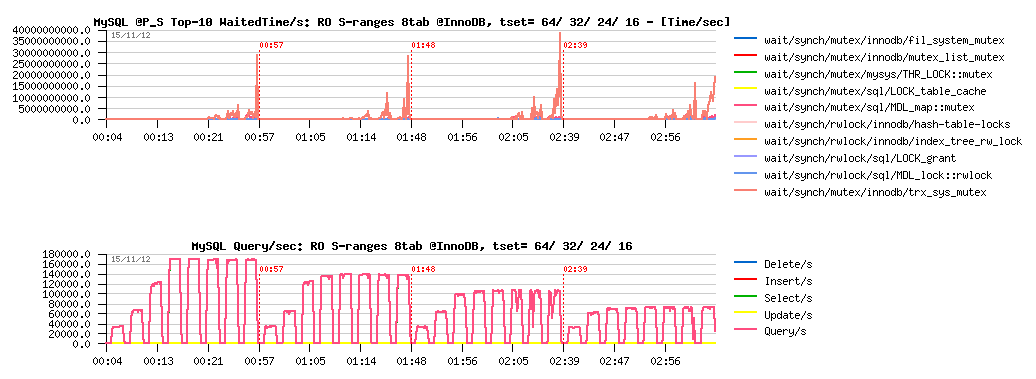

Sysbench RO Simple-Ranges 8-tables @MyISAM :

Observations :

- InnoDB is reaching 170K QPS here, mainly blocked by MDL related stuff..

- MyISAM is getting only 95K QPS max, seems to be limited by key_root_lock contention..

So far, InnoDB won over MyISAM on every presented test cases until here.

But get a look now on one case where MyISAM is still better..

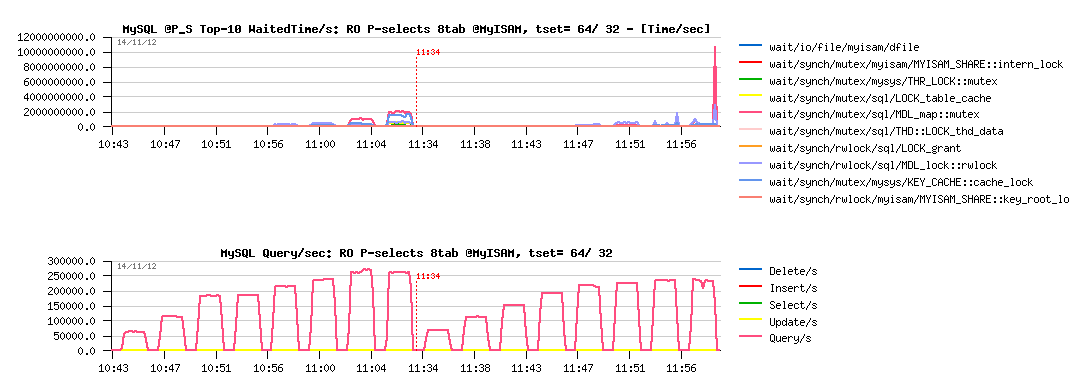

POINT-SELECTS WITH 8 TABLES

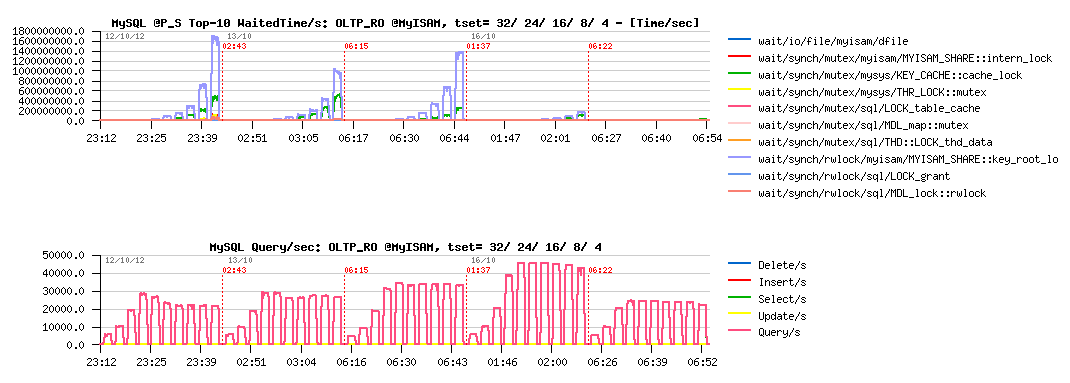

I'm dedicating a separate chapter for this particular test workload, as it was the only case I've tested where MyISAM out-passed InnoDB in performance, so required more detailed analyze here.. Both storage engines are scaling really well on this test, so I'm limiting result graphs to 64 (HT) and 32 cores configurations only.

Let's get a look on MyISAM results on MySQL 5.6-rc1 :

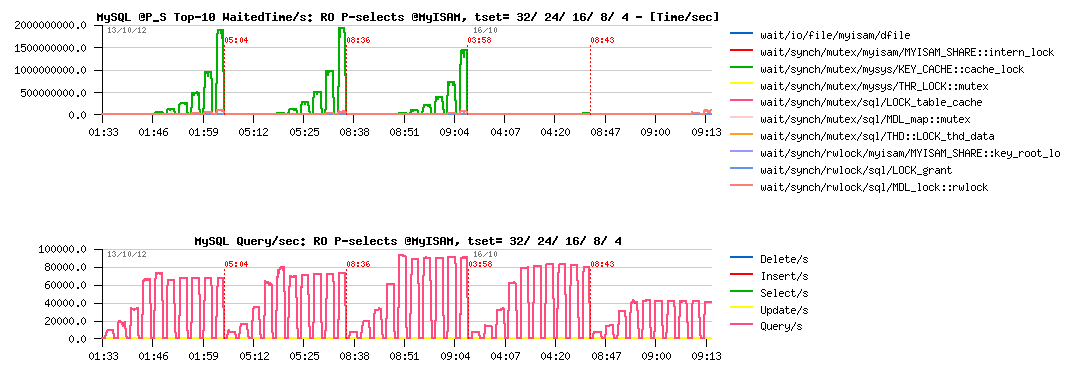

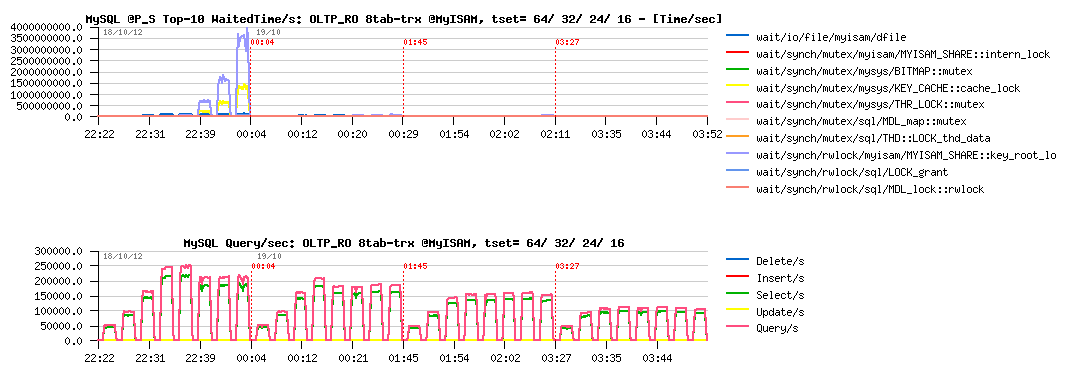

Sysbench RO Point-Selects 8-tables @MyISAM 5.6-rc1 :

Observations :

- MyISAM is reaching 270K QPS max on this workload

- and starting to hit MDL-related contentions here!

While MySQL 5.6-rc2 already contains the first part of MDL optimizations ("metadata_locks_hash_instances"), and we may expect a better results now on workloads having MDL_map::mutex contention in the top position. So, let's see hot it helps MyISAM here.

Sysbench RO Point-Selects 8-tables @MyISAM 5.6-rc2 :

Observations :

- Wow! - 360K QPS max(!) - this is a very impressive difference :-)

- then key cache lock contention is blocking MyISAM from going more high..

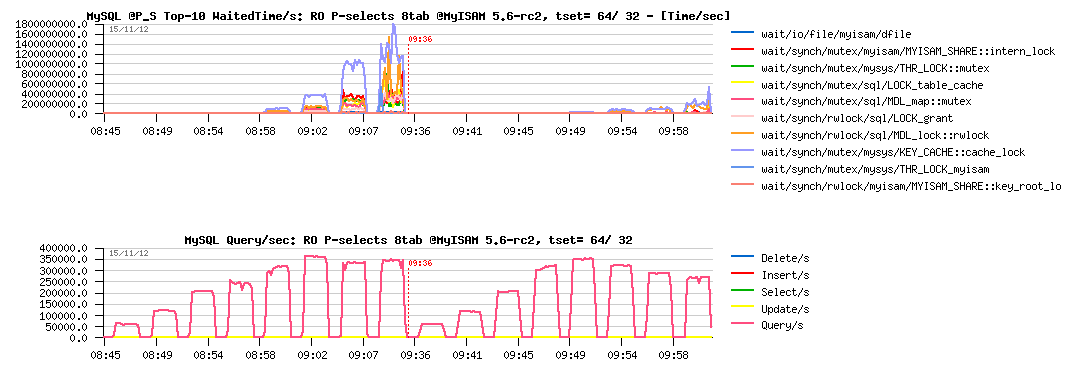

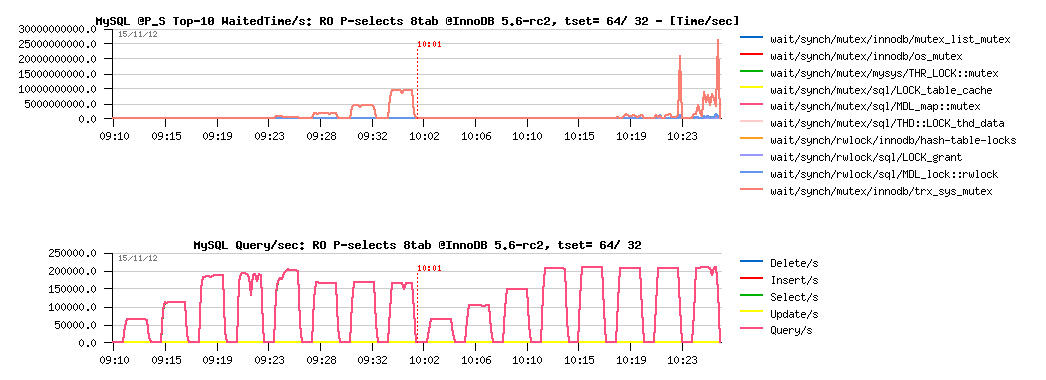

Then, what about InnoDB here?.. - the problem with InnoDB that even with getting a more light code path with READ ONLY transactions it'll still create/destroy read-view, and on such a workload with short and fast queries such an overhead will be seen very quickly:

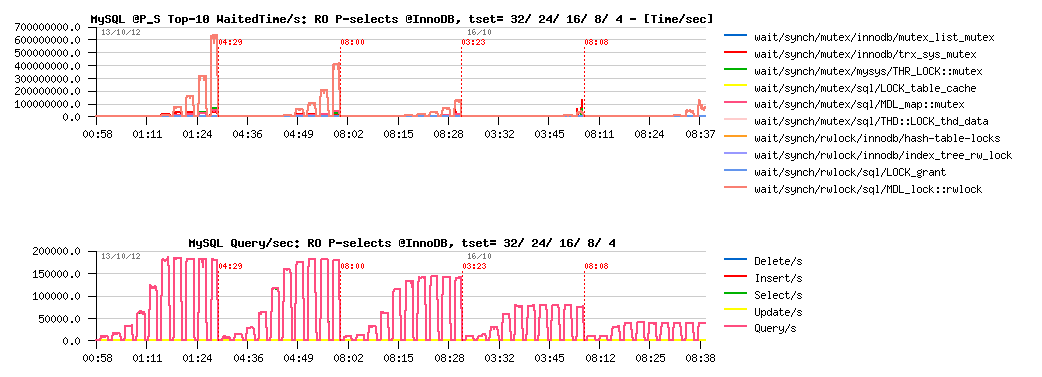

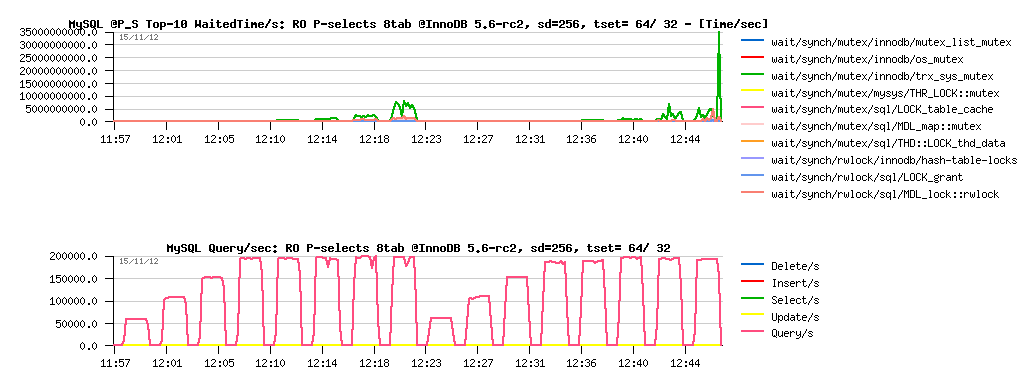

Sysbench RO Point-Selects 8-tables @InnoDB 5.6-rc2 :

Observations :

- InnoDB is reaching only 210K QPS max on this workload

- the main bottleneck is coming from trx_sys::mutex contention (related to read-views)

- this contention is even making a QPS drop on 64 cores threads (HT), so the result is better on pure 32cores..

Such a contention is still possible to hide (yes, "hide", which is different from "fix" ;-)) -- we may try to use a bigger "innodb_spin_wait_delay" value. The changes can be applied live on a running system as the setting is dynamic. Let's try now innodb_spin_wait_delay=256 instead of 96 that I'm using usually :

Sysbench RO Point-Selects 8-tables @InnoDB 5.6-rc2 sd=256 :

Observations :

- as you can see, the load is more stable now

- but we got a regression from 210K to 200K QPS..

So, a true fix for trx_sys mutex contention is really needing here to go more far. This work is in progress, so stay tuned ;-) Personally I'm expecting at least 400K QPS here on InnoDB or more (keeping in mind that MyISAM is going throw the same code path to communicate with MySQL server, having syscalls overhead on reading data from the FS cache, and still reaching 360K QPS ;-))

However, before to finish, let's see what are the max QPS numbers may be obtained on this server by reducing some overheads on internals:

- I'll disable Performance Schema instrumentation

- and use prepared statements to reduce SQL parser time..

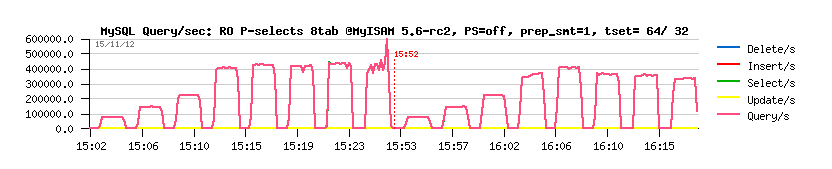

Sysbench RO Point-Selects 8-tables @MyISAM 5.6-rc2 PFS=off prep_smt=1 :

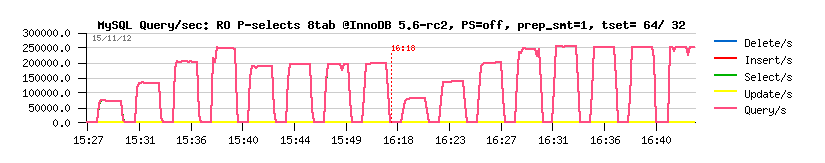

Sysbench RO Point-Selects 8-tables @InnoDB 5.6-rc2 PFS=off prep_smt=1 :

Observations :

- Wow! 430K (!) QPS max on MyISAM!...

- and 250K (!) QPS on InnoDB!

These results are great!.. - and both are coming due the great improvement made in MySQL 5.6 code.

(specially keeping in mind that just one year ago on the same server I was unable to get more than 100K QPS on InnoDB ;-))

While, anyway, I'm still willing to see something more better from InnoDB (even if I understand all these transactional related stuff constrains, and so on)..

So far, let me show you something ;-))

Starting from the latest MySQL 5.6 version, InnoDB has a "read-only" option -- to switch off all database writes globally for a whole InnoDB instance (innodb_read_only=1).. This option is working very similar to READ ONLY transactions today, while it should do much more better in the near future (because when we know there is no changes possible in the data, then any transaction related constraints may be ignored). And I think the READ ONLY transactions may yet work much more better than today too ;-))

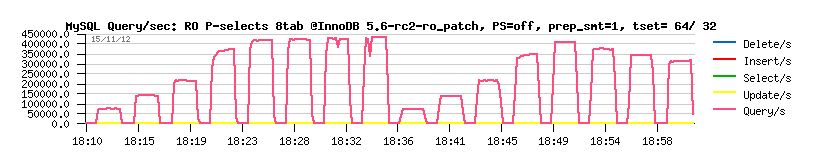

Sunny is working hard on improvement of all this part of code, and currently we have a prototype which is giving us the following on the same workload :

Sysbench RO Point-Selects 8-tables @InnoDB 5.6-rc2-ro_patch PFS=off prep_smt=1 :

Observations :

- as you can see, we're rising 450K (!) QPS within the same test conditions!!! :-)

- and it's yet on an old 32cores bi-thread server..

- it reminds me the famous 750K QPS on "Handler Socket".. - as you see, we become more and more close to it ;-)

- and still passing by a normal SQL and keeping all other RDBMS benefits ;-)

- so, for all users hesitating to use MySQL or move to noSQL land.. - you'll yet be surprised by MySQL power ;-))

INSTEAD OF SUMMARY

- InnoDB seems to be today way faster on FTS than MyISAM

- on OLTP RO workloads InnoDB is also faster than MyISAM, except on point selects, but this gap should be removed too in the near future ;-)

- if you did not try MySQL 5.6 yet -- please, do! -- it's already great, but with your feedback will be yet better! ;-)

And what kind of performance difference you're observing in your workloads?..

Please, share!..

Monday, 05 November, 2012

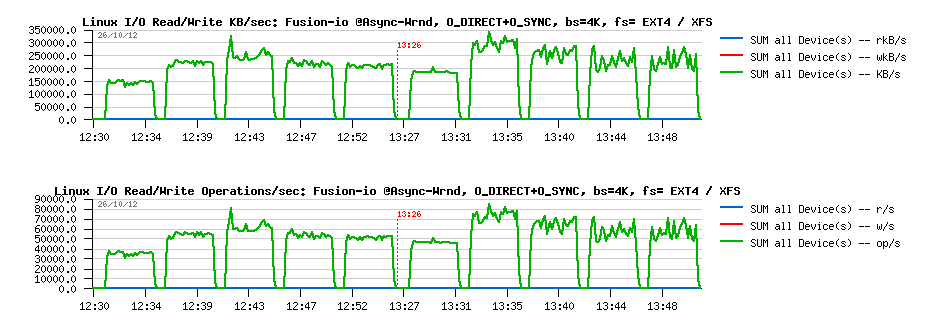

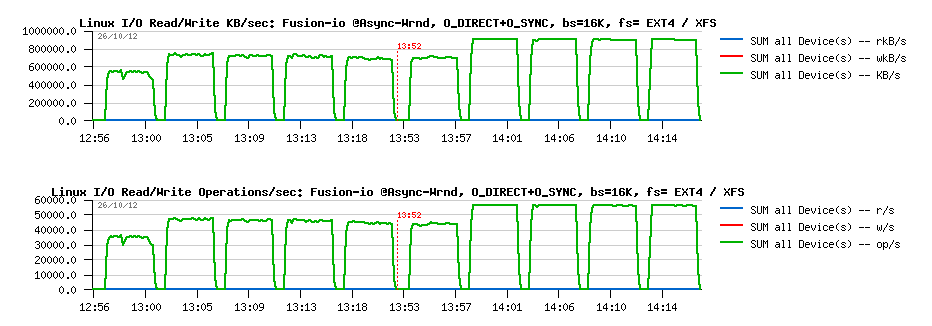

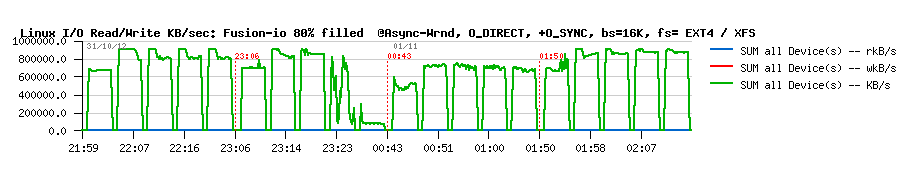

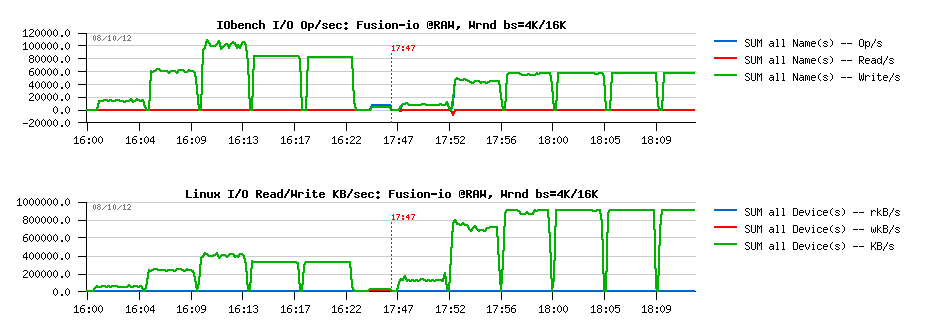

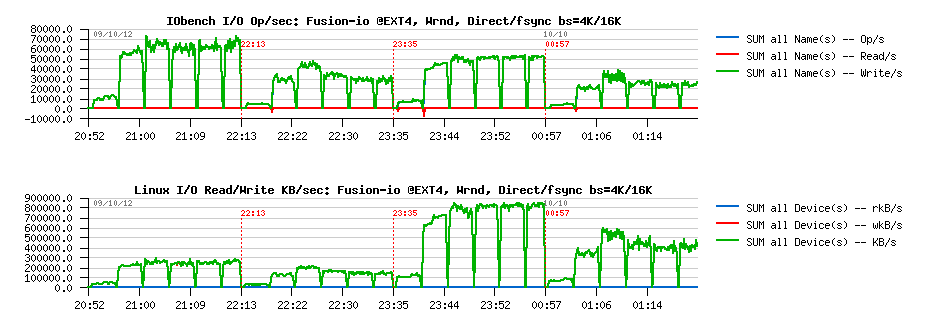

MySQL Performance: Linux I/O and Fusion-IO, Part #2

This post is the following part #2 of the previous

one - in fact Vadim's comments bring me in some doubts about the

possible radical difference in implementation of AIO vs normal I/O in

Linux and filesystems. As well I've never used Sysbench for I/O testing

until now, and was curious to see it in action. From the previous tests

the main suspect point was about random writes (Wrnd) performance on a

single data file, so I'm focusing only on this case within the following

tests. On XFS performance issues started since 16 concurrent IO write

processes, so I'm limiting the test cases only to 1, 2, 4, 8 and 16

concurrent write threads (Sysbench is multi-threaded), and for AIO

writes seems 2 or 4 write threads may be more than enough as each thread

by default is managing 128 AIO write requests..

Few words about

Sysbench "fileio" test options :

- As already mentioned, it's multithreaded, so all the following tests were executed with 1, 2, 4, 8, 16 threads

- Single 128GB data file is used for all workloads

- Random write is used as workload option ("rndwr")

- It has "sync" and "async" mode options for file I/O, and optional "direct" flag to use O_DIRECT

- For "async" there is also a "backlog" parameter to say how many AIO requests should be managed by a single thread (default is 128, and what is InnoDB is using too)

So, lets try with "sync" + "direct" random writes first, just to check if I will observe the same things as in my previous tests with IObench before:

Sync I/O

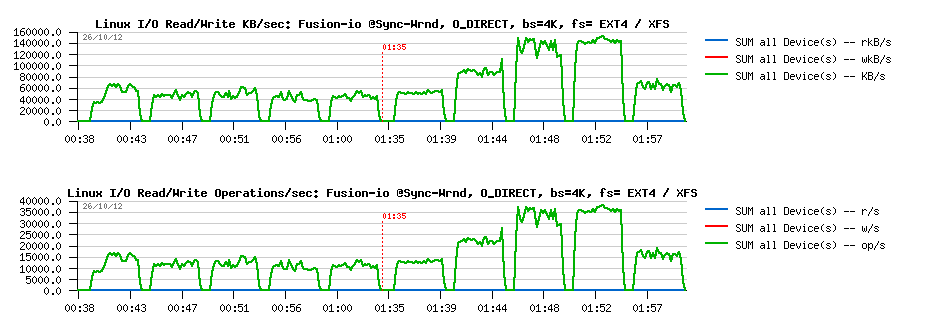

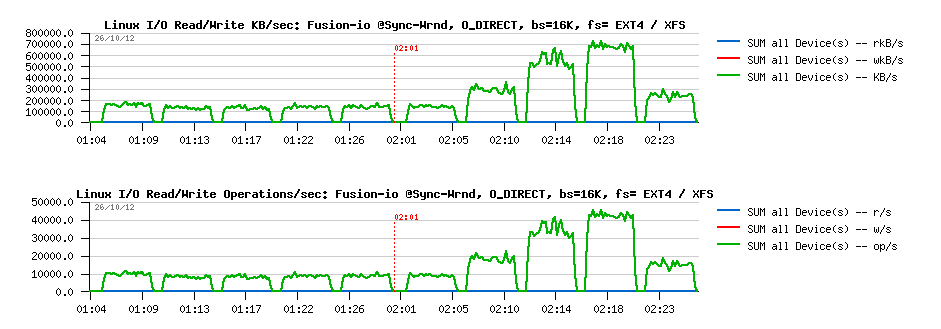

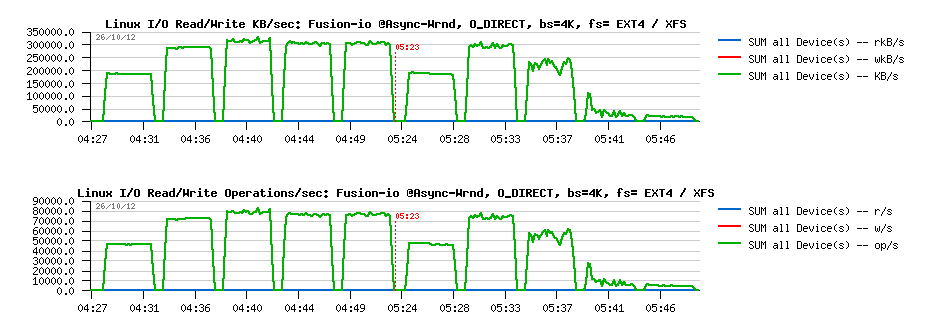

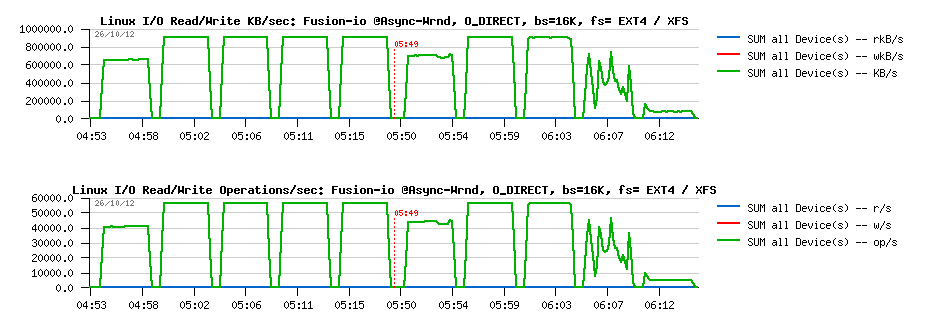

Wrnd "sync"+"direct" with 4K block size:

Observations :

- Ok, the result is looking very similar to before: