« March 2013 | Main | January 2013 »

Thursday, 28 February, 2013

MySQL Performance: Analyzing Benchmarks, part-2 : MDL contentions and PFS overhead in MySQL 5.6

Following the previous post, this article is focused on performance problems reported by Mark Callaghan during MySQL 5.6 GA testings, which was initially about MDL contentions, and then also Performance Schema (PFS) overhead observed within the same OLTP Read-Only workload..

Let's split these 2 problems in 2 parts, and start with MDL related

contentions first.

MDL in MySQL 5.5 and 5.6

What is MDL?.. - I'm strongly inviting you to read Dmitry Lenev's comments within the same bug report to get familiar with MDL feature and its implementation design (http://bugs.mysql.com/bug.php?id=66473)

Quoting Dmitry Lenev:

"First of all I would like to emphasize that MDL was added to solve some problems with correctness of isolation between normal transactions and DDL, like infamous MySQL bug #989. It is not a replacement for the Table Definition Cache and its mutexes/locks are not a replacement for LOCK_open (though it has allowed to reduce the size of some of LOCK_open's critical sections).

So it should be expected that this new synchronization mechanism introduces some extra overhead compared to 5.1. Especially in the case with just 1 connection since in this case the new synchronization layer just adds a pure overhead...."

And then continue with MySQL 5.5 documentation

(while I think that this chapter should be extended with details given

by Dmitry Lenev in the bug report) and there are several real-life

examples pretty well explained in Ovais article.

In

short:

- MDL is must to have feature in MySQL!

- but from performance point of view it represents a pure additional overhead..

Let's get a more close look on this MDL related performance overhead:

- so, first of all additional code is executed on every MDL access

-

then additional synchronization code is used during MDL operation

(read: potential contentions)

- every MDL_lock data are protected by RW-lock

- all MDL_lock entities are living in a HASH

- any acces to a HASH requiring MDL_map mutex lock..

- so, as long as your queries are taking time, or bringing many rows, or living inside of transactions with several queries -- you may even not remark any "contention" related MDL overhead (specially if you're hitting more hot locks like it was in MySQL 5.5)..

- however, as soon as you're running very short and very fast queries, you're starting to hit MDL_map mutex contention on every query! (e.g. on every table access)

- and what is fun: until you're having only one table "hot", contentions ratio between MDL_map mutex and MDL_lock rw-lock will be less or more equal (we're not keeping both on the same time, while every concurrent session will need to acquire a lock on the same MDL_lock for every query (as they are all accessing the same "hot" table))..

- but if you're having several(!) "hot" tables, then MDL_map lock becomes a real problem, as it'll be a single point entry to many MDL_lock entities (as there are several "hot" tables)...

And it's exactly what Mark observed when reported initially in his bug report - using Sysbench OLTP RO Point-Selects scenario with 8 tables bombarded by many concurrent users..

NOTE: of course, this contention became visible in MySQL 5.6 only since all other contentions were already lowered ;-)

But well, this bug was reported in Aug.2012 and fixed in Sep.2012 (Dmitry Lenev) by introducing of a new configuration option: metadata_locks_hash_instances. Which is giving a possibility to split initial single hash entry (protected by a single MDL_map mutex) into several ones - "hash instances" (8 by default), each one protected now by its own MDL_map mutex. The choice of which hash instance should be used for a given "object" (here: table) was delegated to my_hash_sort_bin() function and based on the "object" name. In short for the given bug report case:

my_hash_sort_bin( "db_name.table_name" ) % metadata_locks_hash_instances ==> index of hash instance to use

And I was able to test this solution, confirm obtained performance improvements, and even present the results during MySQL Connect conference ;-))

So, what happened wrong since then, if Mark re-opened again the same bug in Feb.2013?.. - for out big surprise, according Mark's test results the bug was still here... While Mark used only 8 tables in his test and there was already 8 hash instances in MySQL 5.6 GA configured even by default..

Thanks to PFS (yes, Performance Schema again), we were able to understand the real source of the problem.. - and I'd say it was tragical and comical on the same time ;-)) -- for some unknown reason the famous my_hash_sort_bin() function gave very closer keys for different table names Mark used in his test... - As a result, always the same hash instance was used for all tables, likely there was only one single hash instance as it was before... Lack of luck? ;-) -- another hash function taken from InnoDB code worked just perfectly with Mark's table names.. (while my_hash_sort_bin() did not get any trouble to dispatch my table names ind different hash instances too ;-))... So, don't know how much InnoDB hash function will be better for a "final" solution, because hash key is hash key -- even completely different table names may still come with pretty close key numbers (and Dmitry is working on yet more improved solution).. But for a time being, if you're hitting MDL_map mutex contention, just think to increase the default metadata_locks_hash_instances=8 value to something more big (like 256 worked better for Mark).

Now, how to know that you're hitting a non-equilibrated access to your hash instances?

A simple PFS query (yes, again Performance Schema! ;-)) gives you the answer :

mysql> select event_name, count_star from events_waits_summary_by_instance

where event_name like '%mdl%mutex%';

+-------------------------------------+------------+

| event_name | count_star |

+-------------------------------------+------------+

| wait/synch/mutex/sql/MDL_map::mutex | 2840960 |

| wait/synch/mutex/sql/MDL_map::mutex | 2767663 |

| wait/synch/mutex/sql/MDL_map::mutex | 2777470 |

| wait/synch/mutex/sql/MDL_map::mutex | 2835248 |

| wait/synch/mutex/sql/MDL_map::mutex | 2850254 |

| wait/synch/mutex/sql/MDL_map::mutex | 2766048 |

| wait/synch/mutex/sql/MDL_map::mutex | 2796438 |

| wait/synch/mutex/sql/MDL_map::mutex | 3004758 |

+-------------------------------------+------------+

8 rows in set (0.00 sec)

And as you can see from the output, it was pretty ok for me with my 8

tables and default 8 hash instances ;-)

(if the difference between

counter values is bigger, think to increase the

metadata_locks_hash_instances setting, but be sure first that MDL_map

mutex contention is present and remains significant, otherwise

increasing a number of metadata_locks_hash_instances will not give you

any visible improvement)..

Let's see now how big may be the

impact of such a lock contention.

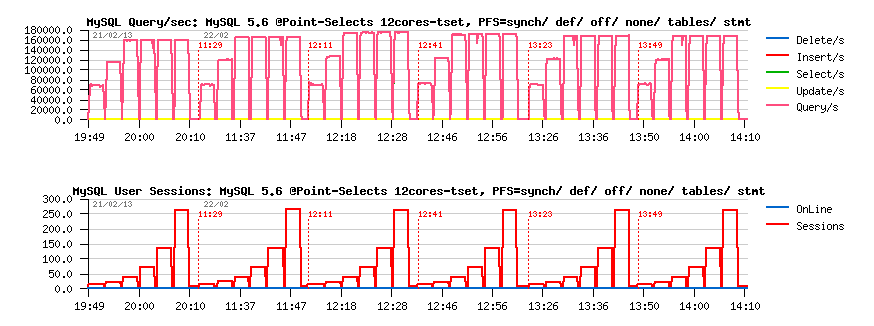

For the following test I've

used a "true" 12cores server, which seems to be very similar to Mark's

server:

- 12cores-HT 2.9Ghz, 96GB RAM

- OEL6.2

- MySQL 5.6 GA

- Sysbench 0.4.13 OLTP_RO Point-Selects scenario with 8 tables

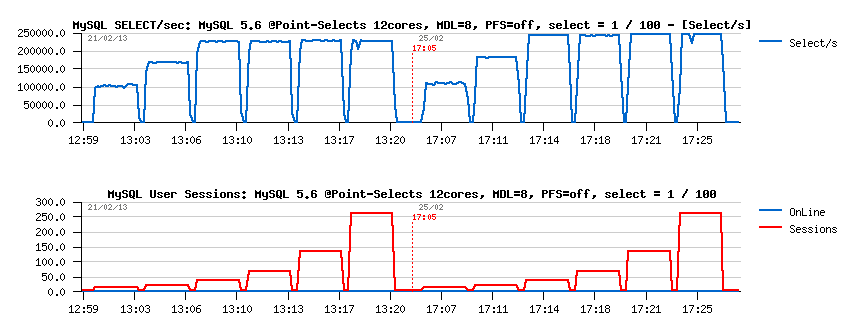

Let's start with a small number of concurrent users (8-32):

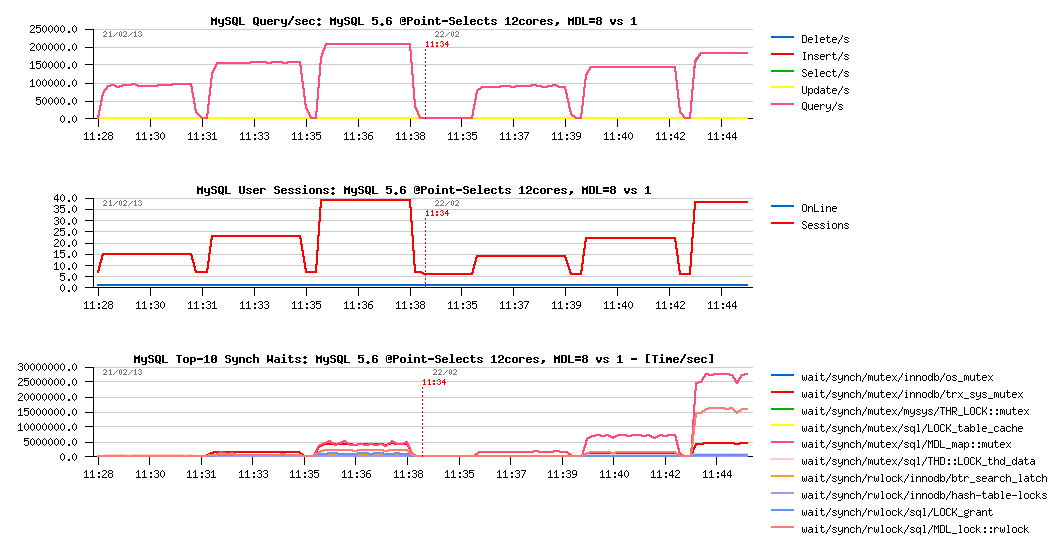

Impact MDL hash instances = 8 vs 1 :

Observations :

- So, the left part of graphs representing the results with metadata_locks_hash_instances=8, while the right with metadata_locks_hash_instances=1 (like it was initially, and showing what Mark should observe in his tests last year and this year once again ;-))

- as you can see, the real impact in performance is only seen since 32 concurrent users (~210K QPS vs 185K QPS)

- and the difference in MDL mutex wait times reported by PFS (yes, again ;-)) is simply spectacular..

And as we're speaking about monitoring, and if you're still have some doubts in PFS power, let me show you something.. - the following graphs are corresponding to the same workload, but now with the same QPS levels you'll also see lock waits reported by InnoDB and waits events/sec (not time!) reported by PFS:

Monitoring on MDL hash instances = 8 vs 1 :

Observations :

- from the InnoDB lock waits report you may see that the number of waits/sec on trx_sys mutex decreased when metadata_locks_hash_instances setting was changed from 1 to 8, right?..

- however, only PFS gives you the right answer on the graph before (with time waited per second) -- you can see that in both test cases the waited time on trx_sys mutex remained exactly the same!

- and looking on the graph above with PFS reported waits/sec you may see that trx_sys mutex is more often involved when metadata_locks_hash_instances=8 is used (which is as expected, as we're obtaining a higher QPS)..

- BTW, from the same graph you may see that instrumentation code on locks is executed several millions times/sec, so, of course, impossible to have it with zero overhead..

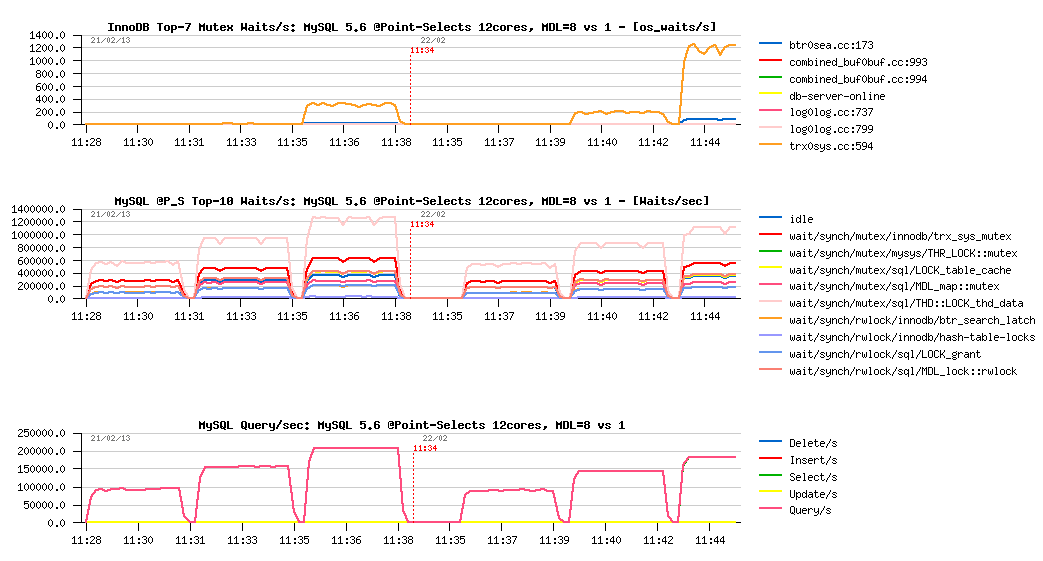

Let's get a full picture now of the same test, but with 8 to 256 concurrent users now:

MySQL 5.6 Point-Selects @12cores, MDL hash instances = 8 vs 1 :

Observations :

- despite high contention on MDL_map mutex with metadata_locks_hash_instances=1, it's still reaching 200K QPS at the end of the test..

- however, metadata_locks_hash_instances=8 is giving us stable 210K QPS since 16 and up to 256 concurrent users

What will be the "final" result now if I'll disable PFS? (and as the discussion about 5.6 vs 5.5 performance also in the air currently, let's include MySQL 5.5 results on the same graph too):

Comparing MySQL 5.6 MDL hi= 8 / 1, MySQL 5.5, PFS=off :

Observations :

- so far, MySQL 5.6 with metadata_locks_hash_instances=8 reaching 230K QPS

- with metadata_locks_hash_instances=1 - 220K QPS

- and MySQL 5.5 is getting 205K QPS..

- interesting that MySQL 5.6 with metadata_locks_hash_instances=8 results become already better than MySQL 5.5 since 16 concurrent user sessions..

- as well we may note 9% PFS overhead between PFS=off and PFS=synch setting (synch: when all synch event instrumentation is enabled (mutexes, rw-locks, etc. - in fact the most "heavy" instrumentation))

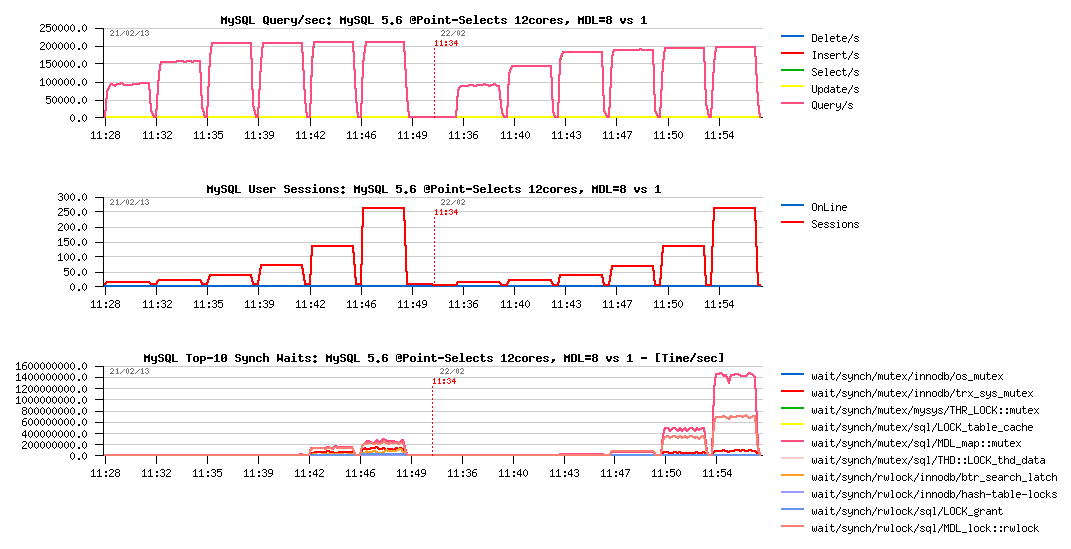

And to finish with MDL, let's see how far we may go by lowering MDL locks even more... - to do this all we need is just to keep in mind that within a transaction MDL lock for the same table is acquired only once (and not for every query as in auto-commit)..

So, I'll just change Sysbench option to execute 100 Point-Selects queries within a single transaction (instead of one query in auto-commit).. - and here is the result:

Going further with MDL :

Observations :

- as you can see, instead of 230K QPS before we're getting 250K QPS now!

- and probably it's the max for this server, as it also lowered trx_sys mutex contention as well, and the hottest wait time moved to GRANT lock.. pretty fun ;-)

Hope everything is clear so far with MDL locking, and let's get a look now on PFS overhead..

PFS Overhead in MySQL 5.6

Before even start to discuss about, let me bring your attention to the article I've intentionally published last year about the Game of contentions - this show you by example that there is no "pre-defined" PFS overhead.. - as soon as you're hitting internal lock contentions within MySQL server code or InnoDB engine, you cannot say that PFS overhead will remain on some expected level.. - All depends which levels of instrumentation you've enabled, and which level of MySQL code you're touching..

Last year I've observed not more than 5% overhead on various test workloads when PFS was enabled by defaults (like it's today in MySLQ 5.6 GA). However, MySQL 5.6 performance was yet more improved since then, means PFS instrumentation code is executed yet more often too.. - so, when you're reaching a peak QPS level, and your performance is limited by internal MySQL contentions, then PFS overhead will be the most visible.. However, you may always go step by step, and enable PFS instrumentation according your needs.

On the tested workload Mark reported 10% overhead between PFS=off and PFS=def (default) configs.. Let's see what I'm obtaining on my server on the same tests.

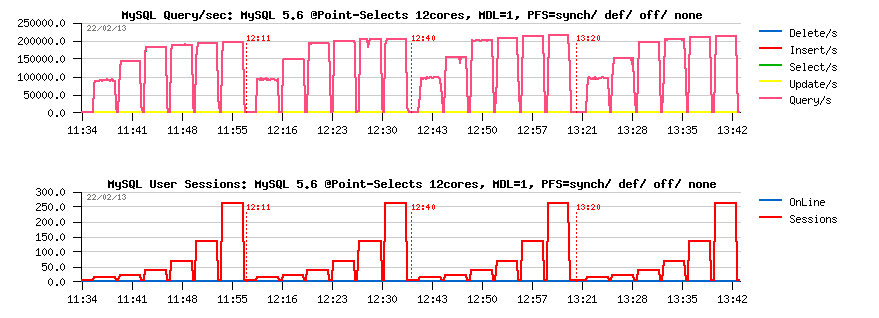

let's get a look on "initial" PFS overhead (before the fix with hash instances, like metadata_locks_hash_instances=1):

PFS overhead, MDL hash instances =1 :

Observations :

- the first test is executed with PFS=synch (mutexes, rw-locks, plus all "default") : 200K QPS

- then with PFS=def (just default instrumentation enabled) : 205K QPS

- then PFS=off : 220K QPS

- then with PFS=none (PFS is enabled, but all instrumentation disabled) : 215K QPS

- so, the difference in QPS levels is: 200K -vs- 205K -vs- 220K -vs- 215K

-

and regression comparing to PFS=off:

- PFS=synch : 10%

- PFS=def : 7%

- PFS=none : 2%

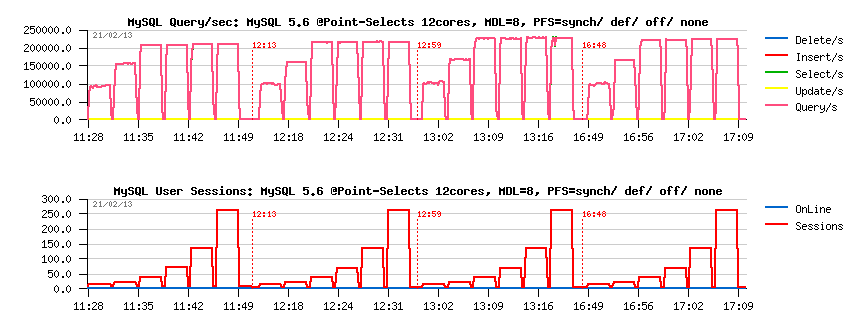

What is changing when MDL lock contention is lowered (with metadata_locks_hash_instances=8)

PFS overhead, MDL hash instances =8 :

Observations :

- here PFS=off performance is 230K QPS

-

so, the overhead vs PFS=off:

- PFS=synch : 9.5% (210K QPS)

- PFS=def : 4.5% (220K QPS)

- PFS=none: 2% (225K QPS)

As you can see, PFS regression is decreasing as far as we're fixing internal MySQL code contentions ;-)

And performance level of PFS=def with metadata_locks_hash_instances=8 is equal now to metadata_locks_hash_instances=1 and PFS=off.. - just think about ;-))

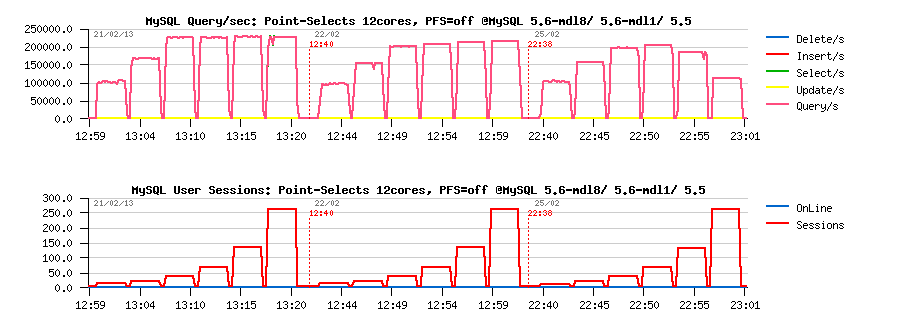

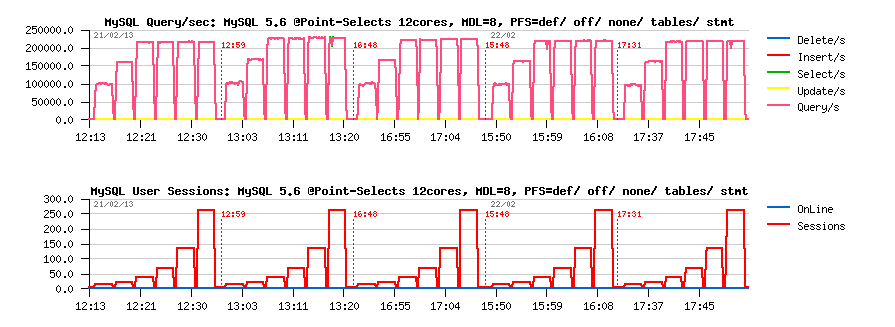

However, let's go more in details of the PFS=def overhead. In fact, by default we're enabling tables+I/O related and statements related PFS instrumentations (all can be dynamically disabled/enabled at any moment), but what is the overhead of each one alone?..

PFS=def overhead details, MDL hash instances = 8 :

Observations :

- PFS=off : 230K QPS

-

overhead vs PFS=off:

- PFS=def : 4.5% (220K QPS)

- PFS=none : 2% (225K QPS)

- PFS=tables: 3% (223K QPS)

- PFS=statements : 3% (223K QPS)

So, as you see, you can keep PFS overhead as low as 2% and still have PFS in "enabled" state.. Then enable on demand tables/IO or statements instrumentation for 3% overhead.. (or keep enabled for 3%, or both for 4.5%, etc.) - As you see, you have several options ;-)

NOTE: PFS collecting most of the data "lock free" (most of collected data are saved within user thread local data) -- this is avoiding any potential contentions on instrumentation code itself. But when you're requesting these data via PFS query, these data should be summarized before printed, so such a request will easily monopolize one CPU core on your server during execution (even for a short period), so try to avoid to send several PFS queries on the same time ;-)

However, I did not meet 10% PFS=def overhead reported by Mark.. - let's try replay the same test case on a different server. I'll try to reproduce the same issue by keeping MySQL server running within a 12cores taskset on 32cores server.

Config:

- 32cores-HT 2300Mhz

- OEL 6.2

- MySQL 5.6 GA running within 12cores-HT taskset, metadata_locks_hash_instances=8

-

Sysbench 0.4.13 Point-Selects 8 tables workload

- running within the same 12cores taskset as MySQL server

- running on all 32cores

PFS overhead @12cores-HT taskset, Sysbench on the same 12cores :

Observations :

- PFS=off performance is 178K QPS..

-

overhead vs PFS=off :

- PFS=sync : 11% (160K QPS)

- PFS=def: 6.5% (167K QPS)

- PFS=none : 2% (174K QPS)

- PFS=tables : 4.7% (170K QPS)

- PFS=statements : 4.7% (170 QPS)

PFS overhead @12cores-HT taskset, Sysbench on all 32cores :

Observations :

- PFS=off performance is 220K QPS..

-

overhead vs PFS=off :

- PFS=sync : 10% (200K QPS)

- PFS=def: 6% (207K QPS)

- PFS=none : 2% (215K QPS)

- PFS=tables : 4% (211K QPS)

- PFS=statements : 4.7% (210 QPS)

So far, PFS overhead is slightly bigger on this server for some reasons.. (probably its higher sensibility to cache coherency playing a role here, don't know).. However, the PFS=none overhead is still 2%, while other instrumentations are still remaining available to be enabled dynamically on demand.. So, I still see more potential benefit to keep PFS=on (even as PFS=none), rather simply switch it off ;-) -- And again, all these overheads are known today and can be measured just because PFS instrumentation is dynamic and also configurable on compiler level (you may compile MySQL server without PFS code at all, or just without any mutex/rw-lock instrumentation, etc.) -- pretty different comparing to "static" solutions where stats collections are hard coded and we may just expect that their overhead is small enough.. (as well we're far today from initial 20% PFS overhead and other surprises)..

But well, as said before, PFS overhead is mainly dependent on your HW, your workload, internal MySQL contentions you're hitting, and, of course, on instrumentation level you're enabling.. - nothing coming for free ;-) So, if you're suspecting any unexpected PFS overhead on your workload - just log a bug, create a test case that we're able to reproduce, and we'll work on it ;-)

APPENDIX

PFS=off :

- performance_schema=OFF

PFS=def :

- performance_schema=ON

PFS=sync :

- performance_schema=ON

- performance_schema_instrument='%synch%=on'

PFS=none :

- performance_schema=ON

-

SQL>

- use performance_schema;

- update setup_consumers set enabled = 'no';

- update setup_instruments set enabled = 'no', timed = 'no';

PFS=tables :

- performance_schema=ON

-

SQL>

- update performance_schema.setup_instruments set enabled='NO', timed='NO';

- update performance_schema.setup_instruments set enabled='YES', timed='YES' where name like "wait/%/table/%";

- update performance_schema.setup_instruments set enabled='YES', timed='YES' where name like "wait/io/file%";

- update performance_schema.setup_consumers set enabled='NO';

- update performance_schema.setup_consumers set enabled='YES' where name='global_instrumentation';

- update performance_schema.setup_consumers set enabled='YES' where name='thread_instrumentation';

PFS=statements :

- performance_schema=ON

-

SQL>

- update performance_schema.setup_instruments set enabled='NO', timed='NO';

- update performance_schema.setup_instruments set enabled='YES', timed='YES' where name like "statement/%";

- update performance_schema.setup_consumers set enabled='NO';

- update performance_schema.setup_consumers set enabled='YES' where name='global_instrumentation';

- update performance_schema.setup_consumers set enabled='YES' where name='thread_instrumentation';

- update performance_schema.setup_consumers set enabled='YES' where name='statements_digest';

Rgds,

-Dimitri

Tuesday, 19 February, 2013

MySQL Performance: Analyzing Benchmarks, part-1 : MySQL 5.6 and MariaDB 5.5

Inspired by long debates about differences in my benchmark results on MySQL 5.6, MySQL 5.5 and MariaDB 5.5 and MariaDB benchmark results, I've decided to start a series of articles helping people to reproduce various test cases and better understand some of fun stuff around.. ;-)

This article is fully dedicated to the MySQL 5.6/5.5 vs MariaDB tests.

So far, generally, when you're trying to understand a source of gap in performance results, you should try to start from the most simple case for both "solutions", "platforms", and so on.. For databases the most "simple" case is Read-Only (RO) workload, specially the OLTP RO workload which is fully cached by RDBMS buffers and no more depending on your storage performance, redo/undo design/options, and so on..

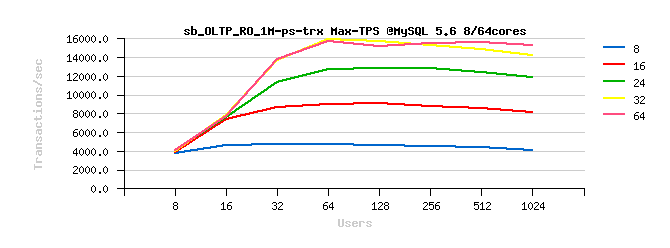

So, MariaDB is publishing the result of Sysbench-0.5 OLTP_RO test with 8 tables, running on a 16cores server and showing on MySQL 5.6 performance lower than 4500 TPS . And the most "closest" test I have in my list to match the same test conditions is OLTP_RO-trx 8-tables (also with 8 tables), but using Sysbench-0.4.13 version, and on my server on 16cores it showing more then 7500 TPS. Of course, I was surprised.. And even more surprised that MySQL 5.5 is performing better than MySQL 5.6..

However, here I should make an important remark :

- there is no miracle ;-) the code path in MySQL 5.6 is more longer then in MySQL 5.5 (due all new features added)

- so, we may expect some additional overhead here, and 5.6 may run slightly slower in workloads with a low level of contentions (such a single user session, or small/old servers (8cores and less)..

- but such an overhead should not be bigger than 3%, and all our QA tests on 8cores servers confirmed it..

- now, if we meet today some cases showing a bigger overhead - just log a bug and we'll analyze and fix it ;-)

- however, on bigger servers, with 16cores and more, you'll see the gap between MySQL 5.6 and MySQL 5.5 more an more bigger!

- our goal in MySQL 5.6 was to scale more far than 5.5.. - keeping in mind that 24cores (and bigger) servers become a today's commodity, it was really pity if you have a "big" server and unable to use it on its full power.. (and many users reported it)..

Well, let's come back to MariaDB test.. - we're not using the same HW, we're not using the same Sysbench versions, and so on.. And, discussions about these differences may be endless.. So, to understand exactly what is going on, I've decided to replay exactly the same OLTP RO scripts provided by MariaDB on my own server.

Remark regarding PFS :

- if you're comparing MySQL 5.6 and MySQL 5.5 keep in mind Performance Schema (PFS) overhead..

- in MySQL 5.5 this overhead is very big, and by default PFS in 5.5 is turned off..

- in MySQL 5.6 PFS overhead is much lower, and that's why by default PFS is turned on in 5.6 with a minimal instrumentation enabled..

- however, this overhead is still present! ;-) (normally should be around 5%, but depends on a workload)

- so, for a honest comparison PFS should be disabled on both engines..

So, keeping this in mind, I've started the same OLTP_RO test via MariaDB's script, same test conditions (12cores attributed to MySQL server, 4cores to sysbench), but still keeping my own my.conf setting as before (and PFS=off). Here is the result:

Users MySQL 5.6 MySQL 5.5 MariaDB 5.5

---------------------------------------------------------

8 : 3460 3680 3430

16 : 5270 5479 4860

32 : 5990 6120 5250

64 : 6000 6150 5260

128 : 5900 6030 5200

256 : 5800 5900 5140

512 : 5500 5640 4940

---------------------------------------------------------

So :

- yes, there is a small regression here in MySQL 5.6 results comparing to 5.5..

- but regression is not out-passing 3%..

- and, curiously, TPS is still better than under 4500 TPS level published by MariaDB..

Now : if you're really looking to get the Max performance from your 16cores server, you should not limit MySQL server to only 12cores (except if your goal is to see the max TPS on 12cores ;-)) -- the best performance will be usually seen when all 16cores are fully available to both: "server" and "client"! (as they're working in ping-pong: query/answer each one on its turn, and so on)..

So, here is the result when both MySQL server and sysbench are using the same 16cores :

Users MySQL 5.6 MySQL 5.5 MariaDB 5.5

---------------------------------------------------------

8 : 3460 3620 3330

16 : 6740 6730 5350

32 : 6680 6650 5310

64 : 6670 6640 5350

128 : 6570 6550 5330

256 : 6510 6510 5300

512 : 6460 6450 5280

---------------------------------------------------------

Ok, looks better:

- now you can see that MySQL 5.6 is running most of the time same or better than MySQL 5.5 ;-)

- and if you'll look attentively on my initial graphs with benchmark results, you'll see exactly the same (pretty similar performance on 8 and 16cores, then growing TPS gap since 16cores)..

What about 24cores now within the same test case?..

Here is the result:

Users MySQL 5.6 MySQL 5.5 MariaDB 5.5

---------------------------------------------------------

8 : 3470 3600 3210

16 : 6790 6040 3940

32 : 9980 5320 3640

64 : 9880 5370 3640

128 : 9830 5390 3650

256 : 9690 5480 3680

512 : 9610 5390 3670

---------------------------------------------------------

As you can see :

- near 10,000 TPS and over 45% improvement comparing to 16cores result (6740 TPS Max)..

- while MySQL 5.5 is unable to follow...

NOTE:

- all these results are obtained on the same OLTP RO test case provided by MariaDB..

- but using my own MySQL configuration settings..

Now, what will be changed if I'll use the my.conf file used by MariaDB in the testing?..

Bingo!...

- as soon as I've started to use "original" my.conf from MariaDB my performance was dropped to 4000 TPS only on 16cores..

- which is matching perfectly the numbers reported by MariaDB..

- so, for sure there is something wrong in the my.conf file.. but what?..

- ... after some tweaking and testing.. ;-)) ...

- I've found that the problem is coming from the sort_buffer_size value !!!

- I've used 32K for both sort_buffer_size and join_buffer_size

- while MariaDB used 4M for both..

- don't think it was intentional, but seems to me the main error was here..

- once leaving it by default (256K) or setting to mine (32K), I've quickly reached the same near 7000 TPS level as I've observed before!

The problem with "sort_buffer_size" is not new, and if you want to read more about, I'm inviting you to start from the "More on understanding sort_buffer_size" article by Ronald Bradford. This article also containing a lot of links to other posts (and I remember there was also an article testing impact of different sort_buffer_size values on OLTP workload, but I did not find it.. - but from a while I'm using 32K values for both "sort_buffer_size" and "join_buffer_size" on OLTP tests)..

INSTEAD OF SUMMARY :

- don't forget to turn Performance Schema off in MySQL 5.6 if you're comparing it with previous MySQL versions (otherwise it'll not be apples-to-apples comparison)..

- if you're looking for a full power TPS results on your HW server - share the same CPU cores for both "client" and "server" (or use another server for "client", but be sure your "client" activity (here sysbench) is not blocked by any HW or SW limits other than MySQL server itself)..

- check your MySQL Server configuration settings (mine is here, but I'm not pretending to have the most adapted one, and believe me, you will always be able to find a better tuning for your workload by yourself ;-))

- adopt jemalloc library from the beginning -- it may save you a lot of headache time! ;-)

So, the "mystery" with MariaDB benchmark results is finished for me now.. Focusing now on Peter's and Mark's problems..

Rgds,

-Dimitri

Wednesday, 13 February, 2013

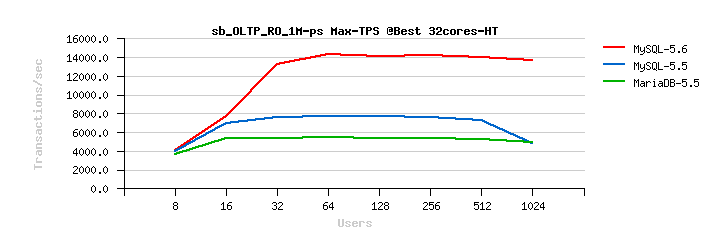

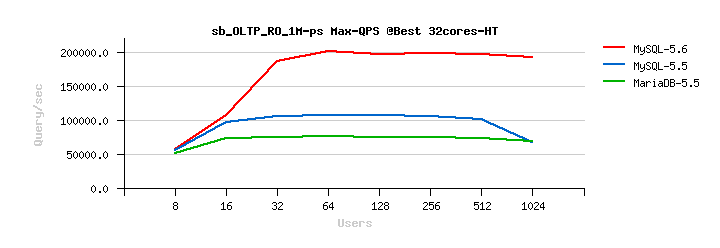

MySQL Performance: MySQL 5.6 -vs- MySQL 5.5 -vs- MariaDB 5.5

Finalizing my series of MySQL 5.6 -vs- MySQL 5.5 benchmarks (best-to-best results and config settings (1), scalability (2), tuning details and yet more test results (3)), I've realized that it just needs a one point more - MariaDB results..

One of my friends called me to tell a story about an article in one of

computer magazines saying that "If you're having performance issues

with MySQL 5.5, you should try MariaDB 5.5 and you'll be surprised!"..

It waked up my curiosity. MariaDB-10 seems to be only in alpha stage for

the moment, and the latest stable MariaDB version in 5.5. So I've

installed the latest for today MariaDB-5.5.29, and executed exactly the

same Sysbench workloads on MariaDB too.

However, the results I've observed reminded me the old heavy days in the past years while we were fighting agains all the bottlenecks in MySQL 5.5.. Situation is greatly improved now in MySQL 5.6, and from the following you'll see why you need to upgrade to MySQL 5.6 asap! ;-)

I will not spend more time on it, but just sharing here my results.

Note:

- I've used the same "original" Sysbench

- same test cases

- same MySQL 5.6 Community Edition (GPL) and same MySQL 5.5 Community Edition (GPL)

- same config settings as in previous posts

- so, exactly the same conditions for everything..

- just added MariaDB 5.5 into a game..

As before, best-to-best obtained results are presented in TPS and QPS. As well, I'm also including here corresponding "scalability" graphs to confirm you that on 16cores MySQL 5.6 is still same or faster than 5.5, but, of course, on a bigger server with 24, 32 or more cores you'll see a real MySQL 5.6 power in action! ;-))

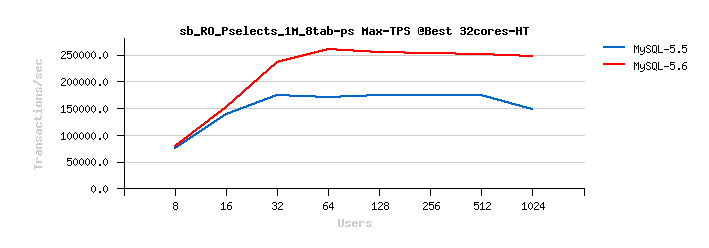

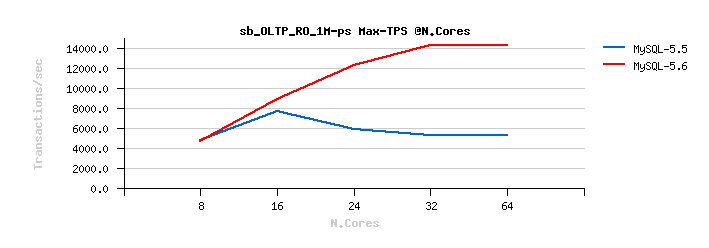

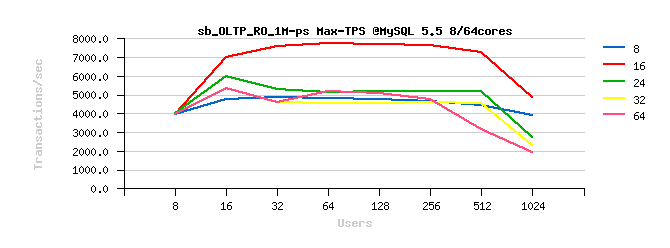

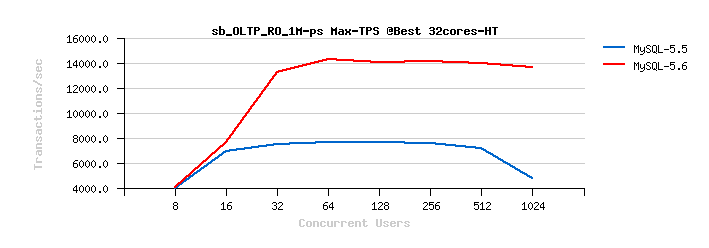

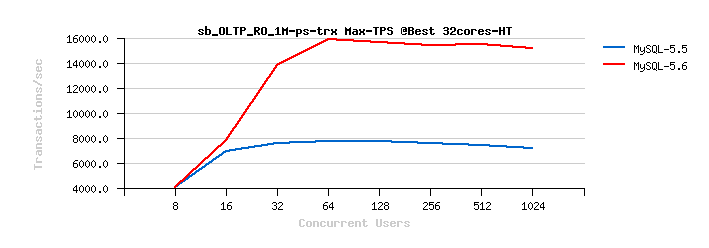

Sysbench OLTP_RO :

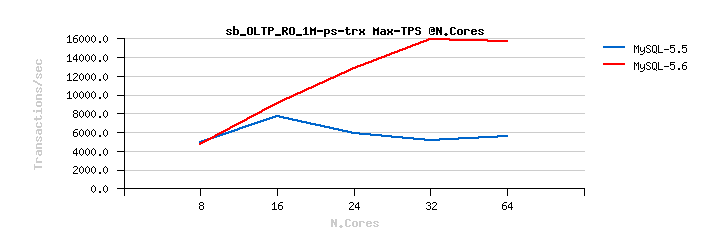

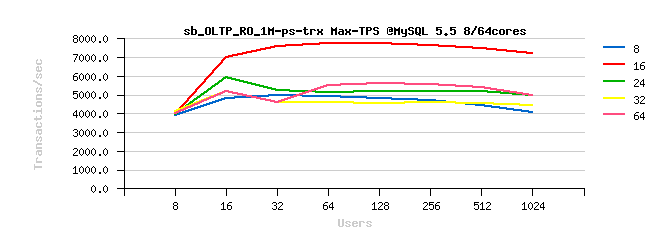

Sysbench OLTP_RO-trx :

Sysbench OLTP_RO 8-tables :

Sysbench OLTP_RO-trx 8-tables :

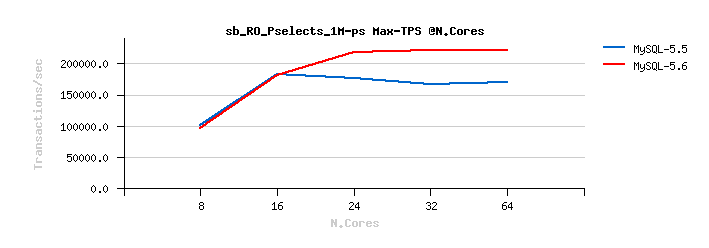

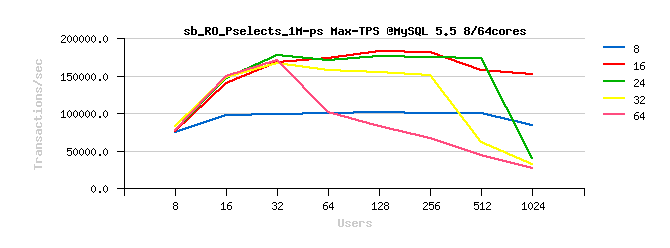

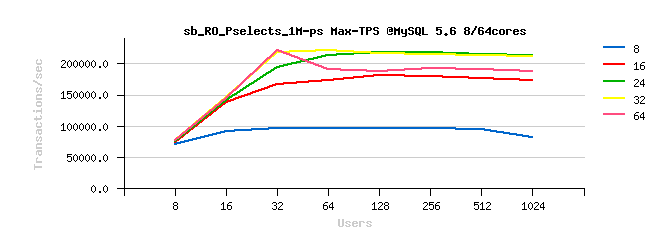

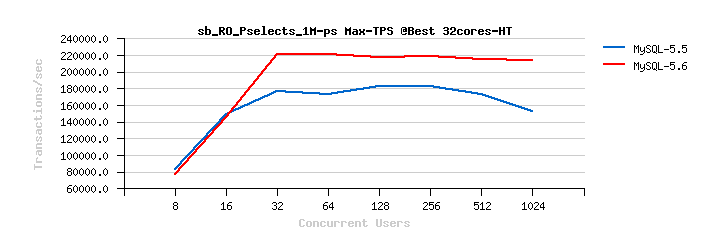

Sysbench OLTP_RO Point-Selects :

Sysbench OLTP_RO Point-Selects 8-tables :

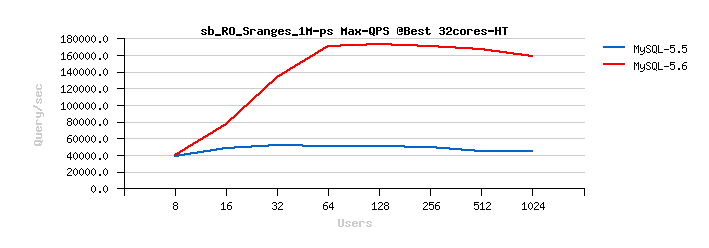

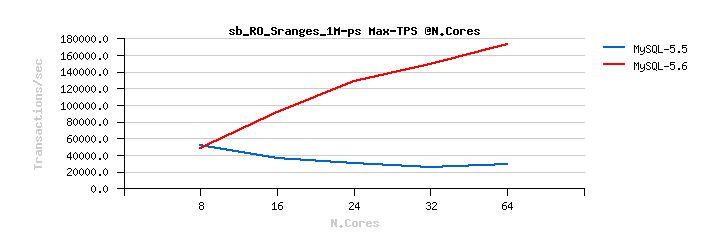

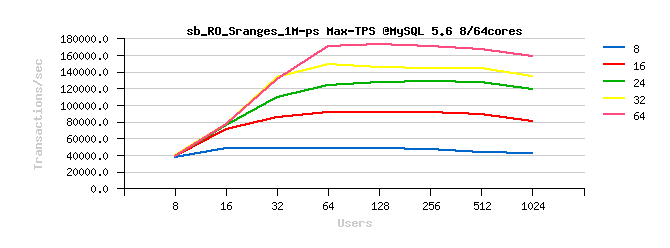

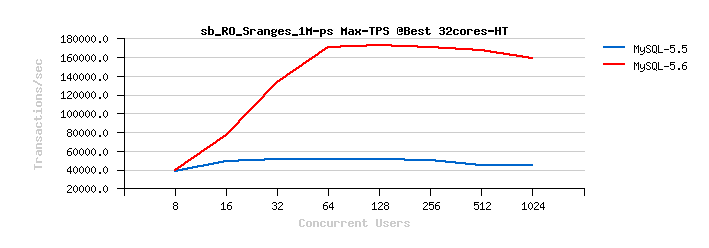

Sysbench OLTP_RO Simple-Ranges :

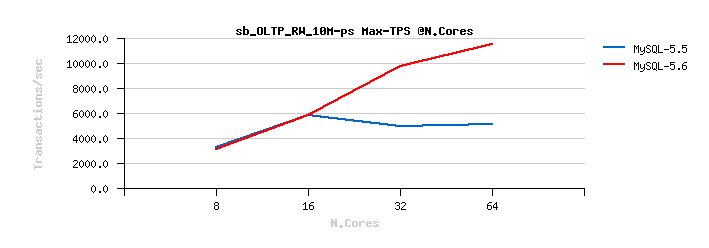

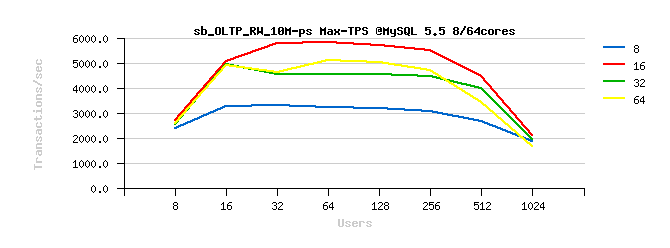

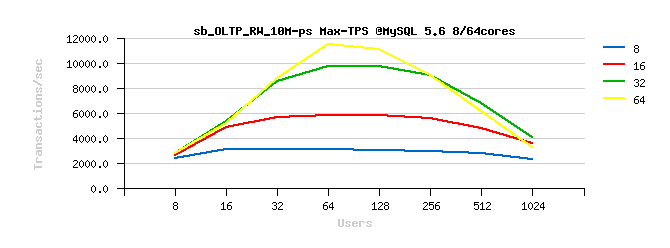

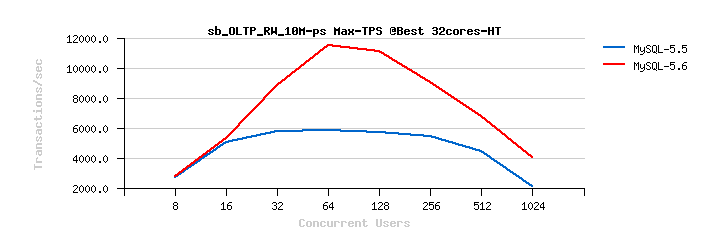

Sysbench OLTP_RW 10M :

Sysbench OLTP_RW 10M 8-tables :

SUMMARY :

- as you can see, MySQL 5.6 GA has the best performance, followed by MySQL 5.5, and MariaDB 5.5 comes in last..

- so, if you're getting performance problems with MySQL 5.5, then upgrade to MySQL 5.6 asap and you'll be really surprised!!! ;-)

- and it's not only about performance.. - get a look on a full list of all new MySQL 5.6 features presented by RobY and PeterZ, as well a very good DBA & developer guide with many other details..

Rgds,

-Dimitri

Tuesday, 12 February, 2013

MySQL Performance: MySQL 5.6 GA -vs- MySQL 5.5 tuning details

This post is the next part following the initial article about MySQL 5.6 vs 5.5 benchmark results (and MySQL 5.6 scalability).

The focus in this article is on the "tuning impact" used during the published tests, and also I have for you few more test results to present which were missed initially -- Sysbench tests using 8 tables instead of a single one (as it does by default).

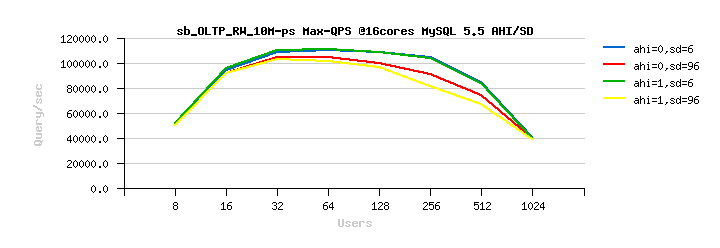

All my.conf setting I've used during the tests was already presented within an initial article, so let's go directly to details about special tuning setting. While many parameters are pretty important (like use or not use O_DIRECT, choose a right REDO log and Buffer Pool size, flush or not flush neighbor pages, right I/O capacity, etc.) -- but all these ones less or more predictable, and once chosen right, not giving you many troubles (unless something did not change in your workload or data volume). But there are two of them which are directly related to internal InnoDB contentions and should be very well tested before applied:

Adaptive Index Hashing (AHI, innodb_adaptive_hash_index, default=1) is helping a lot and in many cases, but sometimes supporting very badly concurrent access or concurrent changes which is expressed by growing contention on the "btr_search_latch" rw-lock. Such a contention may happen not only on a mixed Read-Write (RW) activity, but on a Read-Only (RO) as well. And there is no general rule, and the only real test may give you a real answer (while "generally" you may expect a higher contention on a RW workload rather on a RO)..

Spin Wait Delay (SD, innodb_spin_wait_delay, default=6) value is

used on spin wait loops of mutexes and rw-locks in InnoDB. The setting

is giving the "max" value for the random wait delay interval chosen on

spin wait (expecting a mutex or rw-lock will be free soon, InnoDB is

"spinning" (looping) on CPU involving "pause" instructions and trying to

acquire the lock in the next loop). In fact this solution is "hiding"

contention rather solving it, and may use CPU time a lot just for

"spinning" (while we can do some other and more useful work) -- but in

any case it gives you an expected benefit, and InnoDB locking is going

really faster, and usually you're finally obtaining a better performance

within your workload.. However, again, there is no "silver bullet", and

the "right" value cannot be suggested generally, and that's why in MySQL

5.6 the default value remaining the same as in 5.5, while a bigger one

can be more appropriate, but the result may still vary depending on your

workload, CPU number, and CPU frequency on your server, and so on. At

least the variable is dynamic and you can test it live on your workload

(as I've made in RO testing, looking

for the most optimal value).. I know that on my server this value

may vary from 6 (default) to 128, and give a pretty interesting

performance impact! For MySQL 5.6 tuning os this setting is simply a

must, and you'll see how on one of the presented tests performance is

dropping already on 64 concurrent user sessions (when default setting is

used (sd=6)), while with a more appropriated one (sd=96) performance

remains much more stable and much more higher!..

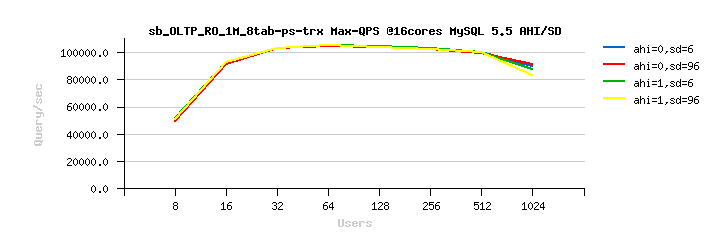

So far :

- the following graphs are representing test results obtaining with variations: AHI= 1 or 0, SD= 6 or 96

- in fact there are 4 combinations, and each one is named like "ahi=n,sd=m", where n is 1 or 0, and m is 6 or 96

- on configurations with few CPU cores having a smaller SD value seems to be better in most of cases, while with more CPU cores a higher SD value is preferable (while there is may be some exceptions as well)

- so, don't hesitate to test and you'll get the right answer for your workload

Then, for every test case the following graphs are presented :

- Best-to-best TPS/QPS performance : there are both, TPS and QPS graphs, so you're able to see a ratio between transactions and queries/sec -- personally I prefer to see queries/sec performance, as it speaks much better (reaching near 300K QPS with all SQL and MySQL layers overhead is telling more than 20K TPS, except if you're aware about what exactly your transactions are doing)..

-

And then impact of SD and AHI pair within each CPU cores configuration:

- Tuning impact @16cores

- Tuning impact @32cores

- Tuning impact @32cores with HT-enabled

Hope I did not forget anything. Here are the results:

Sysbench OLTP_RO

Best-to-best TPS/QPS performance:

Tuning impact @16cores:

Tuning impact @32cores:

Tuning impact @32cores with HT-enabled:

Sysbench OLTP_RO 8-tables

Best-to-best TPS/QPS performance:

Tuning impact @16cores:

Tuning impact @32cores:

Tuning impact @32cores with HT-enabled:

Sysbench OLTP_RO-trx

Best-to-best TPS/QPS performance:

Tuning impact @16cores:

Tuning impact @32cores:

Tuning impact @32cores with HT-enabled:

Sysbench OLTP_RO-trx 8-tables

Best-to-best TPS/QPS performance:

Tuning impact @16cores:

Tuning impact @32cores:

Tuning impact @32cores with HT-enabled:

Sysbench OLTP_RO Point-Selects

Best-to-best TPS/QPS performance:

Tuning impact @16cores:

Tuning impact @32cores:

Tuning impact @32cores with HT-enabled:

Sysbench OLTP_RO Point-Selects 8-tables

Best-to-best TPS/QPS performance:

Tuning impact @16cores:

Tuning impact @32cores:

Tuning impact @32cores with HT-enabled:

Sysbench OLTP_RO Simple-Ranges

Best-to-best TPS/QPS performance:

Tuning impact @16cores:

Tuning impact @32cores:

Tuning impact @32cores with HT-enabled:

Sysbench OLTP_RW 10M

Best-to-best TPS/QPS performance:

Tuning impact @16cores:

Tuning impact @32cores:

Tuning impact @32cores with HT-enabled:

Sysbench OLTP_RW 10M 8-tables

Best-to-best TPS/QPS performance:

Tuning impact @16cores:

Tuning impact @32cores:

Tuning impact @32cores with HT-enabled:

SUMMARY :

- as you can see, Spin Wait Delay is playing a very critical role for performance on your workload..

- as well enabling or disabling AHI may bring some unexpected regression, select it right..

- using or not using Hyper Threading (HT) for MySQL server is very depending on a workload too..

- so, the main rule is: keep in mind all these settings and tune your MySQL 5.6 server to the best! ;-)

- more to come..

to be continued..

Rgds,

-Dimitri

Monday, 11 February, 2013

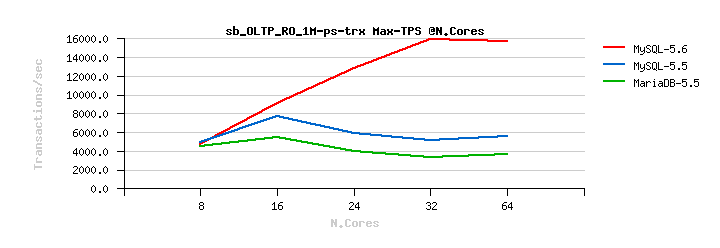

MySQL Performance: MySQL 5.6 GA and MySQL 5.5 scalability

As promised, this is the first part of details about MySQL 5.6 vs 5.5 benchmark results I've published earlier last week. The following graphs are representing scalability results obtained for both MySQL versions on the published tests (and I have yet more test results to present to you, but these test's are still running)..

Few remarks based on comments and discussions I've got since then:

- I'm using a "true" 32cores server (true 32 cores, each one yet has 2 threads (HT), so 64 threads in total)

- I'm not using "CPU threads" terminology as I'm finding it confusing (for ex. when you're reading "16 CPU threads" you may not really know if there were 16cores with HT-disabled, or 8cores with HT-enabled)..

- during all the tests I've disabled HT (as it took days and days more to test every case I'm interesting in)..

- also, in many tests on 5.5 until now I've observed a worse performance when HT is enabled.. (well, depends on a workload, and yes, there was an improvement made too over a time)

- MySQL 5.6 is running more often better when HT is enabled (but again, depends on a workload)

- so, to compare apples-to-apples and reduce my test cycles, HT was always disabled, except on the last one - "64cores" which you should read as "32cores with HT enabled" -- I've made an exception for this one just to see what is waiting us ahead after 32cores ;-)

- so for all tests when you're reading "N cores" it means exactly N physical CPU cores (HT disabled), except for 64cores = 32cores HT-enabled (which was just too long for graph legends)..

- also, during all the tests both MySQL servers are running with "jemalloc" library instead of default malloc, as it's the best malloc on Linux today and I'm using it during all my tests since probably more than 2 years now (but don't think to precise, as it's a part of my "default" config, so did not suppose that somebody is not using it when running MySQL Server on Linux.. - while I always have a dedicated slide in my MySQL Performance presentations ;-))

- for the same reasons the MySQL config parameters I've added in the previous article are not Sysbench specific or oriented -- it's just a "start kit" I'm using by default, and then adapt according a workload.. - and for Sysbench load such a config is more than ok ;-)

- if anything else I forgot to mention - please, just ask ;-)

And now the results.

Don't think you'll need any comments.. Except maybe just this one:

- MySQL 5.5 was already scaling less or more well on small servers, up to 16cores..

- except that if you need more power and have more cores available on your host, there was no way to get better just by adding cores.. (but at least there was no dramatic regression anymore as we observed just few years before ;-))

- MySLQ 5.6 is going way further now, and able to show you a pretty visible performance improvement when more cores are available on your server!

- We know, we're not perfect yet.. - but a huge gap between MySQL 5.6 and 5.5 is already here! ;-)

- And it's not only about better performance with more CPU/cores, there are also a lot of new features + improved design in many many places (and if you've missed something, there are long and short lists available, as well a very good DBA & developer guide with many details)

- So, don't wait more, and start to use your servers with MySQL 5.6 on their full power right now!

Sysbench OLTP_RO :

Sysbench OLTP_RO-trx :

Sysbench OLTP_RO Point-Selects :

Sysbench OLTP_RO Simple-Ranges :

Sysbench OLTP_RW :

INSTEAD OF SUMMARY :

- open your TODO right now..

- and just write on top: Start upgrade to MySQL 5.6 ASAP !!! ;-)

to be continued..

Rgds,

-Dimitri

Tuesday, 05 February, 2013

MySQL Performance: MySQL 5.6 GA -vs- MySQL 5.5 @32cores

As you already know, MySQL 5.6 GA is announced today and, there is no

doubt, it the best ever MySQL release for today!

If you've missed

something about a long list of new features coming with MySQL 5.6, I'm

inviting you to read excellent summaries written by Rob

and Peter,

as well many other detailed articles coming this week..

While my

story will be, of course, about performance ;-)

I'll present here

the results I've obtained on Sysbench workloads (Read-Only and

Read-Write) and will update this article with more details along the day

(as some other tests are still running)..

For my tests I'm using:

HW

Config :

- Server : 32cores bi-thread (HT) Intel 2300Mhz, 128GB RAM

- OS : Oracle Linux 6.2

- FS : XFS mounted with "noatime,nodiratime,nobarrier,logbufs=8"

- MySQL : 5.6-GA, latest 5.5

MySQL Config :

#-------------------------------------------------- max_connections = 4000 key_buffer_size = 200M low_priority_updates = 1 sort_buffer_size = 2097152 back_log = 1500 query_cache_type = 0 # files innodb_file_per_table innodb_log_file_size = 1024M innodb_log_files_in_group = 3 innodb_open_files = 4000 table_open_cache = 8000 table_open_cache_instances = 16 # buffers innodb_buffer_pool_size = 32000M innodb_buffer_pool_instances = 32 innodb_log_buffer_size = 64M join_buffer_size = 32K sort_buffer_size = 32K # tune innodb_checksums = 0 innodb_doublewrite = 0 innodb_support_xa = 0 innodb_thread_concurrency = 0 innodb_flush_log_at_trx_commit = 2 innodb_flush_method = O_DIRECT innodb_max_dirty_pages_pct = 50 innodb_use_native_aio =1 innodb_stats_persistent = 1 innodb_spin_wait_delay = 6 / 96 # perf special innodb_adaptive_flushing = 1 innodb_flush_neighbors = 0 innodb_read_io_threads = 16 innodb_write_io_threads = 4 innodb_io_capacity = 2000 innodb_purge_threads =1 innodb_adaptive_hash_index = 1 / 0 # Monitoring innodb_monitor_enable = '%' performance_schema = ON performance_schema_instrument = '%=on' #--------------------------------------------------

MySQL Tuning :

- the main key configuration options here which are making the difference are AHI (innodb_adaptive_hash_index) and Spin Delay (innodb_spin_wait_delay) -- while others are just good enough most of the time for the tested workloads..

- about the impact of the AHI I've wrote a lot in the past (and you may find all articles here), and the main dilemma with AHI is "to use or not to use".. - in many cases it helps a lot to avoid block locking and speed-up index access, but on a high concurrency may become a show stopper due high contention on its "btr_search_latch" rw-lock..

- while Spin Delay setting is requiring a very particular attention within MySQL 5.6, because it plays a critical role in managing of hot internal mutex and rw-locks contentions and may make you a difference in performance by 100% very easily ;-) (and you may find here all details about, while I may just add that there is no "silver bullet" e.g. no "universal" value which will be good enough for most cases -- all depends on your workload! - and that's why the default value (6) is the same as in MySQL 5.5, while it'll be up to you to find the most optimal for your workload; and as the setting is dynamic, you may try it live )..

- so, during my testing I was curious to see the best pair of AHI and Spin Delay settings for each workload

- then, keeping in mind scalability limits in MySQL 5.5 and 5.6, I'm replaying all the tests with MySQL server limited to run on 8, 16, 32 and 64 cores (64 means 32cores with HT-enabled, while on all other tests HT is disabled (only one thread per core))

So far, let me show you first the most fun comparison - the Best-to-Best - where the highest results from one engine (obtained on a given test and on any of tested configurations) compared to another one. To remain "compatible" with historical MySQL results I'll present results first in TPS (transactions/sec), and then switch to QPS (query/sec) which I'm preferring ;-)

The first test is the classic Sysbench OLTP_RO (Read-Only):

Sysbench OLTP_RO:

Then the "fun" case with the same OLTP_RO test, but using the "feature" that table open/close is not involved for tables within a transaction (the full explanation about is here )

Sysbench OLTP_RO-trx :

Then, "point-selects" - this kind of reads was historically pretty good on MySQL, well improved on MySQL 5.5, but it's still a pleasure to see that even here MySQL 5.6 is showing a difference!

Sysbench OLTP_RO Point-Selects :

The most "painful" test - Simple-Ranges (and you may read from here why it was so painful and frustrating during MySQL 5.6 development, and understand why the gap between MySQL 5.6 and 5.5 is so big ;-))

Sysbench OLTP_RO Simple-Ranges :

And then the Read-Write test, which is not the most heavy RW workload, but still very representative to fire internal MySQL and InnoDB contentions and other limits:

Sysbench OLTP_RW :

The decreasing TPS level since 256 concurrent users is still wishing to get a higher level.. - but it's already x2 times better comparing to MySQL 5.5, and, after all, work is continuing, and then next MySQL version will be yet more better, you may just trust me ;-)) (for the time being you may get a more stable and higher performance level on bigger number of concurrent users by tuning innodb thread concurrency setting (see my previous article with results about))..

While today I'm simply enjoying to see MySQL 5.6 GA release! ;-)

- it's really the best ever MySQL version today!

- it's way faster than MySQL 5.5 on many workloads!

- it's way better designed!

- it's way more "tunable"!

- it's way more "transparent" for performance analyzing!

- it's way better instrumented!

- it's just way better than everything you saw until now! ;-)

- and it comes with many new features in MySQL code you even did not hear around before 5.6 ;-)

So, my the only question : What are you waiting to test your workload on MySQL 5.6 and prepare your database migration?..

to be continued...

Rgds,

-Dimitri