« November 2013 | Main | September 2013 »

Thursday, 31 October, 2013

dim_STAT v.9.0-u13 Core Update (Halloween Edition ;-))

This is a special Halloween Edition of dim_STAT Core Update-13 available (as it's Update #13 it was hard to resist to not ship it exactly for Halloween day ;-))

The Update contains all the goodies from the #12 version plus "one more thing" ;-)

- Bookmark Presets are now introduced to the Multi-Host too (extended or not)

- And also directly accessible from the full Bookmark list on a Single-Host Analyze a well - you should be able to see all your preferred graphs within a one single click now ;-)

- Presets can be removed now and extended (you may at any time add any new Bookmark(s) to existing Preset)

- and just for fun, there is a possibility now to include a customized "welcome" message on the dim_STAT Home Page -- the code is testing for a presence of the "welcome.html" file in the /opt/WebX/apps/dim_STAT directory.. - and it exists, it'll be automatically included within a Home Page (in case you want to add some guide lines to your users, instructions, daily message - up to you ;-))

The installation instructions are exactly the same as before, except that I was too paranoid in the past with Core Updates when it was yet experimental ;-)) -- in fact you don't need to stop dim_STAT Server to apply a Core Update.. - you can do it live without any problem ;-)) (and I'm doing it every time on my servers) -- however, before to upgrade, don't forget to backup your existing /opt/WebX/apps content!!! (we never know ;-))

Enjoy!

Rgds,

-Dimitri

Thursday, 10 October, 2013

My Files for MySQL Tech Day @Paris today

|

As promised my slides from the morning session and files for PFS lab

this afternoon:

As well you may follow the event with #mysqltechday @twitter ;-) Rgds, -Dimitri |

My Slides from MySQL Connect 2013

|

As promised, my slides from MySQL Connect talks are now uploaded to the

#OOW13 site and also available from here:

Thanks to all who attended MySQL Connect this year, for all great talks, discussions, suggestions and your support! Rgds, -Dimitri

|

Friday, 04 October, 2013

MySQL Performance: The Road to 500K QPS with MySQL 5.7

This article is providing the details to the 500K

QPS with MySQL 5.7 benchmark results published earlier and explained

during my talk at MySQL Connect..

Looking back in the history of

MySQL / InnoDB improvement, you may easily realize that there was no any

so big speed-up(s) between releases in read-only (RO) performance as

they came with MySQL 5.6. And it's easy to understand as well that

without having a good level of scalability on read-only, it's hard to

expect to reach a good level on read+write (specially when reads are

dominating ;-))

However, we were so happy with RO performance in

MySQL 5.6, that with 5.7 we turned our main focus on the read+write

(RW), because on big data volumes things are not yet going as we wish..

But the RW dependency on RO jumped up once more again, and then InnoDB

team came with few more improvements to push the max QPS level in 5.7

yet more far.

But let's take the things by order..

In fact

in MySQL 5.6 on RO workload dominating internal contentions are

- Single table used only: MDL, trx_sys and lock_sys (InnoDB)

- Many tables used: trx_sys and lock_sys (so, InnoDB mainly)

For MySQL 5.6 our best obtained result was around 250K QPS, which on that time was the best ever seen on MySQL / InnoDB with SQL queries ;-)

However, it was only possible to achieve if READ-ONLY TRANSACTIONS were used (a new feature in MySQL 5.6), and again, if AUTOCOMMIT=1 was used, otherwise CPU cycles are simply wasted to process START TRANSACTION / COMMIT statements, and you're loosing in overall performance for sure..

So, the very first improvement introduced within MySQL 5.7 was an auto-discovery of RO transactions (in fact any InnoDB transaction is considered as read-only until any DML is not involved within it).. - this simplified a lot the RO transactions feature itself, and life for users and developers too - no need to take care anymore if RO transaction statement was used or not. But again, you cannot reach the max possible potential QPS on MySQL with this feature as CPU time is still wasted by processing transactions begin / end statements..

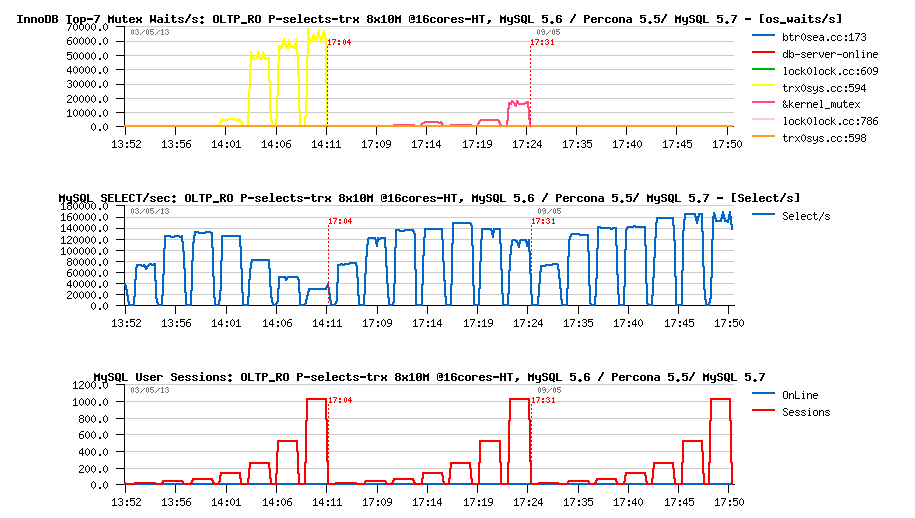

On the same time Percona came with a different solution to resolve "transaction list" management (TRX-list) and as the result lower the trx_sys mutex contention within InnoDB. Percona's solution kept better a high load on Point-Selects with transactions workload, but MySQL 5.7 was yet more better here (but I was not able to publish the results of 5.7 as its code was not yet public).. So, at least I'm able to do it now :

Observations :

- the same RO Point-Select-TRX test (using transactions) on 8-tables is executed on MySQL 5.6, Percona 5.5, and then MySQL 5.7 (results are from May.2013)

- and as you can see, we're far here from the peak 250K QPS obtained on the same 16cores-HT configuration..

- MySQL 5.6 is hitting a contention on the trx_sys mutex and QPS is decreasing since 64usr..

- Percona 5.5 is keeping a load longer, and QPS decrease is starting only since 512usr..

- while MySQL 5.7 already kept on that time QPS without any decrease at all (and even with a higher number of concurrent users which you don't see on these graphs)..

However, it's clear that if one is willing to get the MAX of potential RO QPS rate with MySQL - transactions should be avoided..

So, let's see where it was our MAX QPS in May-2013.

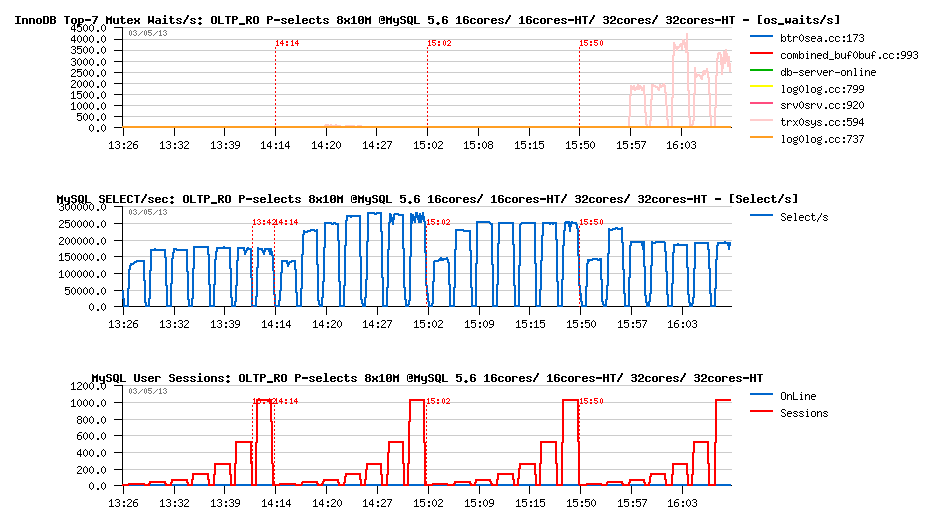

Same Point-Select 8-tables test, but without transactions @MySQL 5.6 :

Observations :

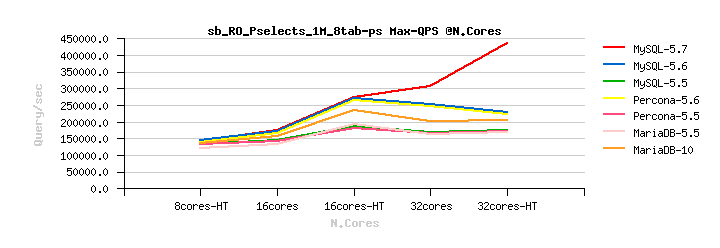

- the test is executed with keeping MySQL 5.6 running on 16cores only, then on 16cores-HT, then 32cores, and 32cores-HT

- as you can see, the Max QPS is even bigger than expected - it's 275K QPS on MySQL 5.6

- and the Max result is reached on 16cores-HT

- while result on 32cores is not better than on 16cores-HT (due internal contentions, and contentions are better managed here by having 2 CPU threads on the same core - so the true concurrency remains on the level of 16 threads, and not 32 as it will be on 32cores)..

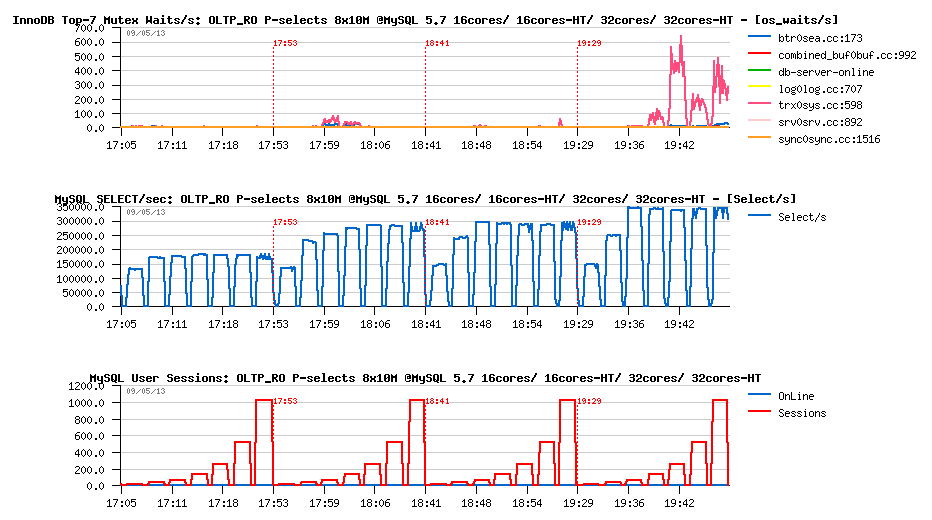

While the same test on MySQL 5.7 was looking pretty differently, as for that time contention on the lock_sys mutex was already lowered in 5.7, and trx_sys mutex related code also got its first changes:

Observations :

- first of all you may see that on the same 16cores-HT configuration 5.7 was already performing better than 5.6

- then, performance yet more improved on 32cores configuration!

- and then reaching its Max 350K QPS on 32cores-HT config!!

- for the first time in history on this particular (and most aggressive) RO workload we got a better result on 32cores -vs- 16cores-HT, and yet more improved with hyper-threading enabled (on 32cores-HT).. - awesome! ;-)

Comparing to all we saw until now, that was really very good!! ;-)

From the other hand, it was clear as well there is still a room for improvement. Contention on trx_sys was still remained. We did not use a full CPU power to do a useful work (still a lot of CPU cycles were spending on lock spinning).. But the result was already better than ever, and way better than 5.6, so there was no valid reason to continue digging to improve performance here, while our main focus was on RW workloads where we have even more huge room for improvement..

By the end of May, during our Perf-Meeting, Sunny added few changes more around trx_sys mutex contention, and our Max QPS moved to 375K QPS(!) since then - should be more than enough as improvement for 5.7, right? ;-)

On the same time we continued exchange with Percona team who proposed to manage TRX-list in a different way.. - the solution looked interesting, but on 5.5 code base was unable to show a higher QPS, and on 5.6 code base (once Percona Server 5.6 was available) the Max QPS was not bigger than in MySQL 5.6. However, the discussion involved a very interesting point: what will be the impact on RO performance if there will be some RW workloads running in parallel?.. And even if MySQL 5.7 code was still running better within the same test conditions, the impact was very visible (you may find my analyzes about here, while, again, I was not able to present 5.7 results during this time as the code was not public yet - may provide it in one of the next articles now)..

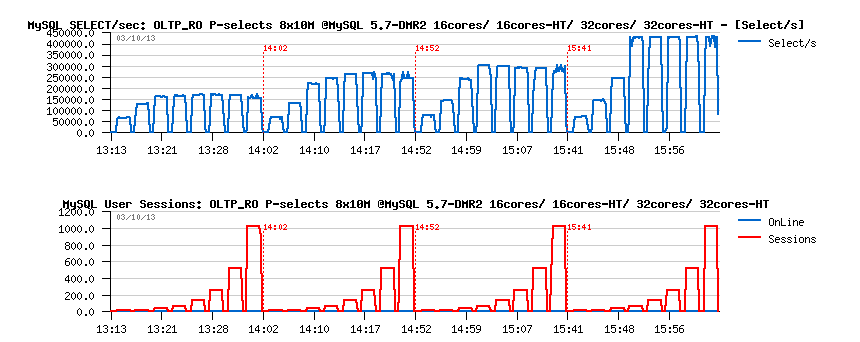

And as any pure RW workload was impacted here as well, there was enough of motivations to finally remaster the whole TRX-list related code in a way that Sunny wanted to see it from a long time.. And this experience was simply amazing! ;-)) Day after day we enjoyed to see our QPS graphs going higher and higher.. - till reached 440K QPS(!) on the same 32cores-HT server.

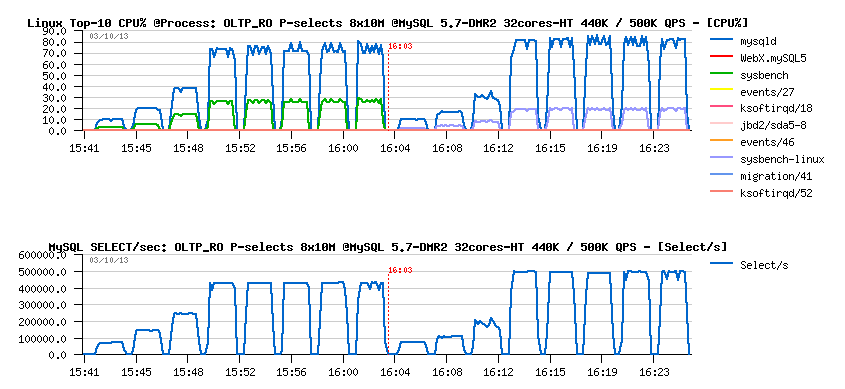

Point-Selects 8-tables @5.7-DMR2 :

No comments.. ;-))

However, there was a small mystery around.. - we intentionally with Sunny analyzed all bottlenecks and impact of code changes via different tools, and on some of the tests for my big surprise Sunny observed higher QPS levels than me.. This "mystery" was related to the following factors:

- on a high load the 5.7 code is running now near the limits of the HW capacity (mainly CPU), so every instruction matters!

- and the difference if UNIX socket or IP port is used becomes very visible!

- and Sysbench itself is using 30% of CPU time, but if the older Sysbench version is used (with a smaller code path) for the same test load it'll consume only 20% CPU, leaving additional 10% to the MySQL server ;-)

- so, in the same test workload, by using UNIX socket instead of IP port + replacing Sysbench-0.4.13 by Sysbench-0.4.8 we're out-passing 500K QPS(!) - easy, right? ;-))

Let's compare "before" and "after" :

Observations :

- Lowered CPU% usage by Sysbench

- resulting in a higher CPU time availability for MySQL server

- and we're hitting 500K QPS..

What else?.. ;-)

I may only add: kudos Sunny & whole MySQL Dev Team! ;-)

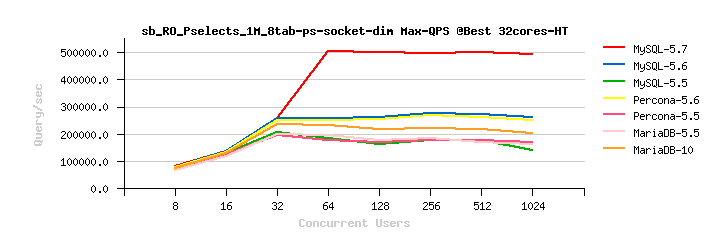

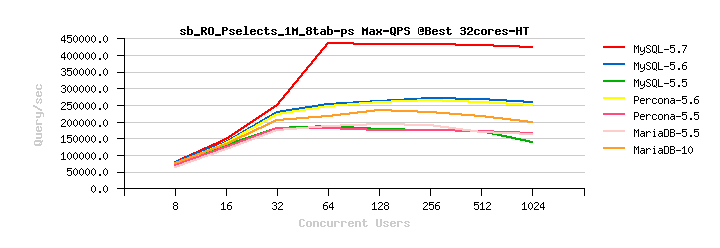

Let's get a look now on Max QPS obtained on the same Point-Select 8-tables workload on other engines as well. I've used the latest ones available on beginning of Sep.2013 :

- MySQL-5.7.2 (DMR2)

- MySQL-5.6.14

- MySQL-5.5.33

- Percona Server 5.6.13-rc60.5

- Percona Server 5.5.33-rel31.1

- MariaDB-10.0.4

- MariaDB-5.5.32

Each engine is tested within the following configuration variations:

- CPU taskset: 8cores-HT, 16cores, 16cores-HT, 32cores, 32cores-HT

- Concurrent Users Sessions: 8, 16, 32 ... 1024

- InnoDB Spin Wait Delay: 6, 96

Then the best results from any given combination for every engine are compared -vs- others. And I'm obtaining the graph which I've already presented in the previous article:

Few comments :

- No need to comment the gap on the MySQL 5.7 results, it's just evident..

- then, what is interesting that none of engines based on the MySQL 5.5 code base did not get any closer to the MySQL 5.6 results..

- which is just confirming that only since the MySQL 5.6 code base was used, Percona Server reached the 5.6 level, while MariaDB-10 is still on the road..

- so, the MySQL 5.6 code base rocks, no doubt!

- and MySQL 5.7 simply rocks twice! ;-))

And what about scalability?

The answer is simple: MySQL 5.7 is the only one scaling here.

Then, if IP port is used and a more "heavy" Sysbench-0.4.13, the results are the following:

QPS is just slightly lower, but the tendency is exactly the same..

And scalability is pretty similar:

More other results will come, so stay tuned ;-)

NOTE : for a single-table-bound workloads the story is not yet good:

- decreased contentions on the InnoDB level made other contentions much more visible

- and MDL contentions became even more dominating when the load is bound on a single table..

- this is as expected, and should be fixed within the next DMRs..

We have yet many challenges ahead of us ;-)

For reference, my HW config for presented tests was the following:

- Server : 32cores-HT (bi-thread) Intel 2300Mhz, 128GB RAM

- OS : Oracle Linux 6.2

- FS : EXT4 mounted with "noatime,nodiratime,nobarrier"

my.conf:

max_connections=4000 key_buffer_size=200M low_priority_updates=1 table_open_cache = 8000 back_log=1500 query_cache_type=0 table_open_cache_instances=16 # files innodb_file_per_table innodb_log_file_size=1024M innodb_log_files_in_group = 3 innodb_open_files=4000 # buffers innodb_buffer_pool_size=32000M innodb_buffer_pool_instances=32 innodb_additional_mem_pool_size=20M innodb_log_buffer_size=64M join_buffer_size=32K sort_buffer_size=32K # innodb innodb_checksums=0 innodb_doublewrite=0 innodb_support_xa=0 innodb_thread_concurrency=0 innodb_flush_log_at_trx_commit=2 innodb_max_dirty_pages_pct=50 innodb_use_native_aio=1 innodb_stats_persistent = 1 innodb_spin_wait_delay= 6 / 96 # perf special innodb_adaptive_flushing = 1 innodb_flush_neighbors = 0 innodb_read_io_threads = 4 innodb_write_io_threads = 4 innodb_io_capacity = 4000 innodb_purge_threads=1 innodb_adaptive_hash_index=0 # monitoring innodb_monitor_enable = '%' performance_schema=OFF

In case you need it, Linux Sysbench binaries available from here:

- Sysbench-0.4.13-lux86

- Sysbench-0.4.8-lux86

The Sysbench command used to run Point-Selects test via UNIX socket (starting 8 processes in parallel):

LD_PRELOAD=/usr/lib64/libjemalloc.so /BMK/sysbench-0.4.8 --num-threads=$1 --test=oltp --oltp-table-size=10000000 \

--oltp-dist-type=uniform --oltp-table-name=sbtest_10M_$n \

--max-requests=0 --max-time=$2 --mysql-socket=/SSD_raid0/mysql.sock \

--mysql-user=dim --mysql-password=dim --mysql-db=sysbench \

--mysql-table-engine=INNODB --db-driver=mysql \

--oltp-point-selects=1 --oltp-simple-ranges=0 --oltp-sum-ranges=0 \

--oltp-order-ranges=0 --oltp-distinct-ranges=0 --oltp-skip-trx=on \

--oltp-read-only=on run > /tmp/test_$n.log &

The Sysbench command used to run Point-Selects test via IP port (starting 8 processes in parallel):

LD_PRELOAD=/usr/lib64/libjemalloc.so /BMK/sysbench-0.4.13 --num-threads=$1 --test=oltp --oltp-table-size=10000000 \

--oltp-dist-type=uniform --oltp-table-name=sbtest_10M_$n \

--max-requests=0 --max-time=$2 --mysql-host=127.0.0.1 --mysql-port=5700 \

--mysql-user=dim --mysql-password=dim --mysql-db=sysbench \

--mysql-table-engine=INNODB --db-driver=mysql \

--oltp-point-selects=1 --oltp-simple-ranges=0 --oltp-sum-ranges=0 \

--oltp-order-ranges=0 --oltp-distinct-ranges=0 --oltp-skip-trx=on \

--oltp-read-only=on run > /tmp/test_$n.log &

Rgds,

-Dimitri