Friday, 08 June, 2012

MySQL Performance: Binlog Group Commit in 5.6

The binlog sync contention has been a problem in MySQL for a long time.. Well, it was less visible a few years ago, when MySQL performance was quite limited even without the binlog enabled ;-)) However, with all the latest performance improvements that came with MySQL 5.5 and now with 5.6, it became unacceptable to see all these excellent goodies simply killed once a user enables the binlog..

Good news -- the latest MySQL 5.6 labs release comes with the freshly released Binlog Group Commit, which largely solves this issue. You may find all the details in Mat's article, while my intention in this post is just to extend a little bit the already presented benchmark results..

Well, the true performance problem with the MySQL binlog comes not really when it's enabled, but when "sync_binlog" is set to 1 (meaning the binlog is flushed to disk on every binlog write).. Usually such a setting is used when a user wants to avoid any possible data loss; otherwise a value other than 1 may be used. And what is curious: setting sync_binlog=100, for ex., may give you the same performance as sync_binlog=0 (where MySQL never forces a flush and leaves it to the OS), which may be not far from the initial "base line" performance level.. Setting sync_binlog=1, on the other hand, usually gave you an x2-x4 times or bigger performance regression compared to the "base line", and the main cost comes from the number of syncs/sec MySQL is doing (as your storage may quickly hit its I/O performance limits here). So, the "group commit" solution comes naturally here (similar to InnoDB group commit in redo log writes) -- the more writes you can group within a single "sync", the better performance you may expect. However, as usual, it all depends on the implementation ;-))
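To make the fsync argument concrete, here is a toy discrete-time model in Python. It is an illustration under simplifying assumptions, not MySQL's BGC code (the real 5.6 implementation is organized around separate flush, sync and commit stages): every commit must wait for a binlog fsync as with sync_binlog=1, the storage completes one fsync at a time, and with grouping a single fsync covers every commit that is queued when it starts. The function `simulate_commits` and the 2 ms fsync latency are hypothetical.

```python
from collections import deque


def simulate_commits(users: int, fsync_ms: int, think_ms: int,
                     duration_ms: int, group_commit: bool) -> int:
    """Count commits made durable within duration_ms (toy model, 1 ms ticks).

    Every commit must wait for a binlog fsync (as with sync_binlog=1) and the
    device runs one fsync at a time. Without grouping each fsync covers one
    commit; with grouping it covers every commit queued when it starts.
    """
    next_commit_at = [0] * users   # tick at which each user issues a commit
    waiting = deque()              # commits written but not yet synced
    in_flight = []                 # commits covered by the running fsync
    fsync_done_at = None
    durable = 0

    for now in range(duration_ms + 1):
        # 1) A finished fsync makes every commit it covered durable.
        if fsync_done_at is not None and now >= fsync_done_at:
            durable += len(in_flight)
            for user in in_flight:
                next_commit_at[user] = now + think_ms
            in_flight = []
            fsync_done_at = None

        # 2) Users whose think time has elapsed write a commit to the binlog.
        for user in range(users):
            if next_commit_at[user] is not None and next_commit_at[user] <= now:
                waiting.append(user)
                next_commit_at[user] = None

        # 3) An idle device starts the next fsync.
        if fsync_done_at is None and waiting:
            if group_commit:
                in_flight = list(waiting)   # one fsync for the whole queue
                waiting.clear()
            else:
                in_flight = [waiting.popleft()]  # one fsync per commit
            fsync_done_at = now + fsync_ms

    return durable


serial = simulate_commits(32, fsync_ms=2, think_ms=0, duration_ms=1000, group_commit=False)
grouped = simulate_commits(32, fsync_ms=2, think_ms=0, duration_ms=1000, group_commit=True)
print(serial, grouped)  # 500 16000
```

With zero think time the grouped run makes 32x more commits durable with the same number of fsyncs; as think time grows, fewer commits are queued per fsync and the gain shrinks. Note that sync_binlog=N (N > 1) reduces syncs by accepting a window of possible loss on a crash, rather than by batching waiting commits.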

So, what to say about the binlog group commit (BGC) in the latest MySQL 5.6?..

Many tests were executed while BGC was getting better and better, but I'd like to present just a few which attracted my own curiosity ;-)) While on "big" and powerful servers the performance gain was very impressive and pretty easy to obtain, I've also focused on a "small" 12-core server with a very modest HDD + SSD config.

Test server:

- 12 cores bi-thread 2900MHz (small, but really fast), 72GB RAM

- OS: Oracle Linux 6.2

- DATA on x2 HDD, XFS

- REDO and binlog on single SSD, XFS

Test cases:

- binlog OFF

- binlog ON, sync_binlog=0

- binlog ON, sync_binlog=1

Test scenario:

- MySQL versions: 5.6.4, 5.6-labs-April, 5.6-labs-June

- Workloads: Sysbench OLTP_RW, dbSTRESS RW-Upd

- Concurrent users: 32

NOTE: I'm testing only 32 concurrent users on this server because a higher load with sync_binlog=1 hits overall I/O limits at the storage level, and historically the 32-user workload was also the most "free of internal contentions" :-)

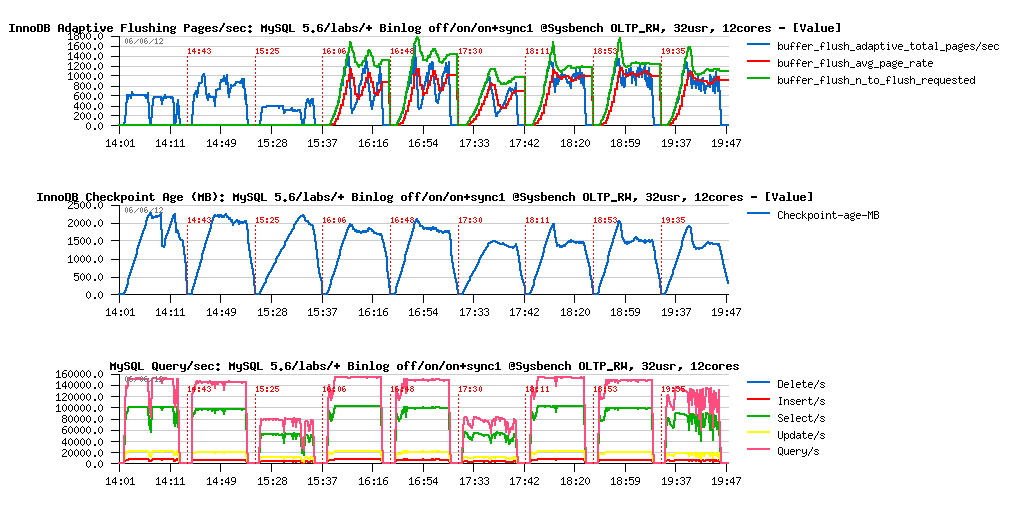

So, on the following graphs you'll see 3 test cases (binlog OFF, ON, ON+sync_binlog=1) on 3 MySQL versions. Tests were executed sequentially, so you can see 3 tests executed on MySQL 5.6.4, then on 5.6-labs-April, then on 5.6-labs-June. There are also 3 graphs, representing in order:

- Adaptive flushing activity (Pages/sec)

- Checkpoint Age in MB

- Executed query/sec (QPS)

Sysbench OLTP_RW:

Observations :

- Looking at these graphs, it's great to see the overall RW performance improvements that came in MySQL 5.6 over this year:

- 5.6.4 came with better RW performance due to the removed kernel_mutex contention..

- since 5.6-labs-April, improved Adaptive Flushing came into the game (and you don't see periodic QPS drops anymore)

- then 5.6-labs-June came with even more improved Adaptive Flushing (you can see flushing activity is more stable now) and Binlog Group Commit -- you can see nearly x2 times better performance now when sync_binlog=1 !!

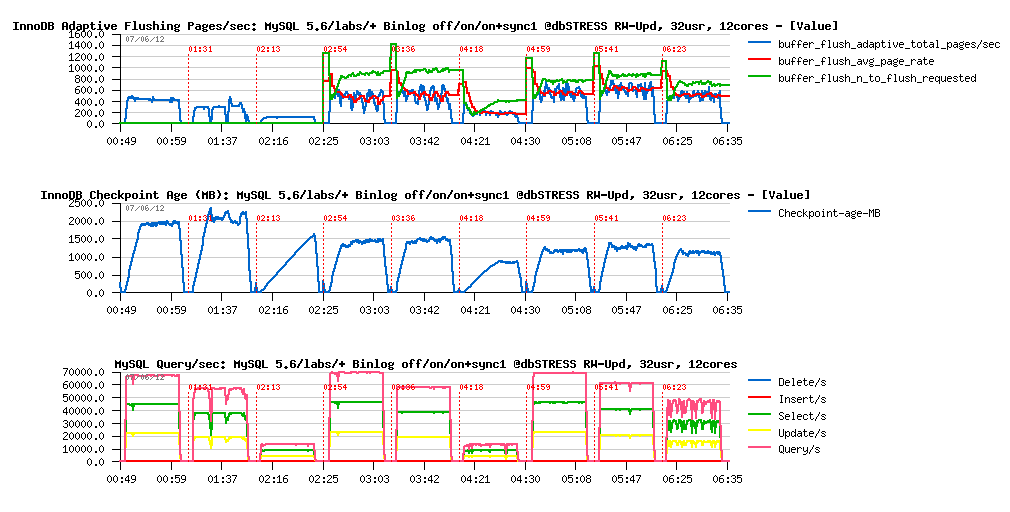

dbSTRESS RW-Upd:

Observations :

- dbSTRESS represents a more aggressive RW workload, so the BGC impact here is much more important ;-)

- more than x3 times better performance on 5.6-labs-June with sync_binlog=1 now !!

However, what I don't really like in both test workloads is the QPS instability in 5.6-labs-June when sync_binlog=1 -- it may be related to some I/O level limits, or maybe to some kind of internal contention as well. So, this is yet to be investigated later..

Then, of course, the picture would not be complete for my curiosity if I didn't test, in the same conditions, Percona Server 5.5, which already integrates a similar solution developed by the MariaDB team (MariaDB proposed its own BGC code before the MySQL team, and while the ideas are similar, the implementations are not at all.. - but what about performance?..)

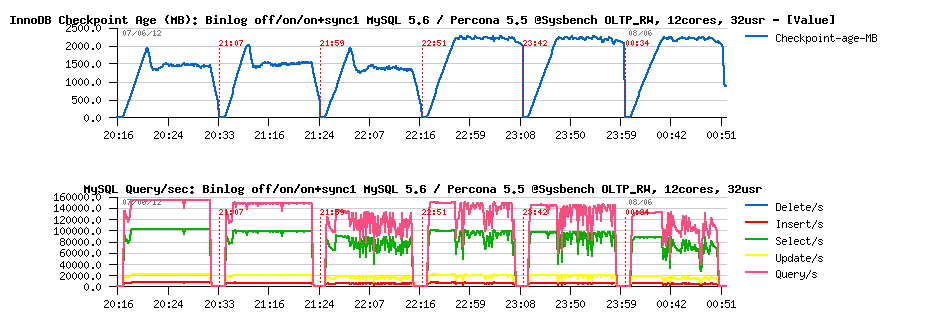

So, I've executed the same tests on Percona Server 5.5, keeping exactly the same config settings except changing adaptive flushing to "keep_average" (which is the best algorithm for this option in XtraDB), and here are the results -- similar graphs, but MySQL 5.6 first, then Percona 5.5 on the same test cases:

Sysbench OLTP_RW:

Observations :

- it seems Percona's "keep_average" mode for adaptive flushing is not really working well here.. (and I've also tested the "estimate" option -- same result)..

- then, for the test case with binlog + sync_binlog=1, we may say that BGC in MySQL 5.6 gives the same or better performance vs Percona 5.5

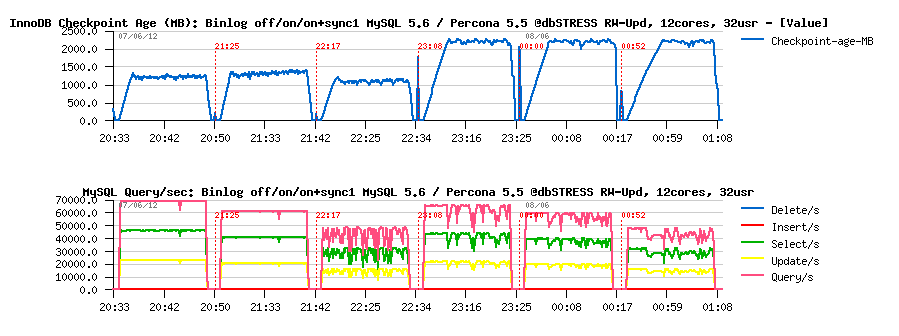

dbSTRESS RW-Upd:

Observations :

- the comparison is similar to the previous test..

- and so is the performance with sync_binlog=1..

NOTE : I'm intentionally not presenting here any results on a more powerful server (32 cores or more), as it would expose Percona Server 5.5 to all the performance contentions fixed in MySQL 5.6, which would already make Percona Server way slower even without the binlog, so it's out of scope here..

Well, to summarize :

- Binary Log Group Commit in MySQL 5.6 is looking very promising!

- while I still don't like the QPS drops observed with sync_binlog=1 ;-)

- NOTE: these QPS drops are not present when more powerful storage is used, so more testing + analysis should be done to fix it in general..

- and, my own pleasure - Adaptive Flushing in MySQL 5.6 seems to work better now than in Percona Server itself, which was the most famous for it until now.. - so I can only be happy with the result of our work in MySQL 5.6 ;-)

Anyway, work is continuing.. ;-)

Tuesday, 05 June, 2012

MySQL Performance: PFS Overhead in 5.6

Performance Schema (PFS) in MySQL 5.6 comes with yet more awesome new features. But, as was already discussed in the past, enabling PFS instrumentation may create additional overhead within your MySQL server and, as a result, decrease overall performance.. The good news is that in MySQL 5.6 things are getting better and better ;-))

Well, you should understand as well that there is no miracle.. - some of the most "hot" events within MySQL happen several millions(!) or even tens/hundreds of millions of times per second(!) -- so, it's sure that once such an event is traced, the overall overhead of the MySQL code can only increase.. What is important is that every instrumentation can be enabled or disabled dynamically, so you can go in depth progressively when tracing your events or bottlenecks, and stop instrumentation at any moment when it becomes critical..
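A back-of-the-envelope Python sketch of why the event rate matters: the extra CPU time is roughly the event rate times the per-event instrumentation cost, spread over the available cores. The function name and all inputs below are hypothetical (not measured PFS costs), and the model deliberately ignores contention.

```python
def instrumentation_cpu_share(events_per_sec: float, cost_ns: float,
                              cores: int) -> float:
    """Fraction of total CPU capacity spent in instrumentation code.

    events_per_sec * cost_ns is the extra work in nanoseconds per second;
    dividing by 1e9 converts it to CPU-seconds per second, and dividing by
    the number of cores spreads it over the whole machine.
    """
    return events_per_sec * cost_ns / 1e9 / cores


# Hypothetical inputs: 20 million events/sec, 25 ns per event, 32 cores.
share = instrumentation_cpu_share(20e6, 25, 32)
print(f"{share:.1%}")  # 1.6%
```

The same per-event cost becomes far more visible when the instrumented code runs on a serialized, contended path, since it can no longer be absorbed in parallel by all cores.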

However, as of the current MySQL 5.6, Performance Schema is enabled by default and gives you, out of the box, some valuable data about your database load: query statements and table accesses. Some other stuff (like mutex or RW-lock instrumentation) must be explicitly enabled on MySQL start, otherwise it simply remains ignored, which is guaranteed to keep your server safe from potential unexpected overhead..

The following article presents the test results I've obtained on CPU-bound workloads while analyzing the performance impact of enabling PFS by default in MySQL 5.6 and the impact of the default PFS instrumentation (as well as some other instrumentations ;-))

Test scenarios:

- Workload: Sysbench OLTP_RO, RO S-Ranges, dbSTRESS RO

- Concurrent users: 16, 32, .. 512

- Special tuning settings: innodb_spin_wait_delay = 12 / 96

- Server: X4470 32 cores bi-thread 2300MHz, 128GB RAM, OEL 6.2

Test cases:

- PFS off

- PFS on, but no instrumentation enabled (on+none)

- PFS on, default instrumentation enabled only (on+def)

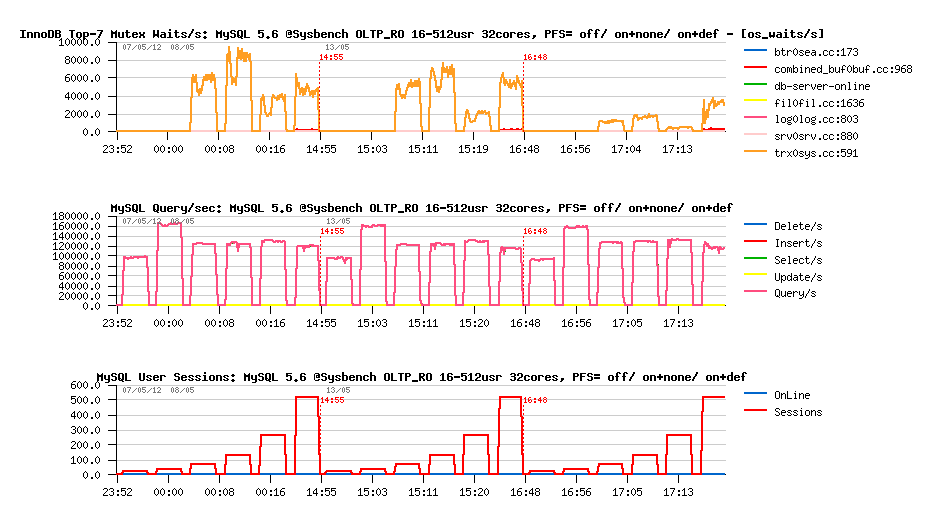

Sysbench OLTP_RO, innodb_spin_wait_delay=12 :

Observations :

- the overhead is near zero when PFS is enabled but all instrumentations remain disabled..

- the overhead is 5% in the worst case (16 users) when the default PFS instrumentation is used, and near zero in most other cases
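The overhead percentages in these observations compare each PFS test case against the "PFS off" run at the same concurrency. A minimal Python sketch of that calculation, assuming overhead means relative QPS loss (the post does not spell out its exact formula); the function names and QPS values are hypothetical, not results from these tests.

```python
def overhead_pct(baseline_qps: float, instrumented_qps: float) -> float:
    """Throughput lost vs. the baseline run, in percent (positive = slower)."""
    if baseline_qps <= 0:
        raise ValueError("baseline QPS must be positive")
    return (baseline_qps - instrumented_qps) * 100.0 / baseline_qps


def overhead_table(qps_by_case: dict, baseline: str = "off") -> dict:
    """Overhead of every test case relative to the baseline case."""
    base = qps_by_case[baseline]
    return {case: overhead_pct(base, qps)
            for case, qps in qps_by_case.items() if case != baseline}


# Hypothetical QPS numbers for illustration only (not results from this post).
print(overhead_table({"off": 200_000, "on+none": 199_000, "on+def": 190_000}))
# {'on+none': 0.5, 'on+def': 5.0}
```

A run-to-run noise level of a few percent is common in such benchmarks, so small values (like the near-zero "on+none" case) are best read as "within noise" rather than as exact costs.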

Sysbench RO S-Ranges, innodb_spin_wait_delay=12 :

Observations :

- near zero overhead..

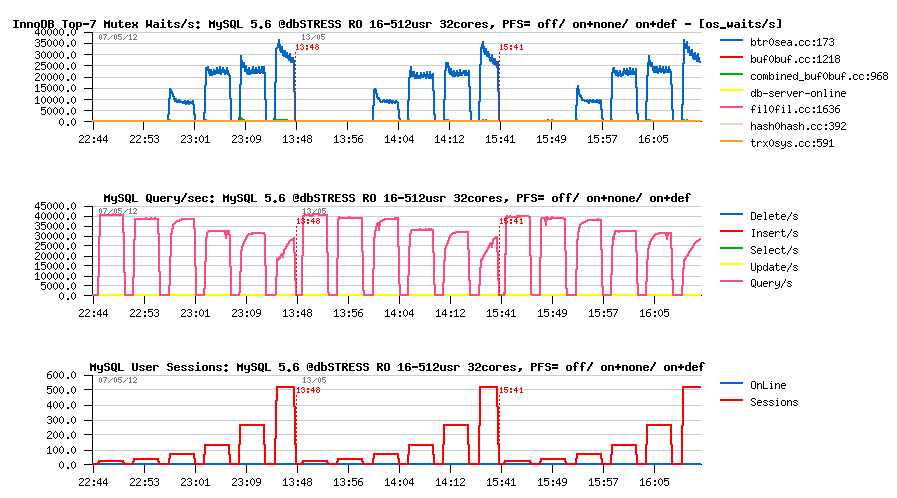

dbSTRESS RO, innodb_spin_wait_delay=12 :

Observations :

- zero overhead..

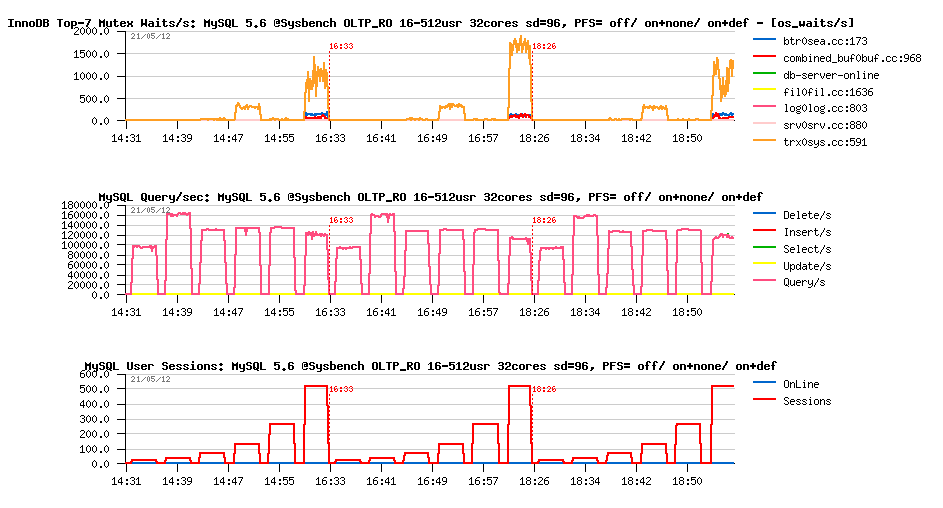

Sysbench OLTP_RO, innodb_spin_wait_delay=96 :

Observations :

- using innodb_spin_wait_delay=96 lowers mutex contention, thus improving overall performance a little bit

- however, it also increases the PFS overhead a little, but this increase looks random rather than consistent, and still doesn't exceed 3-5%..

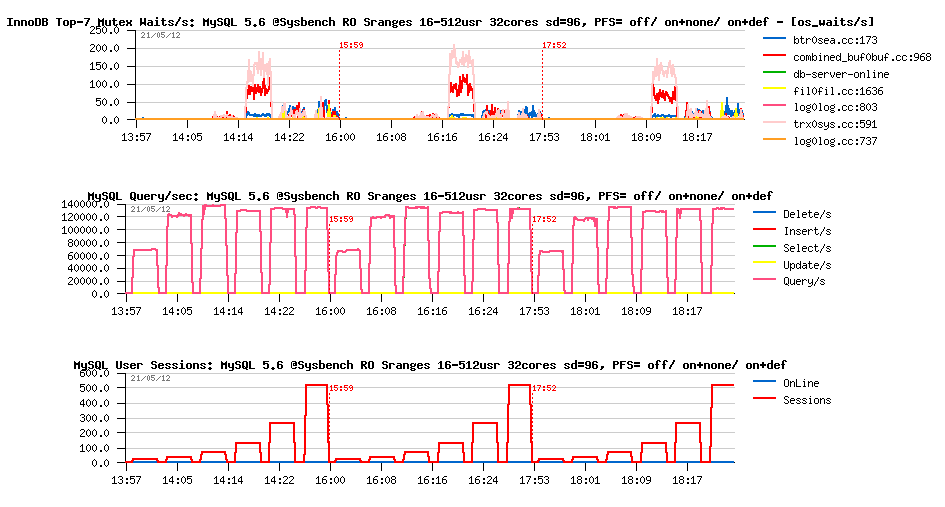

Sysbench RO S-Ranges, innodb_spin_wait_delay=96 :

Observations :

- overall performance is higher

- PFS represents near zero or less than 5% overhead..

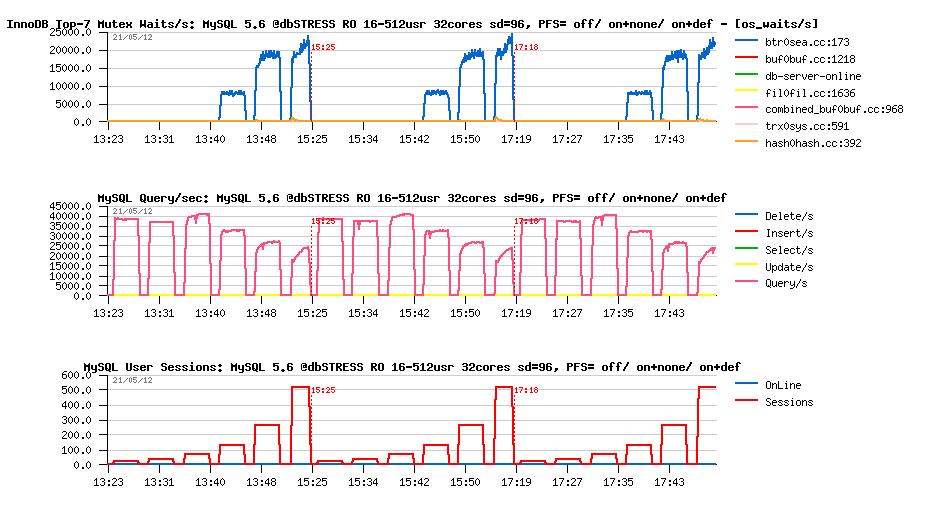

dbSTRESS RO, innodb_spin_wait_delay=96 :

Observations :

- not really much better performance here when innodb_spin_wait_delay=96 is used (RW-lock contention has a different profile)..

- however, no overhead due to PFS instrumentation at all

Overhead of other groups of instrumentations

Test scenario: we start the MySQL server with PFS enabled by default and then dynamically enable or disable various instrumentations.

NOTE: synch waits instrumentation (mutexes and RW-locks) cannot be enabled dynamically in this case, so the "all_waits" test does not include the overhead produced by mutex and RW-lock instrumentation..

Test cases:

- off -- PFS is turned OFF

- def -- default instrumentations

- stages -- only stages instrumentation is enabled

- stmt -- only statements instrumentation is enabled

- all_waits -- only the waits% instrumentations are enabled

- all -- all instrumentations are enabled
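The dynamic switching between these test cases goes through the performance_schema setup tables. Below is a hypothetical Python helper (the `CASES` mapping and `pfs_case_sql` are illustrations, not the scripts used for these tests) that builds the `UPDATE` statements for one case, using the MySQL 5.6 `setup_instruments` (NAME, ENABLED, TIMED) and `setup_consumers` (NAME, ENABLED) tables. It only ever enables consumers, assuming the default-enabled `global_instrumentation` and `thread_instrumentation` consumers stay on; and, as noted above, switching `wait/%` on at runtime does not retroactively instrument mutexes and RW-locks that already exist.

```python
# Hypothetical mapping from this post's test-case labels to
# (instrument-name patterns, consumer-name patterns) in performance_schema.
CASES = {
    "stages": (["stage/%"], ["events_stages_current"]),
    "stmt": (["statement/%"], ["events_statements_current"]),
    "all_waits": (["wait/%"], ["events_waits_current"]),
    "all": (["%"], ["%"]),
}


def pfs_case_sql(case: str) -> list:
    """Return SQL statements that switch a running server to one test case."""
    patterns, consumers = CASES[case]
    stmts = [
        # Start from a clean slate: disable and untime every instrument.
        "UPDATE performance_schema.setup_instruments "
        "SET ENABLED = 'NO', TIMED = 'NO'",
    ]
    for pattern in patterns:
        # Enable and time only the instruments that belong to this case.
        stmts.append(
            "UPDATE performance_schema.setup_instruments "
            f"SET ENABLED = 'YES', TIMED = 'YES' WHERE NAME LIKE '{pattern}'"
        )
    for consumer in consumers:
        # Consumers are only switched on, never off (see the lead-in).
        stmts.append(
            "UPDATE performance_schema.setup_consumers "
            f"SET ENABLED = 'YES' WHERE NAME LIKE '{consumer}'"
        )
    return stmts


# Usage: print a script that could be fed to the mysql command-line client.
for stmt in pfs_case_sql("all_waits"):
    print(stmt + ";")
```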

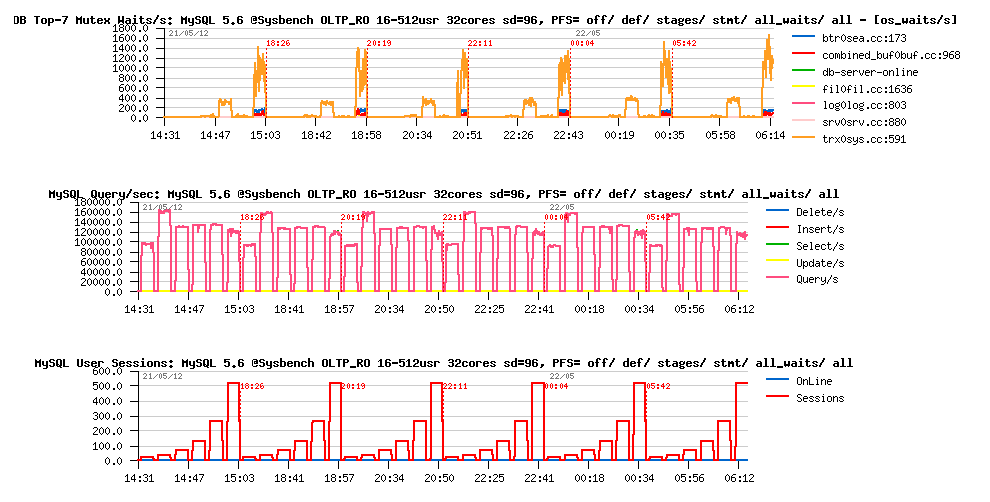

Sysbench OLTP_RO, innodb_spin_wait_delay=96 :

Observations :

- in most cases the overhead remains very low, or at least lower than 5%

- the heaviest case (when all(!) instrumentations are enabled) still doesn't create an overhead bigger than 10%..

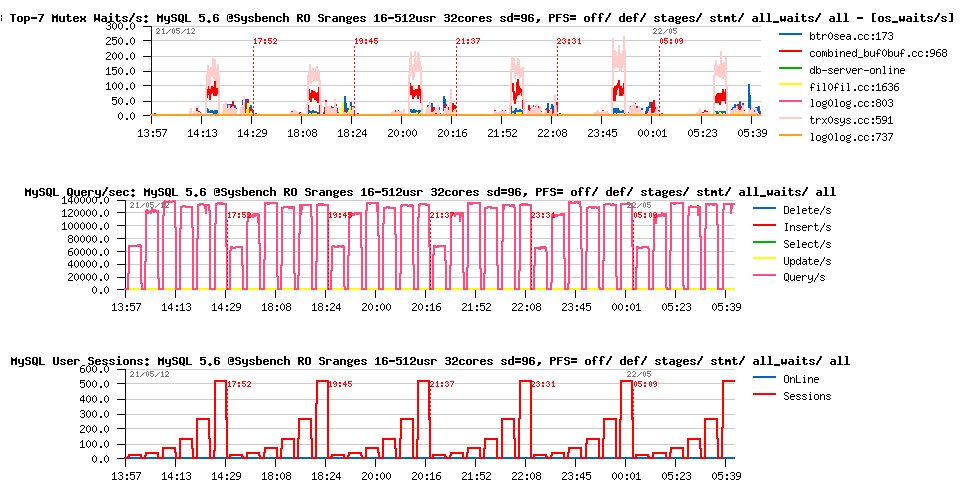

Sysbench RO S-Ranges, innodb_spin_wait_delay=96:

Observations :

- near zero overhead in most cases..

dbSTRESS RO, innodb_spin_wait_delay=96 :

Observations :

- same near zero overhead..

Overhead of SYNCH WAITS instrumentation

PFS overhead directly depends on the internal contentions within MySQL -- the hotter such contentions become, the bigger the performance impact you may expect from instrumentation (as the instrumentation code lengthens the code path to execute).. That's why enabling SYNCH waits instrumentation can potentially create a bigger regression than any other.
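A simplified Python model of why contention amplifies the cost: if a hot mutex is saturated, throughput through it is capped at one holder per critical-section duration, so any extra work done while the lock is held lowers that ceiling directly, by extra / (hold + extra). It assumes part of the timer and bookkeeping work runs while the lock is held; the function names and nanosecond values are hypothetical, not measured InnoDB or PFS figures.

```python
def hot_mutex_ceiling(hold_ns: float, extra_ns: float = 0.0) -> float:
    """Max acquisitions/sec through one mutex when holders run strictly one at a time."""
    return 1e9 / (hold_ns + extra_ns)


def contended_loss(hold_ns: float, extra_ns: float) -> float:
    """Relative throughput loss when extra_ns is added inside the critical section."""
    base = hot_mutex_ceiling(hold_ns)
    instrumented = hot_mutex_ceiling(hold_ns, extra_ns)
    return (base - instrumented) / base


# Hypothetical costs: 200 ns critical section, 20 ns of added timer/bookkeeping.
print(f"{contended_loss(200, 20):.1%}")  # 9.1%
```

On an uncontended path the same 20 ns would be absorbed in parallel across all cores; behind a saturated mutex, it is paid serially by every acquisition.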

my.cnf settings:

- performance_schema = on

- performance_schema_instrument = '%=on'

Test cases:

- def -- default instrumentations

- all_waits -- only the waits% instrumentations are enabled

- synch -- only synch waits events instrumentation is enabled

- non-synch -- all waits% instrumentations except synch are enabled

- all -- all instrumentations are enabled

- off -- PFS is turned OFF

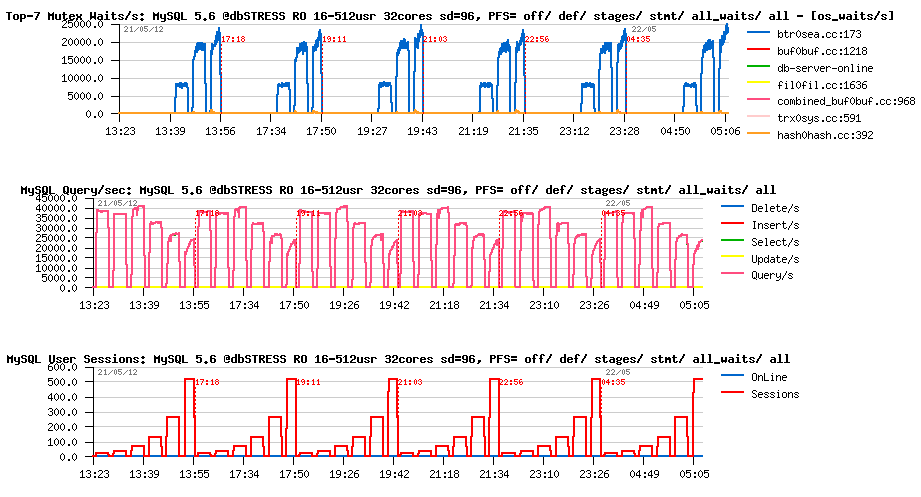

Sysbench OLTP_RO, innodb_spin_wait_delay=96:

Observations :

- NOTE : this test represents the worst case for PFS overhead ;-)

- so, even if synch waits instrumentation is not collected, the additional condition-check code involved still brings a little more overhead anyway..

- and even the default instrumentation becomes heavier in this case..

- however, the overhead is not much higher once all waits instrumentations are switched to ENABLED and TIMED!.. - so at least we're offering a solution to monitor internal wait events without any excessive regression! - which is really very good ;-)

SUMMARY

It seems to me that the current default PFS instrumentation is quite safe for end users and in the worst case brings 5% overhead (and, of course, it depends on the workload). Having SYNCH WAITS disabled by default looks like a wise decision to avoid any unexpected surprises. End users should simply be aware that SYNCH WAITS instrumentation must be enabled explicitly on MySQL start, and know exactly what they're doing ;-)