« December 2010 | Main | October 2010 »

Tuesday, 30 November, 2010

MySQL Performance Talks during UKOUG Conference in Birmingham (UK)

From a very snowy Paris (well, everything is relative) I'm finally in less snowy Birmingham (comparing to yesterday's Paris :-)) and even my flight arrived on time! (that's a mystery of Air France for me - how a flight leaving Paris one hour later that it should may still arrive on time to its destination).. But well, it's in the past now :-) And for the now - I'm happy to be here and learn from others! - there are so many interesting things presented by passionated people that it's hard to choose which talk to attend.. And it's pity we cannot be in several places on the same time :-) and the Day#1 was great, and hope other days will be to! (and more productive for myself as I'll be less asleep :-))

I'm also presenting during this conference, and hope to share about MySQL Performance during my talks as much as I can :-) Well, it's hard to enter all the materials into 45 min.. - so I have to focus on the most essential things and it's not easy to decide ;-)) but if you want to see one of the topics to be absolutely covered - just comment here or drop me email and I'll do my best :-) Also, the conference is very well organized, and even if you have any new questions, etc. - there are "Meet a speaker" events taking place, so you may still get all the answers you're looking for.. And if I speak about organizers - one thing I've really appreciated here and it's what I've asked all the time during Sun and MySQL conferences - doing a pool on planned presentations and to see what is the level of interest on each session, and then according to this poll schedule a room with a corresponding size to avoid that the most interesting sessions will be missed by people due lack of space in the room like it was for ex. for Domas sessions during the last MySQL UC.. So, great job UKOUG team! :-) (well, there are other things will be fine to improve, but I can live with it, not a real problem.. :-))

BTW, the abstract of my talk was reduced to one line in agenda probably by my mistake:

"Being more smart in 45min is a final goal of this session :-)"

Well, here is a full version:

"This session will cover MySQL/InnoDB configuration tuning. Which setting for which workload? Which value will be better for my system? How to avoid potential bottlenecks from the beginning? Do I need a purge thread? I was told InnoDB doesn't need thread concurrency anymore? Is my IO capacity setting good enough?.. - these an many other questions are often asked by MySQL DBA and developers.. Things are changing quickly and constantly, and there is no "silver bullet" :-) But understanding of the configuration settings impact is already a huge step in performance improvement :-) Bring your ideas too, share them with others - discussion is open, it's only driven by a speaker :-) Latest benchmark results will be presented as well..

Being more smart in 45min is a final goal of this session :-)"

Hope you'll have fun and will bring something to share too! :-))

Link with a time and place to my talk is here . As well I'm also presenting dim_STAT during this conference - if you want to discover a powerful (and free) monitoring tool from the source - you're welcome! :-)

Rgds,

-Dimitri

Tuesday, 16 November, 2010

MySQL Performance: 5.5 and InnoDB thread concurrency

Until now, when you observed a performance degradation with a growing number of concurrent user sessions in InnoDB the most simple way to avoid such a degradation was to set innodb_thread_concurrency to something different from zero (zero is a default now in 5.5) - and since 5.5.4 the most optimal value was around of 32 (before it was 16 )... But all latest performance improvements in 5.5 are so good that it become less and less true now, and during my last tests I've even observed a worse performance when a different from zero "innodb_thread_concurrency" setting was used.. - seems to me now the overhead of the thread concurrency management code in some cases become more important than an overhead of still existing lock contentions ;-)

Let's see more in details now..

First of all - why "innodb_thread_concurrency" is even existing as parameter?.. - MySQL is written as a multi-threaded application and each connected user session is a thread within MySQL (and OS as well), which is quite more optimal comparing to other vendors where a session is an independent process (like in PostgreSQL or Oracle) just because a thread is way more lightweight for OS comparing to a process, and context switching between threads is usually going much faster and cost much less comparing to processes based model.. - But! having a more optimal model doesn't yet mean to have a scalable database engine! - and as I say usually, everything depends on implementation ;-) And in multi-threaded application all threads are running within the same process and have direct access to everything inside of this process, they may change any data at any time, so you have to apply and follow some synchronization rules within your code to protect your data by shared or exclusive locks to be sure there will be not possible to have more than one changes of any critical data on the same time - and it has a cost! :-) But again, all depends on how these locks are implemented and used within a code.. And not so far, just 2 years ago(!) MySQL/InnoDB did not scale more then up to 2-4 CPU cores! :-) - Today the limit is already pushed up to 32 cores, and work is in progress ;-)

But what about InnoDB thread concurrency?..

InnoDB is also implemented as a multi-threaded application and also has a lot of locks ;-) And once locking become too hot, the system start to spend more CPU time to manage InnoDB thread locks rather to do any useful work.. And these locks are not "user application locks" (for ex. when many users are trying to modify the same raw) - no, these locks are coming from InnoDB implementation design and independent to your "user application" - and once you're blocked by InnoDB internal locks, you're done.. ;-) (except if you feel yourself comfortable to modify the MySQL/InnoDB code :-))

Ok, but even if this lock contention is inevitable, may it be at least reduced or limited?..

Yes, it's possible - all you need is just to find after which amount of threads running concurrently you're observing a performance degradation, and then simply limit the number of possible active threads to this amount! And there are at least two possible solutions:

-

Use "innodb_thread_concurrency=N" which will limit the number of

active threads within InnoDB exactly to N

- Place MySQL process into a processor set (Solaris) or task set (Linux) and limit it to N CPU cores

Both solutions are not free from limitations, but in the past the first one was the most optimal.. Then things are changed with a time, MySQL/InnoDB became more optimal and if you use Linux - it's also improving all the time :-)

So if in the past, on most of my tests setting "innodb_thread_concurrency=16" improved performance stability since 32 concurrent user sessions and looked like The MUST... Now, I have a 32 cores (bi-thread) Intel box running Linux, and let's see if things are looking differently TODAY ;-)

I want to compare the same workload within the following configurations :

- #1) 32 cores, no task set, innodb_thread_concurrency=0

- #2) MySQL is running within an 8 cores task set, innodb_thread_concurrency=0

- #3) MySQL is running within an 16 cores task set, innodb_thread_concurrency=0

- #4) 32 cores, no task set, innodb_thread_concurrency=8

- #5) 32 cores, no task set, innodb_thread_concurrency=16

- #6) 32 cores, no task set, innodb_thread_concurrency=32

NOTE : task set on Linux is not giving any exclusivity on the task-set CPU cores like processor-set on Solaris, so my load generator may use these cores too and it's not a problem here, because my goal is to reduce the MySQL/InnoDB contention by reducing the number of active tasks executed in parallel.. - Also, usually when we're presenting results on 2/ 4/ 8/ 16/ cores we're also limiting the injector to something equal.. - I'm not limiting injector here, I want to have it running on its full power, and just limit the contention within MySQL, so a test result on 8 cores task set doesn't mean a MySQL result on 8 cores! - just that MySQL contention is limited to 8 cores and other cores may still do other useful stuff (networking, I/O, etc. ;-))

Also - my CPU cores are having 2 hardware threads, so it's hard to say that one core is equal to one simple core, in case of MySQL bi-thread core may work as well as two cores!.. - and helping to reduce the lock contention as only one hardware thread from two may be executed by its core on the same time..

Testing Scenario

I'll start with a Read-Only workload first (and as the Read seems to drive a response time, Read+Write is hardly depending on the Read performance).. As the lock contention may greatly be dependent on the SQL query nature, I'll test at least 3 queries having a different contention pattern:

Queries :

- Query-1: main contention on "btr_search_latch" mutex

- Query-2: main contention on the "buffer pool mutex" and "kernel_mutex"

- Query-3: main contention on the "kernel_mutex"

Workload :

- Users: growing from 1 to 256 (step by step: 1, 2, 4, 8, .. 256)

- Think time between transactions: 0 ms

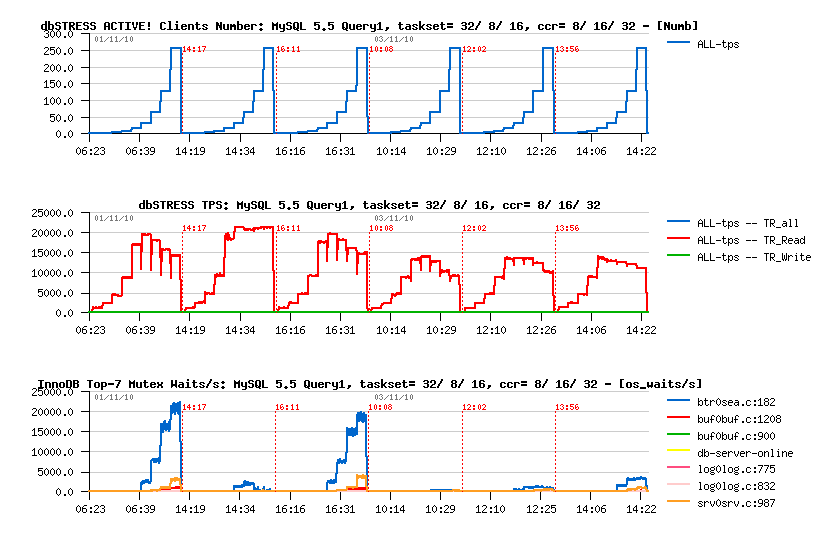

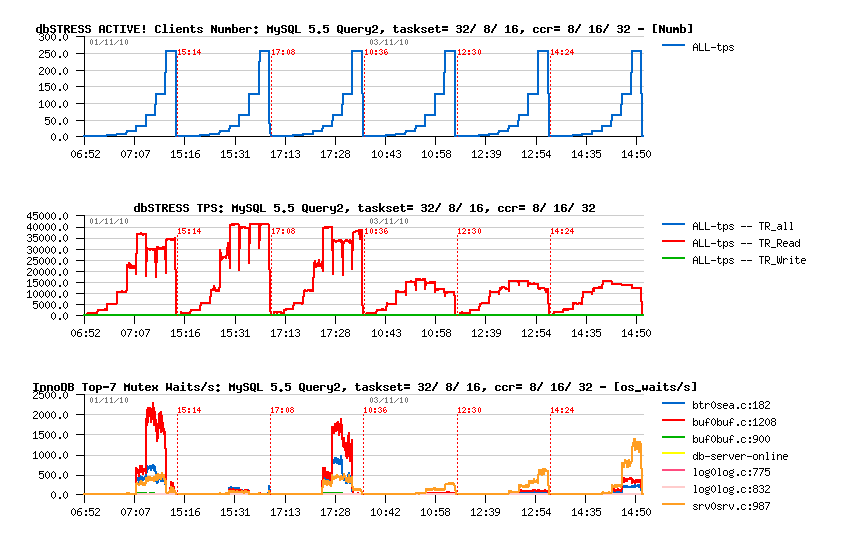

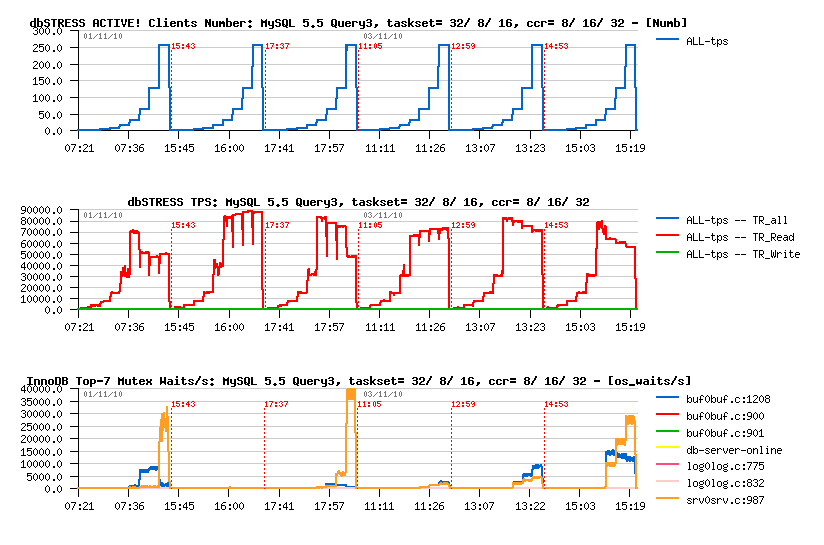

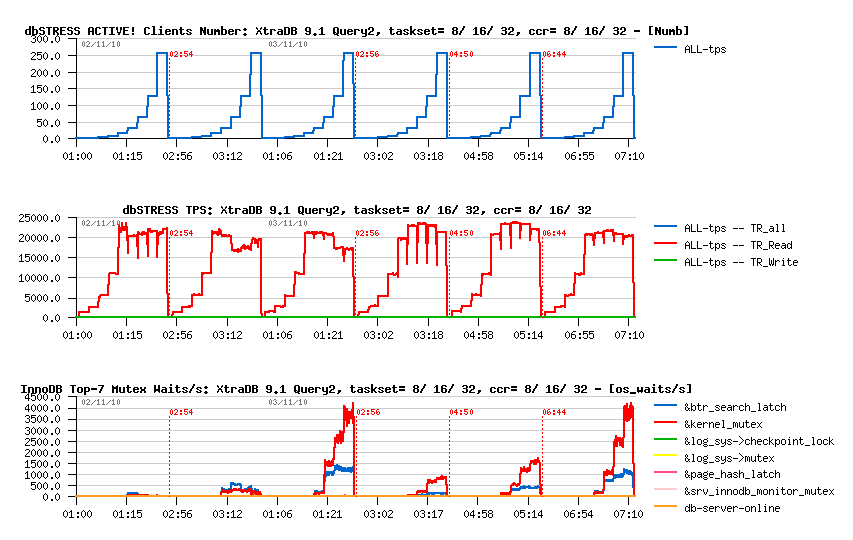

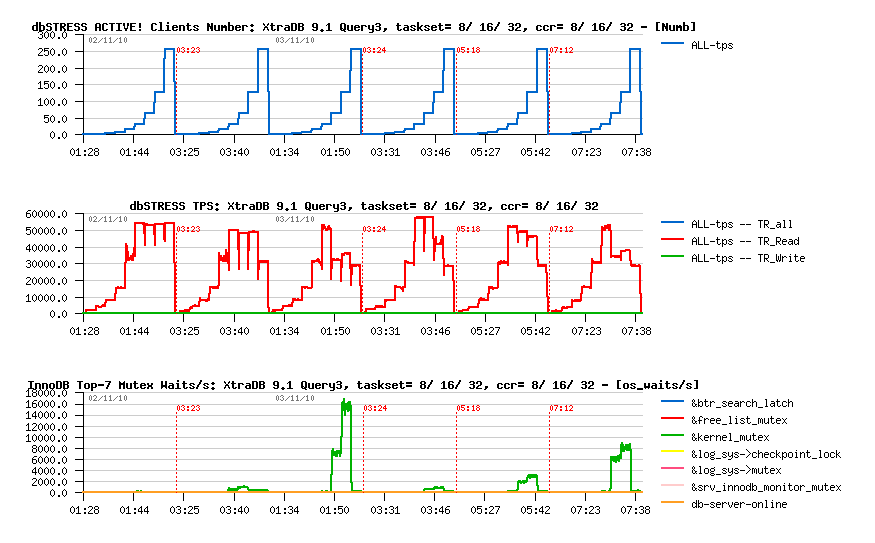

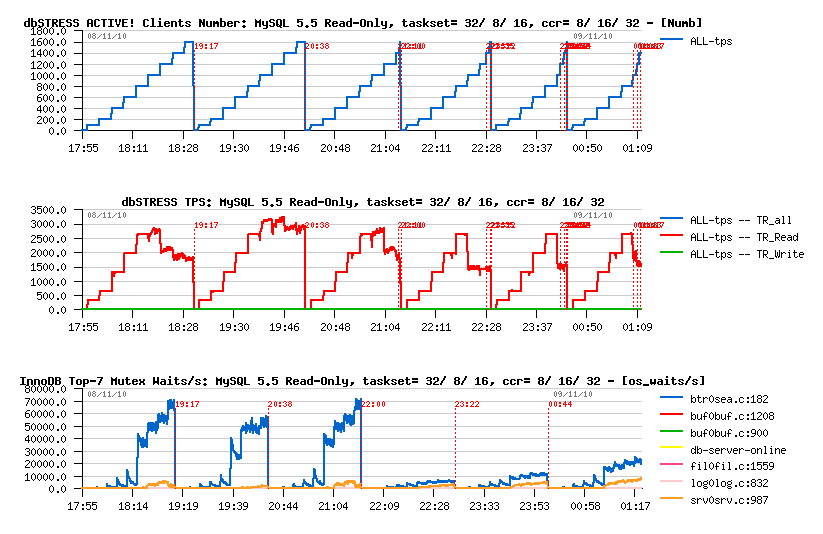

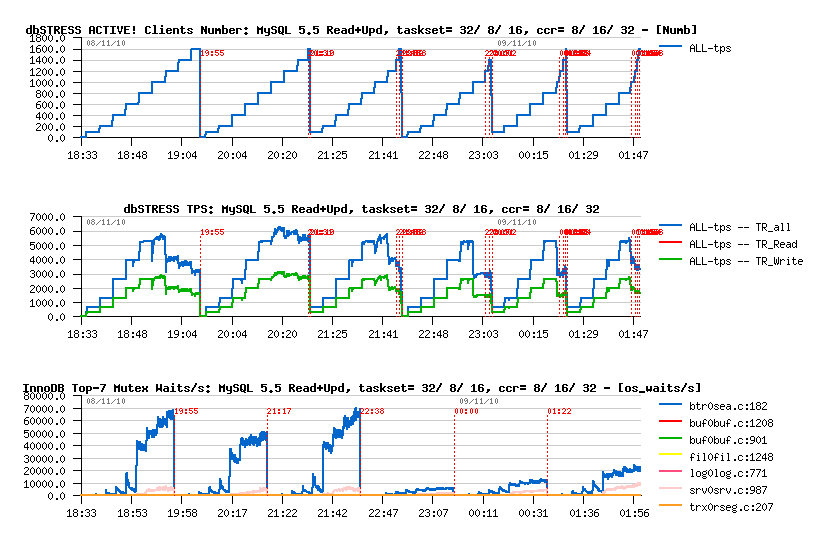

To save place here, I'm putting the results from all 6 tested configurations on the same graph. Each configuration was tested one after each other, so you may observe 6 workloads over a time scale per graph. The first graph representing the number of active sessions (users). The second one - reached TPS level. And the third one - Top-10 Mutex Waits reported by InnoDB.

NOTE : the code managing the InnoDB thread concurrency is also using a mutex, but the waits on this mutex are not reported by InnoDB (BUG ??), so I may only suppose that this mutex become the hottest when the "innodb_thread_concurrency" set to a different from zero value (and as you understand, it cannot be seen either by Performance Schema if InnoDB is not reporting it..)

Let's get a look on the results now..

Query-1 @MySQL 5.5.6

Workload scalability on this SQL query is mainly limited by contention on the "btr_search_latch" mutex.

Observations :

- Configuration with a task set on 8 cores seems to be the most optimal ;-) (BTW, I've also tested 2 and 4 cores task sets, the results is way worse, so I don't present these results here and you may just trust me here :-))

- Using non-zero innodb_thread_concurrency giving a lower results even on 256 concurrent users comparing to default innodb_thread_concurrency=0 setting..

- Interesting that in last 3 configurations the mutex waits are reduced dramatically! - however the waits on the concurrency mutex are not reported by InnoDB and I suppose these waits are the main stopper here, so we don't see an improvement similar to seen on 8 cores task set..

Query-2 @MySQL 5.5.6

This query is limited by the "buffer pool mutex" and "the kernel_mutex" contentions.

Observations :

- Similar to Query-1, the highest and the most stable performance level is obtained on the 8 cores task set configuration..

- Again, limiting lock contention via "innodb_thread_concurrency" makes things worse..

Query-3 @MySQL 5.5.6

This query is manly limited by contention on the "kernel_mutex".

Observations :

- Performance on the 8 cores task set is the best once again..

- However, on 32 cores having innodb_thread_concurrency=16 looks better than zero! - seems contention on the "kernel_mutex" has a different impact/pattern for this query..

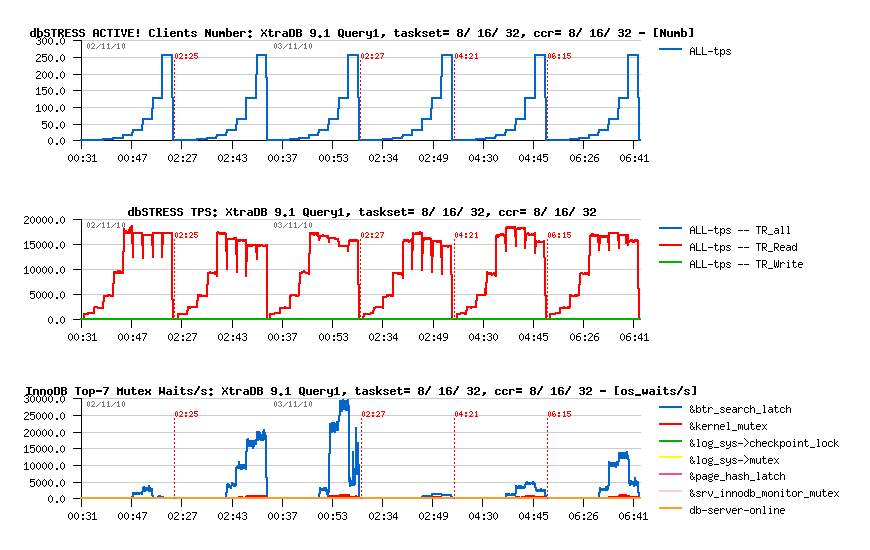

Testing same 3 configurations on XtraDB 9.1

I was curious to replay the same workloads also on XtraDB 9.1 - but NOT in goal to compare it with MySQL 5.5 (otherwise I have to use the latest XtraDB version, and it's not the case) - my interest here is to see the impact of the "innodb_thread_concurrency" setting but when the "timer-based" concurrency model is enabled! - this model was introduced within MySQL 5.4, ported by Percona team into XtraDB, but was finally not included within MySQL 5.5. However on its time this model was near free of locks, and as XtraDB 9.1 was comparable in performance with MySQL 5.5.4, I was curious to know if we did not miss anything within the latest releases of MySQL 5.5..

NOTE : during the following tests on XtraDB the task-set

configurations were tested in a different order - 8, 16, 32 cores vs

previously 32, 8, 16 cores.. - so just keep in mind when looking on the

graphs ;-) (sorry for that, but it was too late to fix it)..

Query-1 @XtraDB 9.1

Observations :

- As you can see, the task set on 8 cores is still giving a better results

- However, using a Timer-Based concurrency model is not too bad, and even showing the max TPS level!

Query-2 @XtraDB 9.1

Observations :

- Wow! - Timer-Based model is a true winer here!

- Interesting that Query-2 is running x2 times faster vs Query-1 on MySQL 5.5, but not on XtraDB 9.1 don't know why.. - any ideas?..

- As well curiously the highest mutex waits here are on the "kernel_mutex", while on MySQL 5.5 it's the "buffer pool mutex".. - seems to me it'll be always great to have 2 engines to replay the same workload and better understand performance issues you have on your application.. ;-)

Query-3 @XtraDB 9.1

Observations :

- Curiously, on this query NOT only 8 cores task-set is keeping workload stable and without degradation..

- As well, the max TPS level is reached with a Timer-based model here..

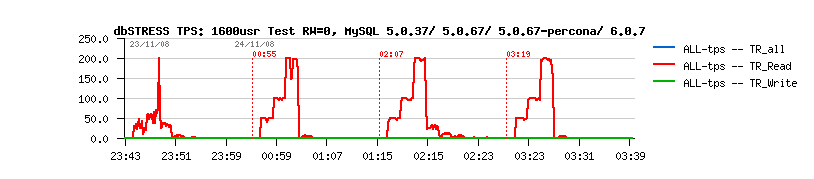

Test with 1600 concurrent users

So far, until now from the previous tests globally the most better results are obtained within an 8 cores task-set configuration. But what will happen if I have more than 256 concurrent user sessions?.. - in the past this test was impossible to run successfully having "innodb_thread_concurrency=0" whatever a number of CPU you have.. Well, of course, a system having 1600 concurrent users is usually has not the same workload profile as a non-stop 1-256 users tested before.. The main difference here will be with a presence of a "think time", which means that each user will do a pause between transactions (simulation of user actions, ex. reading a web page, etc.).

Before I've executed 1600 users test with a think time=1sec, which means that if everything is going well, each user should be able to process one transaction per second, and with a growing number of concurrent users we have to reach finally 1600 TPS. However, without limiting InnoDB thread concurrency it was impossible to reach this level before, and the following results were true just 2 years ago tested on different MySQL versions:

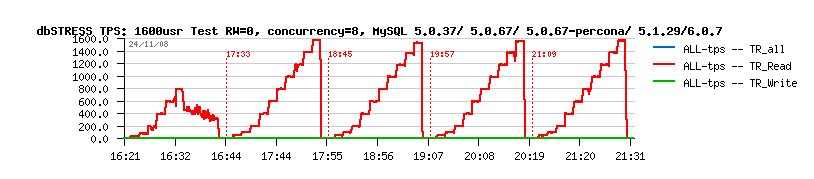

Testing 1600 users with innodb_thread_concurrency=0 :

Testing 1600 users with innodb_thread_concurrency=8 :

All details about these 2 years old results you may find in this report . As you can see, setting "inodb_thread_concurrency=8" solved the situation and guaranteed a stability within an execution order! - so each user was able to run on the one transaction/sec speed.

So, where we're now, 2 years later?.. ;-)

Few surprises..

Curiously reaching 1600 users sessions on the Linux box was not without surprises..

-

First of all I've got a Connection Error messages (the issue details

an its fix I've described previous

post ), but more surprising was to see MySQL 5.5 simply crashing

in this situation while 5.1 and XtraDB printed an error message.. -

But once I've told about the problem to the MySQL team, the bug

was already fixed the next day by Davi ! - and it was my next

surprise as there was not yet even bug open nor test case, etc.. -

it's simply great to see such a reactivity! ;-)

-

Another problem seems to be related to the Linux kernel and I was

unable to fix it on time: since a high number of running processes the

network communications started to have timeouts, and some statistics

data were missed time to time (you may recognize this periods by

vertical red point lines on the following graphs)..

- And the last surprise was that once I've start to test 1600 users according to the same conditions as 2 years ago (think time=1sec) - there is no difference at all whatever from 6 configurations I'm testing!.. - so 1sec for MySQL 5.5 is too much now as a think time, and I've reduced think time to 300ms to start to see any significant difference between the tested configurations.. ;-))

So, what about results?..

Read-Only 1600 users @MySQL 5.5.6

Observations :

- Hmm.. - once again the 8 cores task-set showing the best performance here..

- All 1600 users were active during every observed period

- However since 1000 users (in best case) not every user was able to execute its 3 transactions/sec, but TPS remains stable around 3000..

Read+Update 1600 users @MySQL 5.5.6

Observations :

- On the last test I wanted to see the impact of the presence of the Write transactions mixed to Reads.. - only Update statements are used within a Write transaction here and the ratio between Read/writes is 50/50 here..

- Surprisingly, random Updates are not slowing down MySQL performance on this test - it's simply executing 2 times more transactions!.. - contention on Reads seems to be the main blocking factor here

- Also interesting that the level of the mutex waits did not change with the presence of Updates - still the same contention on the "btr_search_latch" and "kernel_mutex"..

- Once again, the 8 cores task-set shows the most better result..

Instead of SUMMARY.. ;-)

-

Seems to me since MySQL 5.5.6 the "innofb_thread_concurrency" setting

should be used now very carefully! - as I was able to see several

cases when it makes things worse, then you'll be able too ;-) -

seriously, just keep in mind that it may reduce your

performance, so always check what's going on.. - what is fine at least

that this variable is dynamic and can be changed back at any time, so

it's easy to check if it helps you or not even on the live workload..

-

Using CPU Affinity - looks very attractive! and I

think there is definitely something to do! - this solution is

absolutely free of application locks, so may only work :-) then of

course it depends on how often do you spin on locks, etc. - but I

think just having a solution to assign Master and Purge threads to

dedicated CPU cores, and all "user" threads to other cores may help a

lot in heavy workloads! As well it can be dynamic, etc..

-

Timer-based concurrency model - is still looking interesting and gives

better results comparing to the original model. But as I wrote before,

it cannot guarantee CPU cycles for every thread just because threads

are waked up by timeout in a random order.. As well, it also as

original model doesn't have a solution for a case when an active

thread is involving an I/O operation and blocking on it for a while..

- during this time CPU cycles may be used by another thread from the

wait queue, but it's not so..

- Thread Pool - honestly, this option combined with CPU Affinity looks for me as the most attractive solution.. ;-)) But well, when I'm speaking about a Thread Pool I don't mean the "pool-of-thread" code proposed before within an MySQL 6.0 branch (once Mark very well commented about at the end of his article and I may only agree with him..) - but I mean here a Thread Pool natively integrated into MySQL and aware about everything what all the threads are doing: aware about transaction locks, sleeps, I/O requests, etc. :-) - about all these "internal events" CPU Affinity cannot be aware! - and according to these events, activity and configuration settings Thread Pool should limit optimally the number of the really active threads reduce missed CPU cycles due wait events if there are other threads in the wait queue.. - as well having less active threads requiring a CPU time will simplify a lot the things for OS scheduler too.. - And of course it should be a near free of locks!! :-)) - do I want to much?.. - don't think so ;-) but if you have any other ideas - please, share!! :-))

Of course, having free of locks and highly scalable MySQL+InnoDB code will resolve most of described problems! - but I think it's not yet for tomorrow.. ;-) and anyway, even if you're using a scalable database engine like Oracle you may still seek for a solution of connections pool limiting the amount of active tasks on your server.. - so I'm voting for an advanced Thread Pool for MySQL! :-)

Any comments are welcome! ;-)

Monday, 08 November, 2010

MySQL Performance: Hitting error "Can't create a new thread (errno 11)" on a high number of connections

Sorry if you'll find this post stupid, but probably it'll safe a day for somebody else who may hit a wall like it was for me last Friday ;-))

So far, I've started my test scenario to analyze a workload with up to 1600 concurrent users on the 32 cores Linux server. Load is growing step by step by 200 users every 5 min, and once the load is reached 800-1000 users I've started to observe all my new clients ejected with the following error message:

"ERROR 1135 (HY000): Can't create a new thread (errno 11); if you are not out of available memory, you can consult the manual for a possible OS-dependent bug"

I may say, once you hit such an error on your MySQL server you're less than happy ;-)

What about the error?.. - this error is generic, and what is really important inside of the error message is its error code -- "errno 11" (well, of course you should check if you're really not short in memory, and in my case I've still got 64GB of RAM free, and I've also verified the swap space in case if MySQL cannot reserve a place in swap for some buffer allocations - who knows?..) - then to check what is exactly the meaning of "error 11" you have to use "perror" command:

# perror 11 OS error code 11: Resource temporarily unavailable

But the question is which resource if it's not a memory?..

Next thing which is coming in mind is the limit for number of file descriptors - and it's easy to check with "ulimit -n":

$ ulimit -n 1024 $ ulimit -Hn 1024 $ ulimit -Sn 1024

The value 1024 drives me mad - because if it's really true, I should not be able to open more than 200-300 connections (as MySQL is using 4-5 file descriptors per connection in general), but I've already reached 800 connections until now!.. - And another killing thing is that my "max_connections" is set to 2000 and it's not changed by MySQL on start (usually if you're requesting a too high value it'll be adjusted by MySQL automatically on the start).. So what is wrong here?..

Just in case, I've updated my "nofile" limit within my "/etc/security/limits.conf" file:

# cat /etc/security/limits.conf mysql soft nofile 10240 mysql hard nofile 40960

and recheck it's seen now by "ulimit" too:

$ ulimit -n 10240 $ ulimit -Hn 40960 $ ulimit -Sn 10240 $

Then re-test again - the problem is still here since 1000 concurrent sessions..

What's next?.. - once you've asking all around, Google is your friend.. :-) - and I was surprised how often people are complaining by getting the same error!.. - some are even logged a bug on the MySQL site, but seems there was no solution for them as the problem was not reproduced.. Another lucky blog post was about a file descriptor limit: Can't create a new thread - but I've already check it, and MySQL is reporting as well "open_files_limit: 18010"..

The problem looks like a limit on the new thread creation on the system.. - but my threads limit on the system is:

# cat /proc/sys/kernel/threads-max 2065067

Without having any answer, I've started to group all cases I've found via Google:

- every time the case less or more random -- you cannot say what is exactly the limit of the open sessions, sometime it's changing over a time..

- most of cases was hard or impossible to reproduce, so it's clear the problem is coming from the particular user's configuration, just that user was unable to find which one..

- in most cases users have not reached any limit in RAM, but in some cases there was a limit on open files..

- I don't have all these limits, and my limit is appearing on the thread creation..

Thread creation.. Thread - was the word which bring a light in my mind!! :-)) - working mainly with Solaris servers I've completely forget that threads on the Linux are designed as processes !!! :-)) - then checking my process number limit:

$ ulimit -u 1024

it explains 1000 sessions limitation ;-)

adding few lines more to my "/etc/security/limits.conf" file:

# cat /etc/security/limits.conf mysql soft nofile 10240 mysql hard nofile 40960 mysql soft nproc 10240 mysql hard nproc 40960

fixed my issue! :-))

Well, it's strange to see a system limiting a number of processes per user by 1024, but from the other hand it'll be fine to add into MySQL code additional check according the "nproc" limit on the system and reduce the "max_connections" settings automatically if the limit is too low -- it's still better to spend a time understanding why your max sessions runtime value is lower than expected rather to see that 2000 for max sessions is accepted on the start up by MySQL, but then since some level you have connections reject for the reason you need yet to understand..

Edited on: Monday, 08 November, 2010 22:48

Categories: MySQL