This article is inspired by

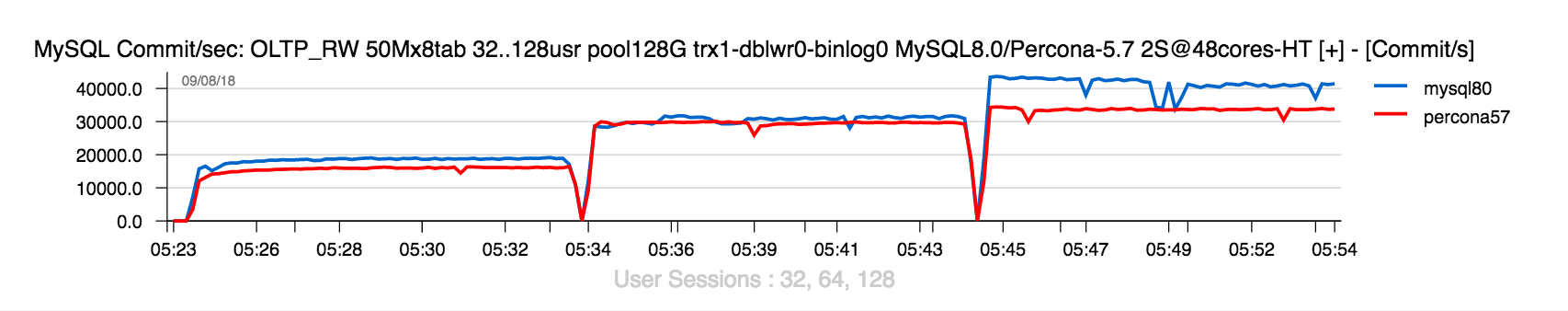

Percona blog post comparing MySQL 8.0 and Percona Server 5.7 on IO-bound

workload with Intel Optane storage. There are several claims made by Vadim based on a single test case, which is simply unfair. So, I'll try to clarify this all based on more test results and more

tech details..

But before we start, a short intro:

InnoDB Parallel Flushing -- was introduced with MySQL 5.7 (as single-threaded flushing could no longer keep up), and implemented as dedicated parallel threads (cleaners) which are invoked in the background once per second to do LRU-driven flushing first (in case there are no free pages left, or too few of them) and then REDO-driven flushing (to flush the oldest dirty pages and free up more space in REDO). The number of cleaners was intentionally made configurable, as there were many worries that these threads would use too much CPU ;-)) -- but at least configuring their number equal to the number of your Buffer Pool (BP) Instances gave nearly the same result as having a dedicated cleaner per BP instance.
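
To make the order of operations concrete, here is a toy Python model of one cleaner pass over a single BP instance. It is only a sketch of the logic described above, not InnoDB code: `BufferPoolInstance`, `cleaner_pass`, `lru_depth` and `min_lsn_to_keep` are hypothetical names invented for illustration, and the LRU list is simplified to hold dirty pages only.

```python
from dataclasses import dataclass, field


@dataclass
class BufferPoolInstance:
    """Toy model of one BP instance (hypothetical, not an InnoDB structure)."""
    free_pages: int
    # Dirty pages in LRU order: index 0 is the coldest page.
    # Each value is the LSN of the page's oldest modification.
    lru_dirty: list = field(default_factory=list)


def cleaner_pass(bp, lru_depth, min_lsn_to_keep):
    """One per-second pass: LRU-driven flushing first, then REDO-driven."""
    flushed_lru = 0

    # 1) LRU-driven: while free pages are below the target, flush and
    #    evict pages from the cold end of the LRU list.
    while bp.free_pages < lru_depth and bp.lru_dirty:
        bp.lru_dirty.pop(0)
        bp.free_pages += 1
        flushed_lru += 1

    # 2) REDO-driven: flush the remaining dirty pages whose oldest change
    #    is older than the LSN REDO wants to reclaim. These pages stay
    #    cached, they just become clean.
    keep = [lsn for lsn in bp.lru_dirty if lsn >= min_lsn_to_keep]
    flushed_redo = len(bp.lru_dirty) - len(keep)
    bp.lru_dirty = keep

    return flushed_lru, flushed_redo


bp = BufferPoolInstance(free_pages=2, lru_dirty=[50, 10, 70, 20, 90])
print(cleaner_pass(bp, lru_depth=4, min_lsn_to_keep=60))  # prints (2, 1)
```

In this toy run two pages are flushed to restore the free page target, and one more is flushed because its changes are too old for REDO. With one such cleaner per BP instance, each instance gets its own pass.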

Multi-threaded LRU Flusher -- was introduced in Percona Server 5.7, implementing dedicated LRU cleaners (one thread per BP instance) running independently in the background. The really valid point in this approach is to keep LRU cleaners independent of the so-called "detected activity" in InnoDB (which was historically always buggy), so whatever happens, every LRU cleaner remains active to deliver free pages according to the demand. In MySQL 5.7 the same was expected to be covered by involving the "free page event" (not what I'd prefer, but this is also historical to InnoDB). However, on any IO-bound workload I've tested with MySQL 5.7 and 8.0, configuring 16 BP instances with 16 page cleaners and an LRU depth setting matching the required free page rate, I've never observed lower TPS compared to Percona..

Single Page Flushing -- historically, in InnoDB, when a user thread was not able to get a free page for its data, it performed a "single page flush" itself, expecting to get a free page sooner. The motivation behind this approach was "better to try to do something than to do nothing". And this was blamed so often.. -- while, again, the problem is largely exaggerated, because the only real issue here comes from the historically "limited space" for single page flushes in the DoubleWrite Buffer, and that's all. To be honest, making this option configurable would allow anyone to evaluate it very easily and decide whether to keep it ON or not based on their own results ;-))

DoubleWrite Buffer -- probably one of the biggest historical PITA in InnoDB.. The feature is implemented to guarantee "atomic" page writes (i.e. to avoid partially written pages, each page is written first to the DoubleWrite (DBLWR) area, and only then to its real place in the data file). It was still "good enough" while storage was very slow, but quickly became a bottleneck on faster storage. However, you will not observe this bottleneck on every workload: even though you have to write your data twice, as long as your storage is able to keep up and you don't have waits on IO writes (e.g. neither on REDO space nor on free pages), your overall TPS will not be impacted ;-)) The impact generally becomes visible starting from 64 concurrent users (really *concurrent*, i.e. doing things at the same time). Anyway, we already addressed this issue for MySQL 5.7, but our fix arrived after the GA date, so it was not delivered with 5.7. At the same time Percona delivered their "Parallel DoubleWrite", solving the problem for Percona Server 5.7 -- lucky guys, kudos for timing! ;-))

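For readers who have never looked at why the page is written twice, here is a minimal Python sketch of the idea. It is a toy model under loud assumptions, not InnoDB's implementation: `ToyStorage`, `make_page`, `is_valid` and the `crash_after` parameter are hypothetical, pages are 32 bytes instead of 16KB, and the write to the DBLWR area is assumed to always complete.

```python
import zlib

PAGE_SIZE = 32  # toy page size in bytes, last 4 bytes hold a crc32


def make_page(payload: bytes) -> bytes:
    """Pad the payload to a full page body and append its crc32 checksum."""
    body = payload.ljust(PAGE_SIZE - 4, b"\0")
    return body + zlib.crc32(body).to_bytes(4, "big")


def is_valid(page: bytes) -> bool:
    """A page is intact if the stored checksum matches its body."""
    if len(page) != PAGE_SIZE:
        return False
    return zlib.crc32(page[:-4]).to_bytes(4, "big") == page[-4:]


class ToyStorage:
    def __init__(self):
        self.dblwr = {}     # page_no -> copy in the doublewrite area
        self.datafile = {}  # page_no -> page at its real place

    def write_page(self, page_no, page, crash_after=None):
        """Write to DBLWR first, then to the data file.

        crash_after=N simulates a crash after only N bytes of the
        second write reached the data file (a partially written page).
        """
        self.dblwr[page_no] = page  # 1st write (assumed complete here)
        if crash_after is not None:
            old = self.datafile.get(page_no, bytes(PAGE_SIZE))
            self.datafile[page_no] = page[:crash_after] + old[crash_after:]
            return
        self.datafile[page_no] = page  # 2nd write

    def recover(self):
        """On restart, restore every torn page from its intact DBLWR copy."""
        restored = []
        for page_no, copy in self.dblwr.items():
            if not is_valid(self.datafile.get(page_no, b"")) and is_valid(copy):
                self.datafile[page_no] = copy
                restored.append(page_no)
        return restored


s = ToyStorage()
s.write_page(7, make_page(b"balance=100"))
s.write_page(7, make_page(b"balance=250"), crash_after=9)  # torn write
print(is_valid(s.datafile[7]))  # the mixed old/new page fails its checksum
print(s.recover())              # page 7 is restored from the DBLWR copy
```

The price is visible right in `write_page`: every page costs two writes, which is exactly why this becomes a bottleneck once the rest of the IO path is fast.
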
Now, why did we NOT make all these points the first priority for the MySQL 8.0 release?

- one main thing has changed since MySQL 8.0 -- for the first time in MySQL history we decided to move to a "continuous release" model!
- which means that we may still deliver new changes with every update ;-))
- (e.g. if the same had been possible with 5.7, the fix for DBLWR would already be here)
- however, we should also be "realistic", as we cannot deliver fundamental changes in updates..
- so, if any fundamental changes should be delivered, they should be made before the GA deadline
- and the most critical of such planned changes was our new REDO log implementation!
- (we can address DBLWR and other changes later, but redesigning REDO is a much more complex story ;-))
- so, yes, we're aware of all the real issues we have, and we know how to fix them -- and it's only a question of time now..

So, now let's see which of the listed issues are real problems and how much impact each one has ;-))

For the following investigation I'll use:

- the same 48cores-HT 2S Skylake server as before
- x2 Optane drives used together as a single RAID-0 volume via MDM
- same OL7.4, EXT4
- Sysbench 50M x 8-tables data volume (same as I used before, and then Vadim)
- similar my.conf, but with a few changes:
  - trx_commit=1 (flush REDO on every COMMIT as before)
  - PFS=on (Performance Schema)
  - checksums=on (crc32)
  - doublewrite=off/on (to validate the impact)
  - binlog=off/on & sync_binlog=1 (to validate the impact as well)

Test scenarios:

- Concurrent users: 32, 64, 128
- Buffer Pool: 128GB / 32GB
- Workload: Sysbench OLTP_RW 50Mx8tab (100GB)
- Config variations:
  - 1) base config + dblwr=0 + binlog=0
  - 2) base config + dblwr=1 + binlog=0
  - 3) base config + dblwr=0 + binlog=1
  - 4) base config + dblwr=1 + binlog=1
  - where base config: trx_commit=1 + PFS=on + checksums=on
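
The scenarios above form a small test matrix, and it may help to see it enumerated. The Python sketch below is only an illustration: `build_matrix` and the dictionary keys are hypothetical names reusing the shorthand from the list (they are not real my.conf option names), and the `fully_cached` flag is a rough assumption that simply compares BP size to the 100GB data volume.

```python
from itertools import product

DATASET_GB = 100             # Sysbench OLTP_RW 50M x 8 tables
USERS = [32, 64, 128]        # concurrent users (load levels)
BUFFER_POOL_GB = [128, 32]
BASE = {"trx_commit": 1, "PFS": "on", "checksums": "on"}

# (dblwr, binlog) pairs for config variations 1) to 4)
VARIATIONS = [(0, 0), (1, 0), (0, 1), (1, 1)]


def build_matrix():
    """Return one dict per test run: BP size x config variation x load level."""
    runs = []
    for bp_gb, (dblwr, binlog), users in product(BUFFER_POOL_GB, VARIATIONS, USERS):
        runs.append({
            **BASE,
            "bp_gb": bp_gb,
            "dblwr": dblwr,
            "binlog": binlog,
            "users": users,
            # Rough assumption: the dataset is cached if it fits in the BP.
            "fully_cached": bp_gb >= DATASET_GB,
        })
    return runs


runs = build_matrix()
print(len(runs))  # prints 24
print(runs[0])
```

So each engine goes through 24 runs: 12 with the data fully cached (BP=128GB) and 12 IO-bound (BP=32GB).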

NOTE: I did not build "user friendly" charts for the following test results -- all the graphs represent real TPS (Commit/sec) stats collected during the tests, and match 3 load levels: 32, 64, and 128 concurrent users.

128GB BUFFER POOL

So, let's start with Buffer Pool = 128GB:

OLTP_RW-50Mx8tab | BP=128G, trx=1, dblwr=0, binlog=0

Comments:

- with a Buffer Pool (BP) of 128GB we keep the whole dataset "cached" (so, no IO reads)
- NOTE: PFS=on and checksums=on -- as TPS is mostly the same as in the previous test on the same data volume (where both PFS & checksums were OFF), they have no visible impact here
- also, from the previous test you can see that even if the data is fully cached in BP, the storage used still has an impact -- the result on Intel SSD was way lower than on Intel Optane
- so, in the current test result you can see that MySQL 8.0 also benefits more from faster storage than Percona Server, even if the given test is mostly about REDO related improvements ;-))

And now, let's switch DoubleWrite=ON:
