
Monday, 11 April, 2011

MySQL Performance: 5.6 Notes, part 1 - Discovery...

I was lucky to try the MySQL 5.6 features ahead of time, and here are my notes about the performance improvements and pending issues I observed on my benchmark workloads.. The story turned out to be quite captivating, and so too long for a single blog post :-)) so I've decided to split it into several parts and will publish all of them over the day..

Here is the first part: the discovery of MySQL 5.6.2 as it is..

The new MySQL 5.6 version brings many "fundamental" improvements within the InnoDB code:

- first of all, the "kernel_mutex" was split! - it was one of the hottest contention points observed in MySQL 5.5 and limited InnoDB performance in so many cases..

- purge processing became multi-threaded! - so we may expect purge lag to disappear, or at least to be reduced considerably, on heavy writes..

- page cleaning and flushing activity was removed from the Master Thread and assigned to the Page Cleaner (dedicated thread)

- METRICS table was introduced to make monitoring easier ;-))

- Performance Schema was improved and extended with a lot of new stuff..

There are other new features as well, but the ones listed above were at the top of my list to test, to see what kind of new limits or contentions we'll discover now in 5.6 :-)) - as I always say to our customers when they come to our Benchmark Center: "you may have a brilliant idea, but it may still be killed by a poor or suboptimal implementation.."

HW Platform - For my tests I used a 48-core AMD box running Oracle Linux 5.5 with a fast enough I/O level (Sun's Flash cards were used here, but I'm skipping the details because I'm not looking for I/O performance here; I want to stress MySQL to see the existing contentions within its code..) - so I'm not using O_DIRECT, to avoid running into the I/O issues I've observed in the previous tests :-))

SW Configuration : I did not use any system settings different from the defaults, and again (to be compatible) used the EXT4 filesystem. The following "base" MySQL configuration settings were used:

[mysqld]

#-------------------------------------------------------

max_connections=2000

key_buffer_size=200M

low_priority_updates=1

sort_buffer_size = 2097152

table_open_cache = 8000

# files

innodb_file_per_table

innodb_log_file_size=1024M

innodb_log_files_in_group=3

innodb_open_files=4000

# buffers

innodb_buffer_pool_size=16000M

innodb_buffer_pool_instances=16

innodb_additional_mem_pool_size=20M

innodb_log_buffer_size=64M

# tune

innodb_checksums=0

innodb_doublewrite=0

innodb_support_xa=0

innodb_thread_concurrency=0

innodb_flush_log_at_trx_commit=2

### innodb_flush_method= O_DIRECT

innodb_max_dirty_pages_pct=50

### innodb_change_buffering= none

# perf special

innodb_adaptive_flushing=1

innodb_read_io_threads = 16

innodb_write_io_threads = 16

innodb_io_capacity = 4000

innodb_purge_threads=1

### innodb_max_purge_lag=400000

### innodb_adaptive_hash_index = 0

# transaction isolation

### transaction-isolation = REPEATABLE-READ

### transaction-isolation = READ-COMMITTED

### transaction-isolation = READ-UNCOMMITTED

# Monitoring via METRICS table since MySQL 5.6

innodb_monitor_enable = '%'

#-------------------------------------------------------

All these settings were used by default in every test, unless other details are given..

Load Generator : I don't think you'll be surprised that I used dbSTRESS :-)) (as usual).. My initial plan was to test all the workloads with various scenarios, and I was especially curious to see what the next bottleneck would be on the workloads which were previously limited by the contention on the "kernel_mutex" (you may have a look at my previous posts about it)..

How to read the following graphs :

- first of all, there is a title on each graph ;-)

- then the legend on the right side explaining the meaning of curves..

- and then, if nothing else is specified, I tested a step-by-step growing workload, where each step had twice as many concurrent user sessions as the previous one..
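The step-by-step load mentioned in the last point can be sketched as a doubling series of session counts. This is only an illustration: the function name `session_steps` and the 1 to 512 range are my assumptions (512 is simply the highest session count mentioned in this post), not dbSTRESS options.

```python
from typing import List


def session_steps(first: int, last: int) -> List[int]:
    """Return session counts doubling from `first` up to `last` (inclusive)."""
    if first < 1 or last < first:
        raise ValueError("expected 1 <= first <= last")
    steps = []
    n = first
    while n <= last:
        steps.append(n)
        n *= 2  # each step runs twice as many concurrent sessions
    return steps


# Hypothetical range for illustration only.
print(session_steps(1, 512))
```

So a point at "32 sessions" on a graph is the sixth load step when starting from a single session.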

So, it's time for testing now ;-)

First bad surprise..

Well, the first bad surprise came right at the beginning, on the very first Read-Only probe tests - MySQL 5.6 was way slower than 5.5!.. and the main killing factor was a hugely increased contention on the "btr_search_latch" mutex..

I was very surprised to see it so hot on the Read-Only workload.. - well, not entirely, as in my understanding this contention usually appeared until now while new data pages were being loaded into the buffer pool, so even with a Read-Only activity the contention was still observed until most of the pages were in the buffer pool..

My feeling here is that before the split, the "kernel_mutex" limited the impact of the "btr_search_latch", as there were two contentions instead of one :-)) but now that the "kernel_mutex" contention is gone, sessions are fighting harder for the "btr_search_latch".. - and I don't know why this contention still remains even once all the accessed pages have already been loaded into the buffer pool?..

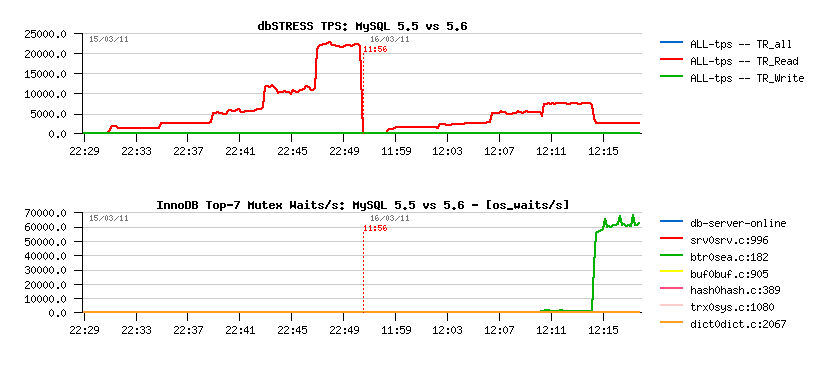

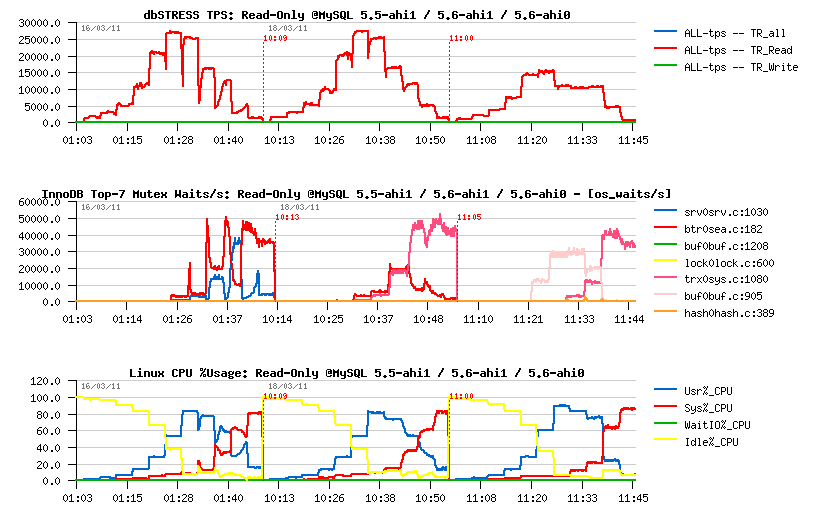

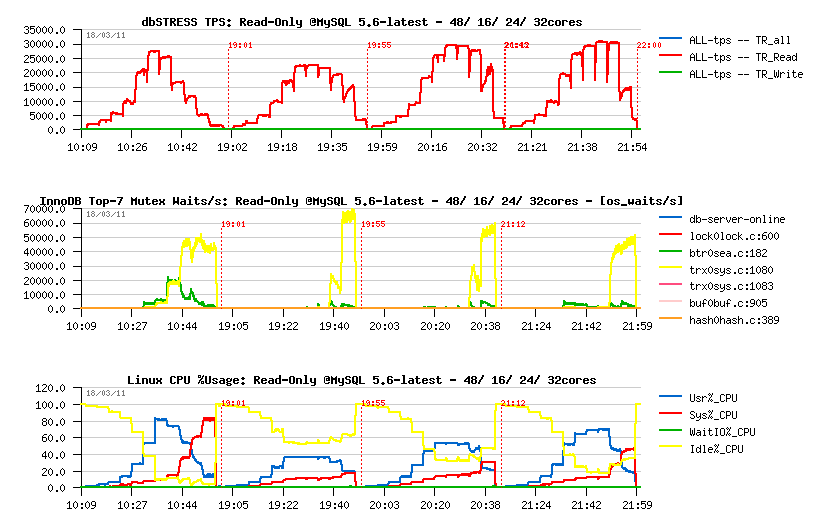

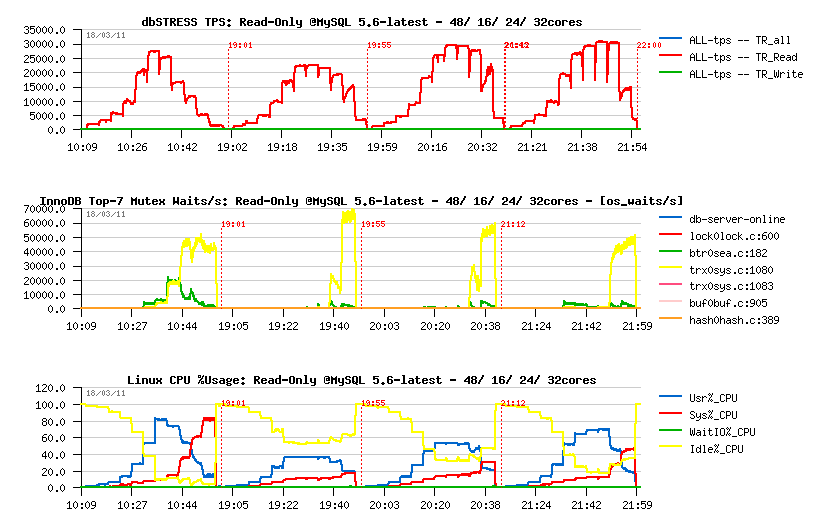

Here are the TPS and Mutex Waits graphs from the beginning of the Read-Only test, just from 1 to 16 users, and it's clearly seen that the performance degradation already starts at 16 users:

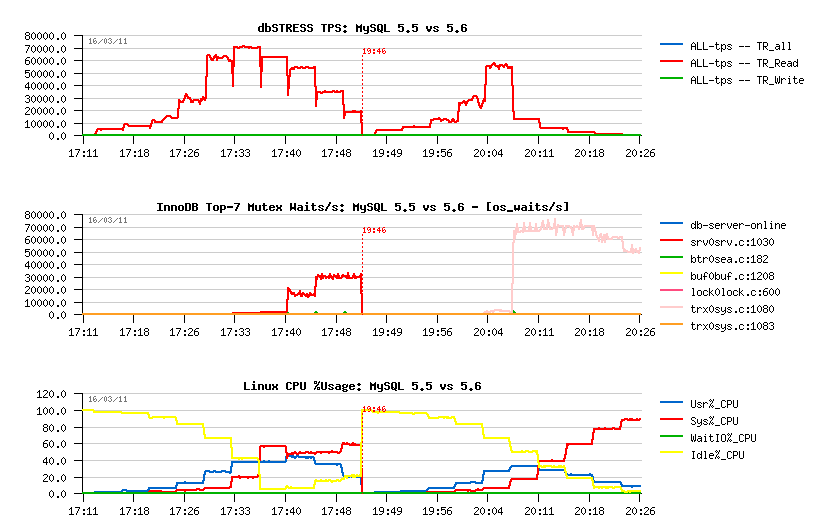

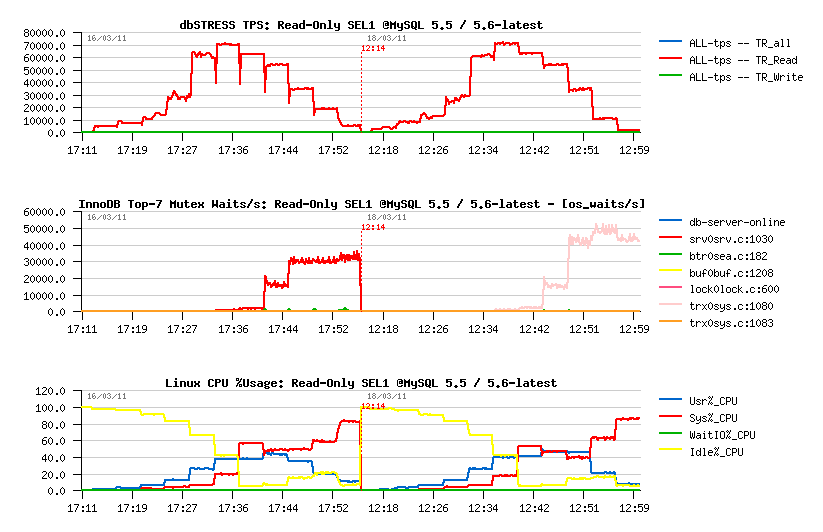

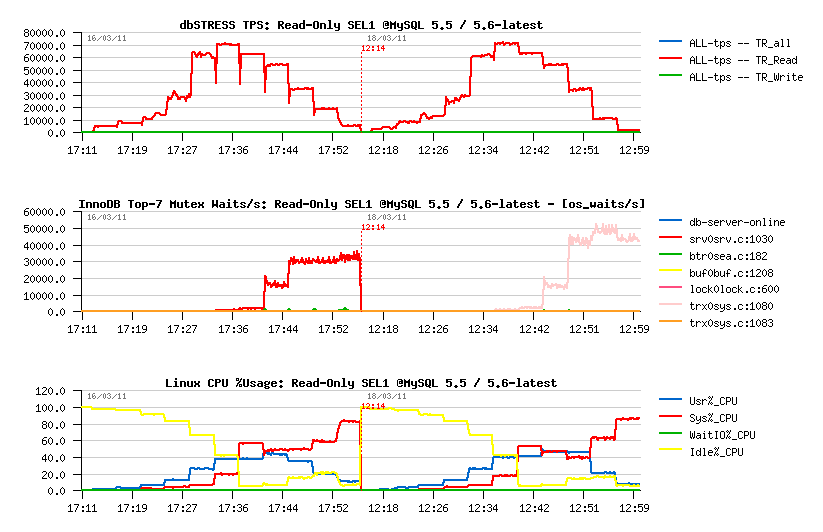

On the workload scenario which was previously blocked by the "kernel_mutex" contention in MySQL 5.5, things are not yet better on 5.6, now due to the "trx_sys" mutex contention:

The workload is still the same Read-Only scenario, but we're executing only "simple" queries here (SEL1 - a single line join).. As you see, on 5.5 performance is limited by growing kernel_mutex waits. But on 5.6 we're hitting the "trx_sys" contention from 32 concurrent sessions onwards..

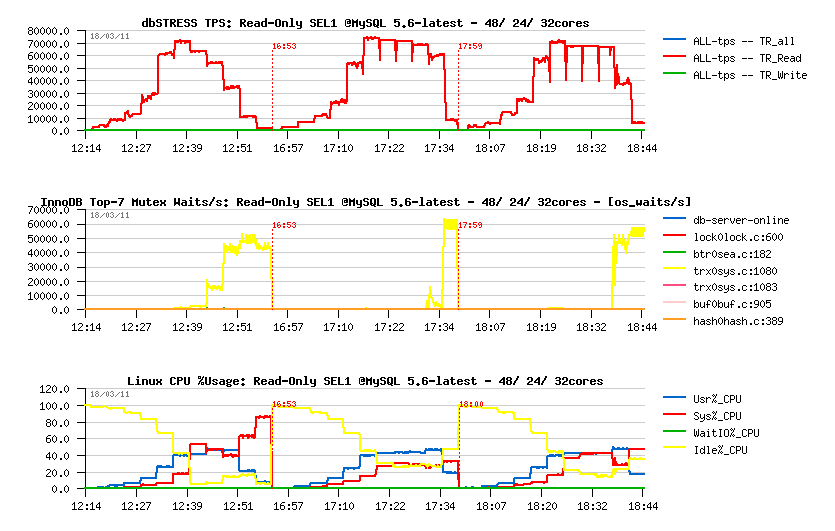

At the same time I was curious why this issue was not seen before on other workloads, like sysbench for example, and realized that you need a server with at least 24 cores. For example, if I limit MySQL to only 16 cores, the issue is nearly gone (which is normal, as I'm forcibly limiting the concurrency)..
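The post does not say which tool was used to limit MySQL to a given number of cores. As a minimal sketch, assuming the Linux `taskset` utility is used for it, the launch command could be built like this (the helper name `taskset_command` and the `mysqld` arguments are hypothetical):

```python
import shlex
from typing import List


def taskset_command(n_cores: int, command: List[str]) -> List[str]:
    """Prefix `command` so that it is started pinned to CPUs 0..n_cores-1."""
    if n_cores < 1:
        raise ValueError("n_cores must be >= 1")
    # taskset -c accepts a CPU list such as "0" or "0-15"
    cpu_list = "0" if n_cores == 1 else f"0-{n_cores - 1}"
    return ["taskset", "-c", cpu_list] + command


# Assumed mysqld invocation, for illustration only.
mysqld = ["mysqld", "--defaults-file=/etc/my.cnf"]
for cores in (16, 24, 48):
    print(shlex.join(taskset_command(cores, mysqld)))
```

Note that a contiguous range of CPU IDs does not necessarily map to whole sockets; the CPU numbering depends on the machine, so the list may need adjusting to the real topology.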

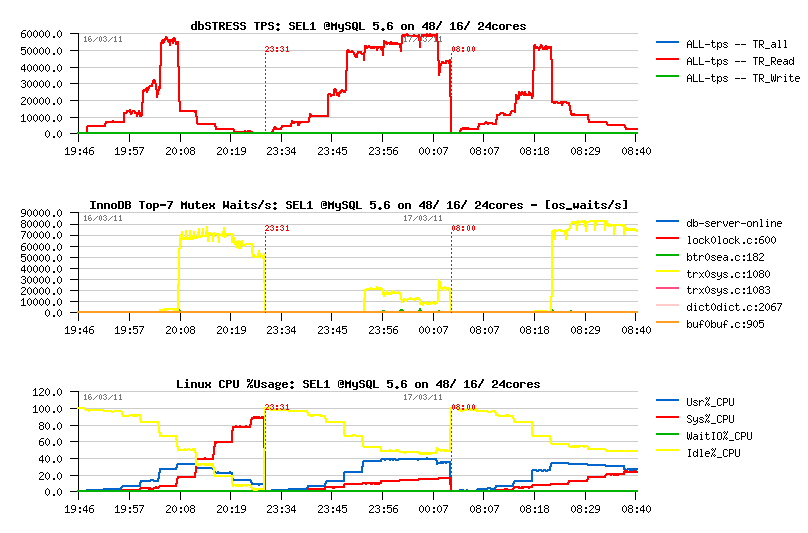

Here is the graph of the same test, but with the MySQL server running on 48, 16 and 24 cores:

As you see, the trx_sys contention can be reduced here by limiting MySQL to 16 cores, but I cannot consider it a solution.. MySQL 5.6 should scale much further than 5.5, so let's forget about workarounds :-))

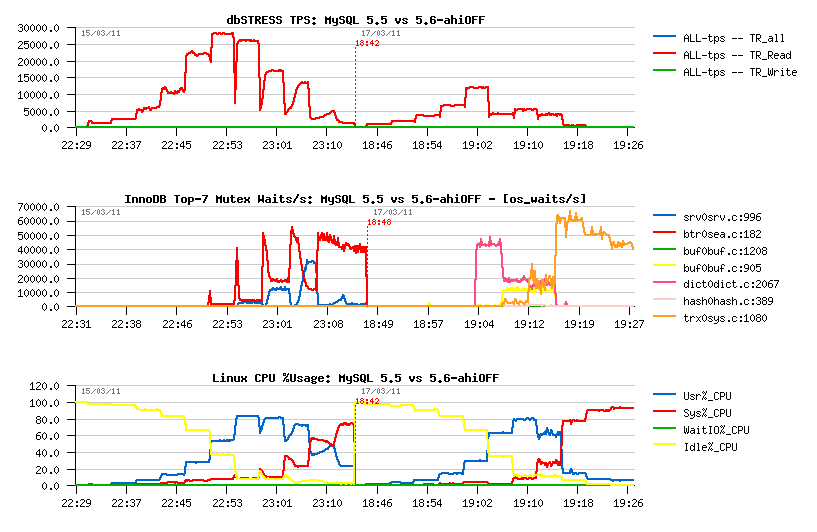

Next step: the "btr_search_latch" mutex is related to the Adaptive Hash Index (AHI) feature. Many people advise disabling AHI to remove any use of the "btr_search_latch" mutex within the InnoDB code, and some even report better performance when AHI is turned OFF.. - Well, personally, until now I've only seen performance degradation with AHI=OFF, but things may be different on different workloads.. In my case it's not helping (the result is better compared to the initial one, but still worse than with 5.5):

Retesting with innodb_adaptive_hash_index=OFF :

- yes, it removes the "btr_search_latch" bottleneck!!

- then I expected to see the buffer pool contention as it was in 5.5 before.. - but if I remember well, this part of the code was improved in 5.6, so on 16 sessions TPS continued to increase ;-)) but then on 32 sessions TPS dropped due to "index mutex" locks (initially created within the dictionary).. - then, with a growing load, the "trx_sys" contention kills performance completely..

MySQL 5.6.2-latest version

Finally, I was lucky to discover that I had not used the "latest" 5.6 trunk - as you can see, things are changing very quickly in 5.6 development, and the issues I had observed were already fixed one week earlier.. :-))

Retesting with the MySQL 5.6.2-latest version:

Observations:

- the high contention on the btr_search_latch mutex at 16 sessions is gone!

- contention on the btr_search_latch mutex now starts at 32 concurrent sessions, as before..

- from 64 sessions the trx_sys mutex contention is added too..

- from 256 sessions the trx_sys mutex contention is dominating..

- disabling AHI completely removes the btr_search_latch mutex contention, but it does not improve performance:

  - even before any other contention comes into play, the TPS level is already lower when AHI=OFF (for ex.: on 8 sessions TPS is 7,500 with AHI=OFF, while it's 10,000 with AHI=ON)

  - then we hit a contention on the buffer pool index..

    NOTE: buffer pool mutex contention may be very random - it all depends on how the data arrived in the buffer pool.. - if one of the BP instances is accessed more than the others, we get back to a situation similar to a single BP instance..

  - then once again the trx_sys mutex takes a dominating position..
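To illustrate the NOTE about buffer pool instances: here is a toy sketch of how a few hot pages may concentrate most accesses on one instance. The modulo mapping in `instance_of` is only a stand-in assumption for "some hash of the page id", not InnoDB's real function, and the access patterns are made up.

```python
from collections import Counter
from typing import Iterable

N_INSTANCES = 16  # innodb_buffer_pool_instances in the config used here


def instance_of(page_id: int, n_instances: int = N_INSTANCES) -> int:
    """Stand-in mapping of a page to a buffer pool instance (assumption)."""
    return page_id % n_instances


def busiest_share(accesses: Iterable[int], n_instances: int = N_INSTANCES) -> float:
    """Fraction of all page accesses that land on the busiest instance."""
    counts = Counter(instance_of(p, n_instances) for p in accesses)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no accesses given")
    return max(counts.values()) / total


# Made-up patterns: evenly spread pages vs. one very hot page.
uniform = list(range(1600))
skewed = [0] * 900 + list(range(1, 101))

print(f"uniform: {busiest_share(uniform):.3f}")
print(f"skewed:  {busiest_share(skewed):.3f}")
```

With the evenly spread pattern each instance serves 1/16 of the accesses, while with the hot page a single instance serves about 90% of them, so its mutex behaves almost like the mutex of a single buffer pool.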

Questions:

- why do we still have contention on the btr_search_latch mutex even once all the accessed pages are already loaded into the buffer pool ???

- any idea about reducing the trx_sys mutex contention ??..

And now retesting the SEL1 Read-Only:

It's way better than before, but performance is still limited by the

"trx_sys" mutex contention..

NOTE: testing with the READ COMMITTED and READ UNCOMMITTED transaction isolation levels changed absolutely nothing..

And now the same test on 5.6-latest, but with the MySQL server limited to 24 and 32 cores:

Observations :

- on 24 cores the ~max TPS level is kept up to 512 concurrent sessions

- on 32 cores it's up to 256 sessions only..

- on 48 cores performance is already decreasing from 64 sessions..

And now retesting the initial Read-Only test on 16, 24 and 32 cores as well:

What is interesting: limiting thread concurrency by binding the MySQL process to fewer cores even improved the peak performance obtained by 5.6 on this test!..

To be continued.. (see part #2)..
